/lmg/ - Local Models General

Anonymous 01/20/25(Mon)08:18:15 | 461 comments | 49 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103959928 & >>103947482

►News

>(01/20) DeepSeek releases R1, R1 Zero, & finetuned Qwen and Llama models: https://hf.co/deepseek-ai/DeepSeek-R1-Zero

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72B

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/outetts-03-6786b1ebc7aeb757bc17a2fa

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b-instruct

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103959928 & >>103947482

►News

>(01/20) DeepSeek releases R1, R1 Zero, & finetuned Qwen and Llama models: https://hf.co/deepseek-ai/DeepSeek-

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/ou

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/20/25(Mon)08:18:36 No.103967200

►Recent Highlights from the Previous Thread: >>103959928

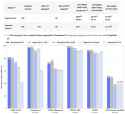

--DeepSeek-R1 distilled model benchmarks:

>103966823 >103966829 >103966837 >103966856 >103966868 >103966902

--DeepSeek-R1-Zero model size and optimization discussion:

>103964568 >103964620 >103964602 >103964658 >103964594 >103964653 >103964711 >103964748 >103964760 >103964753 >103964762

--LLMs, reasoning, and logic discussion:

>103961362 >103961401 >103961819 >103961891 >103962146 >103962605 >103961900 >103961490 >103961513 >103961549

--DeepSeek R1 release and AGI discussion:

>103964074 >103964138 >103964150 >103964217 >103964274 >103964423 >103964432 >103964314 >103964319 >103964332 >103964344 >103964357 >103964368 >103964395 >103964389 >103964152

--Using system RAM for chatbot models and performance limitations:

>103962830 >103962848 >103962871 >103962956 >103962937 >103962971 >103962990 >103963031 >103963009

--R1-lite-preview performance discussion and comparison to QwQ:

>103964089 >103964178 >103964182 >103964188 >103965765 >103964213

--Discussion on DeepSeek model relationships and sizes:

>103965685 >103965737 >103965774 >103965794 >103965854 >103966020 >103966042

--OpenAI's "super-agents" announced, anons skeptical:

>103961699 >103961878 >103962728 >103962741 >103962778

--Anon discusses sentiment analysis with LLMs, says its nothing new:

>103961655 >103961669 >103961743 >103961911 >103962020

--Discussion on potential AI ban and its implications:

>103964655 >103964662 >103964686 >103964687

--DeepSeek-V3 development and improvements over V3:

>103964452 >103964470 >103964480

--Anon shares DeepSeek-R1-Zero and 600B+ DeepSeek-R1 model:

>103964519 >103964622 >103964625 >103964628

--Logs: R1:

>103966457 >103966602 >103966684

--Miku (free space):

>103960864 >103962504 >103964528 >103965178

►Recent Highlight Posts from the Previous Thread: >>103959933

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--DeepSeek-R1 distilled model benchmarks:

>103966823 >103966829 >103966837 >103966856 >103966868 >103966902

--DeepSeek-R1-Zero model size and optimization discussion:

>103964568 >103964620 >103964602 >103964658 >103964594 >103964653 >103964711 >103964748 >103964760 >103964753 >103964762

--LLMs, reasoning, and logic discussion:

>103961362 >103961401 >103961819 >103961891 >103962146 >103962605 >103961900 >103961490 >103961513 >103961549

--DeepSeek R1 release and AGI discussion:

>103964074 >103964138 >103964150 >103964217 >103964274 >103964423 >103964432 >103964314 >103964319 >103964332 >103964344 >103964357 >103964368 >103964395 >103964389 >103964152

--Using system RAM for chatbot models and performance limitations:

>103962830 >103962848 >103962871 >103962956 >103962937 >103962971 >103962990 >103963031 >103963009

--R1-lite-preview performance discussion and comparison to QwQ:

>103964089 >103964178 >103964182 >103964188 >103965765 >103964213

--Discussion on DeepSeek model relationships and sizes:

>103965685 >103965737 >103965774 >103965794 >103965854 >103966020 >103966042

--OpenAI's "super-agents" announced, anons skeptical:

>103961699 >103961878 >103962728 >103962741 >103962778

--Anon discusses sentiment analysis with LLMs, says its nothing new:

>103961655 >103961669 >103961743 >103961911 >103962020

--Discussion on potential AI ban and its implications:

>103964655 >103964662 >103964686 >103964687

--DeepSeek-V3 development and improvements over V3:

>103964452 >103964470 >103964480

--Anon shares DeepSeek-R1-Zero and 600B+ DeepSeek-R1 model:

>103964519 >103964622 >103964625 >103964628

--Logs: R1:

>103966457 >103966602 >103966684

--Miku (free space):

>103960864 >103962504 >103964528 >103965178

►Recent Highlight Posts from the Previous Thread: >>103959933

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/20/25(Mon)08:21:31 No.103967221

>>103967183

Don't know what kind but there's some writing in there.

Don't know what kind but there's some writing in there.

Anonymous 01/20/25(Mon)08:22:24 No.103967228

>>103967217

Hope thats the case. Finally something fresh sub 40b. It was getting stale.

Hope thats the case. Finally something fresh sub 40b. It was getting stale.

Anonymous 01/20/25(Mon)08:23:12 No.103967238

R1 will be 2x the price of V3 it looks like. If they really did fix its issues though then it will be worth it

Anonymous 01/20/25(Mon)08:23:39 No.103967240

>>103967221

huh, well gotta wait and see. i was really suprised my v3. i couldnt stand the other chink models because of the slop and dryness. i suspect they train it on rp.

huh, well gotta wait and see. i was really suprised my v3. i couldnt stand the other chink models because of the slop and dryness. i suspect they train it on rp.

Anonymous 01/20/25(Mon)08:25:17 No.103967251

>>103967238

Same price for cache hits as V3 though it seems, so will be pretty cheap still it looks like

Same price for cache hits as V3 though it seems, so will be pretty cheap still it looks like

Anonymous 01/20/25(Mon)08:27:35 No.103967266

Looks like it might already be up

https://api-docs.deepseek.com/guides/reasoning_model

https://api-docs.deepseek.com/api/create-chat-completion

https://api-docs.deepseek.com/guide

https://api-docs.deepseek.com/api/c

Anonymous 01/20/25(Mon)08:28:04 No.103967271

>>103967228

I'm tempted to still go with the 70B just because my current daily driver is an L3.3 tune, but either way, this does look like the next big thing in localland.

I'm tempted to still go with the 70B just because my current daily driver is an L3.3 tune, but either way, this does look like the next big thing in localland.

Anonymous 01/20/25(Mon)08:28:36 No.103967275

>>103967266

it is

it is

Anonymous 01/20/25(Mon)08:29:12 No.103967280

>>103967240

I'm confident there's RP in the Llama3 SFT data too, they're just not advertising it.

I'm confident there's RP in the Llama3 SFT data too, they're just not advertising it.

Anonymous 01/20/25(Mon)08:30:14 No.103967291

R1 is MIT licensed

Anonymous 01/20/25(Mon)08:30:24 No.103967292

Wish we would have gotten a deepseek distill to nemo or mistral-small.

Anonymous 01/20/25(Mon)08:30:37 No.103967295

>>103967291

lmfao

lmfao

Anonymous 01/20/25(Mon)08:30:46 No.103967297

How do I make R1 work with sillytavern?

Anonymous 01/20/25(Mon)08:31:13 No.103967301

>>103967292

The Qwen distill will have you eating well, brother

The Qwen distill will have you eating well, brother

Anonymous 01/20/25(Mon)08:32:50 No.103967317

>>103967301

I hope so, qwen is really dry but I'm open to kneeling deeply.

I hope so, qwen is really dry but I'm open to kneeling deeply.

Anonymous 01/20/25(Mon)08:36:46 No.103967338

>reasoner is super horny in a good way. I had to tune my preset way down

aicg complaining that its too horny. these fucking chinks man.

aicg complaining that its too horny. these fucking chinks man.

Anonymous 01/20/25(Mon)08:37:41 No.103967349

You know what would have been interesting to see? If they had trained the a distil on top of qwen with linear attention.

I wonder if a full fine tune wit ha couple billion tokens could make that perform better.

Regardless, now we just need a release with true 1m context early in the year and 2025 will be off to an amazing fucking start.

I wonder if a full fine tune wit ha couple billion tokens could make that perform better.

Regardless, now we just need a release with true 1m context early in the year and 2025 will be off to an amazing fucking start.

Anonymous 01/20/25(Mon)08:38:45 No.103967357

>>103967338

For those that want a character to be more than a fuckdoll, a model being too horny is a valid issue though.

For those that want a character to be more than a fuckdoll, a model being too horny is a valid issue though.

Anonymous 01/20/25(Mon)08:39:27 No.103967365

>>103967349

>now we just need a release with true 1m context

Isn't MiniMax exactly that? It seemed to hold up really well in the needle in a haystack tests up to 1m

>now we just need a release with true 1m context

Isn't MiniMax exactly that? It seemed to hold up really well in the needle in a haystack tests up to 1m

Anonymous 01/20/25(Mon)08:40:15 No.103967377

>>103967365

I assume he was talking about local models

I assume he was talking about local models

Anonymous 01/20/25(Mon)08:40:19 No.103967378

>>103967349

>Regardless, now we just need a release with true 1m context early in the year and 2025 will be off to an amazing fucking start.

https://huggingface.co/MiniMaxAI/MiniMax-Text-01

>Regardless, now we just need a release with true 1m context early in the year and 2025 will be off to an amazing fucking start.

https://huggingface.co/MiniMaxAI/Mi

Anonymous 01/20/25(Mon)08:41:44 No.103967385

>>103967365

>>103967378

Oh yeah, I forgot about that since I'm stuck with smaller models.

Then yeah, fucking shit, 2025 is already shaping up to be a great fucking year for LMG.

Sick as hell.

>>103967378

Oh yeah, I forgot about that since I'm stuck with smaller models.

Then yeah, fucking shit, 2025 is already shaping up to be a great fucking year for LMG.

Sick as hell.

Anonymous 01/20/25(Mon)08:42:00 No.103967387

>>103967377

Anon...

Anon...

Anonymous 01/20/25(Mon)08:43:06 No.103967396

>>103967357

yes, ideally a model that can sniff out what you want even without a explicit prompt.

but chink models needed to be guided by hand pretty forcefully to get them into a direction you want. not just sexually.

yes, ideally a model that can sniff out what you want even without a explicit prompt.

but chink models needed to be guided by hand pretty forcefully to get them into a direction you want. not just sexually.

Anonymous 01/20/25(Mon)08:44:40 No.103967410

So which one was the horny model?

Anonymous 01/20/25(Mon)08:45:48 No.103967430

Anonymous 01/20/25(Mon)08:46:50 No.103967442

>>103967238

They're increasing the price too much.

They're increasing the price too much.

Anonymous 01/20/25(Mon)08:46:53 No.103967443

wheres that bingo card ? dident it have something about beating o1 ? that can be crossed off too now

Anonymous 01/20/25(Mon)08:49:06 No.103967462

>>103967442

For hardcore usage on code ive spent $2 for 30M this month. It would have been like, what? Cache hits are the same so like $5?

For hardcore usage on code ive spent $2 for 30M this month. It would have been like, what? Cache hits are the same so like $5?

Anonymous 01/20/25(Mon)08:50:15 No.103967470

>>103967430

Now let's hope the distilled models aren't too sensitive to quantization.

Now let's hope the distilled models aren't too sensitive to quantization.

Anonymous 01/20/25(Mon)08:50:18 No.103967471

>To equip more efficient smaller models with reasoning capabilities like DeekSeek-R1, we directly fine-tuned open-source models like Qwen (Qwen, 2024b) and Llama (AI@Meta, 2024) using the 800k samples curated with DeepSeek-R1, as detailed in §2.3.3. Our findings indicate that this straightforward distillation method significantly enhances the reasoning abilities of smaller models.

>

>...

>

>For distilled models, we apply only SFT and do not include an RL stage, even though incorporating RL could substantially boost model performance. Our primary goal here is to demonstrate the effectiveness of the distillation technique, leaving the exploration of the RL stage to the broader research community.

The distilled models are half-assed, it's over before it even begun...

>

>...

>

>For distilled models, we apply only SFT and do not include an RL stage, even though incorporating RL could substantially boost model performance. Our primary goal here is to demonstrate the effectiveness of the distillation technique, leaving the exploration of the RL stage to the broader research community.

The distilled models are half-assed, it's over before it even begun...

Anonymous 01/20/25(Mon)08:51:08 No.103967479

Just gotta buy 3x digits to run R1 now lol.

Anonymous 01/20/25(Mon)08:51:58 No.103967486

>>103967479

the more you buy...

the more you buy...

Anonymous 01/20/25(Mon)08:52:02 No.103967487

>>103967471

>leaving the exploration of the RL stage to the broader research community.

So never gonna happen

>leaving the exploration of the RL stage to the broader research community.

So never gonna happen

Anonymous 01/20/25(Mon)08:53:04 No.103967501

Anonymous 01/20/25(Mon)08:53:19 No.103967505

How about you just do the thinking inside the model instead of using token salad?

Anonymous 01/20/25(Mon)08:54:16 No.103967509

>>103967505

Llama 4 Coconut is coming next month

Llama 4 Coconut is coming next month

Anonymous 01/20/25(Mon)08:54:25 No.103967512

>>103967505

that would require competence

that would require competence

Anonymous 01/20/25(Mon)08:55:01 No.103967517

>>103967140

It follows the CoT very well.

R1-Lite-Preview started rambling and confusing perspective after 1-2 turns. Full R1 stays stable with each turn.

It follows the CoT very well.

R1-Lite-Preview started rambling and confusing perspective after 1-2 turns. Full R1 stays stable with each turn.

Anonymous 01/20/25(Mon)08:56:52 No.103967524

>>103967505

so you'd rather have a black box cot where you don't see anything for however long it thinks and can't stop it mid-way if it goes retarded or starts looping?

so you'd rather have a black box cot where you don't see anything for however long it thinks and can't stop it mid-way if it goes retarded or starts looping?

Anonymous 01/20/25(Mon)08:58:08 No.103967537

>>103967517

that is really good. very impressive stuff.

thanks anon. first time i see llm thinking that doesn't feel like rambling. very cool.

that is really good. very impressive stuff.

thanks anon. first time i see llm thinking that doesn't feel like rambling. very cool.

Anonymous 01/20/25(Mon)08:58:11 No.103967538

Anonymous 01/20/25(Mon)08:58:43 No.103967543

>>103967479

Oh unfortunately you have to wait for Digits 2 EOY to be able to chain 3 together after registering, going through the KYC checks and paying for a subscription.

(+ $20 a month to enable R rated content generation)

Oh unfortunately you have to wait for Digits 2 EOY to be able to chain 3 together after registering, going through the KYC checks and paying for a subscription.

(+ $20 a month to enable R rated content generation)

Anonymous 01/20/25(Mon)08:59:04 No.103967545

Temp might be a bit high but soul...

Anonymous 01/20/25(Mon)08:59:23 No.103967548

gotta say a distill into llama and qwen was not expected and is by far the most interesting part of this to me

they used 3.3 too for the 70b and it appears to be like 90-95% of the capabilities of full r1 and I can actually run this shit locally at a high quant

they used 3.3 too for the 70b and it appears to be like 90-95% of the capabilities of full r1 and I can actually run this shit locally at a high quant

Anonymous 01/20/25(Mon)09:00:04 No.103967555

>>103967545

Also I'm using a story format so before someone says the inevitable "its talking for you" that is on purpose.

Also I'm using a story format so before someone says the inevitable "its talking for you" that is on purpose.

Anonymous 01/20/25(Mon)09:00:05 No.103967556

deepseek-reasoner over API seems to like to not use CoT for political questions, even in other languages or when they're not related to China.

Anonymous 01/20/25(Mon)09:00:19 No.103967558

>>103967538

2 more months

2 more months

Anonymous 01/20/25(Mon)09:00:31 No.103967559

how's sex with the 32b r1?

Anonymous 01/20/25(Mon)09:01:39 No.103967566

>>103967548

Yeah, stroke of genius if they want to ear the graces of the enthusiast hobbyist that might have a 4090 or the like.

Yeah, stroke of genius if they want to ear the graces of the enthusiast hobbyist that might have a 4090 or the like.

Anonymous 01/20/25(Mon)09:01:51 No.103967568

>>103967548

I was dooming when I saw the size of R1 but with the distills I'm now hmming.

I was dooming when I saw the size of R1 but with the distills I'm now hmming.

Anonymous 01/20/25(Mon)09:02:23 No.103967574

>>103967524

I would assume if internal thinking becomes the norm, tools would be developed to help visualize the internal state, or at least generate intermediary tokens for debugging purposes.

I would assume if internal thinking becomes the norm, tools would be developed to help visualize the internal state, or at least generate intermediary tokens for debugging purposes.

Anonymous 01/20/25(Mon)09:02:39 No.103967576

k-kino...

Anonymous 01/20/25(Mon)09:03:47 No.103967584

>>103967509

Nigga you'll be getting same old transformerslop with layerskip and special quantization that niggerganov won't implement. You aint getting no coconut LGBT.

Nigga you'll be getting same old transformerslop with layerskip and special quantization that niggerganov won't implement. You aint getting no coconut LGBT.

Anonymous 01/20/25(Mon)09:04:44 No.103967591

Anonymous 01/20/25(Mon)09:06:19 No.103967609

Brothers, ELIaR: how do I use CoT / R1's "deep thinking" locally?

Anonymous 01/20/25(Mon)09:09:20 No.103967639

>32b model that's better than claude

ok now tell me why it's bullshit because I have some trouble believing it

ok now tell me why it's bullshit because I have some trouble believing it

Anonymous 01/20/25(Mon)09:09:38 No.103967642

>>103967639

its tuned for code, not a generalist model like claude

its tuned for code, not a generalist model like claude

Anonymous 01/20/25(Mon)09:10:57 No.103967654

>>103967642

I think most people use claude for code rather than a generalist model desu

I think most people use claude for code rather than a generalist model desu

Anonymous 01/20/25(Mon)09:12:47 No.103967671

>>103967654

actually most people fuck claude

actually most people fuck claude

Anonymous 01/20/25(Mon)09:13:25 No.103967677

>>103967671

poor fucks don't know what's good then

poor fucks don't know what's good then

Anonymous 01/20/25(Mon)09:14:14 No.103967680

R1 is fucking GOOD bros... like claude level creative. I actually had to turn the temp down a bit like it was mistral nemo

Anonymous 01/20/25(Mon)09:15:25 No.103967689

>>103967680

r1 doesn't take in temp as an argument though? or am i high

r1 doesn't take in temp as an argument though? or am i high

Anonymous 01/20/25(Mon)09:15:32 No.103967690

Anonymous 01/20/25(Mon)09:16:32 No.103967702

>>103967690

aren't distils the only option for vram/ramlets?

aren't distils the only option for vram/ramlets?

Anonymous 01/20/25(Mon)09:16:33 No.103967703

>>103967680

Which distill?

Which distill?

Anonymous 01/20/25(Mon)09:16:51 No.103967706

>>103967702

anon, but then it's not R1. can you properly tell exactly which distill you're using? R1 is only when you use the actual R1

anon, but then it's not R1. can you properly tell exactly which distill you're using? R1 is only when you use the actual R1

Anonymous 01/20/25(Mon)09:17:29 No.103967709

>>103967703

R1, not distill, sorry

R1, not distill, sorry

Anonymous 01/20/25(Mon)09:18:01 No.103967711

>>103967709

R1 doesn't have a temp parameter, if you're using some proxy/etc, it's just ignoring it.

R1 doesn't have a temp parameter, if you're using some proxy/etc, it's just ignoring it.

Anonymous 01/20/25(Mon)09:18:20 No.103967713

>>103967709

You just admitted to trolling, retard

You just admitted to trolling, retard

Anonymous 01/20/25(Mon)09:18:39 No.103967715

>>103967706

true, I wish people were more specific about which models they're using

helps casual users like me with lazily making use of anon's vast coomer/programming/... experience in this rapidly moving giant ecosystem

true, I wish people were more specific about which models they're using

helps casual users like me with lazily making use of anon's vast coomer/programming/... experience in this rapidly moving giant ecosystem

Anonymous 01/20/25(Mon)09:18:45 No.103967718

>>103967713

he might be an aicg guy

he might be an aicg guy

Anonymous 01/20/25(Mon)09:19:03 No.103967720

>>103967690

Neither.

Neither.

Anonymous 01/20/25(Mon)09:19:12 No.103967721

>>103967689

>r1 doesn't take in temp as an argument though?

you can do some override fuckery so it werks

i can't remember how it works anymore, some other anon pls advise

>r1 doesn't take in temp as an argument though?

you can do some override fuckery so it werks

i can't remember how it works anymore, some other anon pls advise

Anonymous 01/20/25(Mon)09:19:29 No.103967724

>>103967639

it's not bullshit, you're just falling into the classic trap of thinking "scores better on these benchmarks" is the same thing as "is better"

is it really that crazy to think that a reasoning model would do better than a non reasoning model on math, code, and academic questions?

it's not bullshit, you're just falling into the classic trap of thinking "scores better on these benchmarks" is the same thing as "is better"

is it really that crazy to think that a reasoning model would do better than a non reasoning model on math, code, and academic questions?

Anonymous 01/20/25(Mon)09:19:30 No.103967725

Anonymous 01/20/25(Mon)09:19:37 No.103967726

>>103967720

Then congratulations, anon, it's placebo. Deepseek R1 in API does NOT support temperature.

Then congratulations, anon, it's placebo. Deepseek R1 in API does NOT support temperature.

Anonymous 01/20/25(Mon)09:20:03 No.103967728

Anonymous 01/20/25(Mon)09:20:13 No.103967731

>It's the horse fucker

Anonymous 01/20/25(Mon)09:20:15 No.103967732

Anonymous 01/20/25(Mon)09:20:20 No.103967734

>>103967725

They haven't though? You're mixing up stuff. With V3 they announced that V3 is more expensive but they'll have the older pricing for some time as a special offer.

They haven't though? You're mixing up stuff. With V3 they announced that V3 is more expensive but they'll have the older pricing for some time as a special offer.

Anonymous 01/20/25(Mon)09:20:26 No.103967736

>>103967718

He's the pony fucker.

He's the pony fucker.

Anonymous 01/20/25(Mon)09:20:29 No.103967737

>>103967725

>they 7xed output and 2xed input.

>That's like a 9x increase in price.

that's not how math works

>they 7xed output and 2xed input.

>That's like a 9x increase in price.

that's not how math works

Anonymous 01/20/25(Mon)09:21:58 No.103967747

>>103967724

>is it really that crazy to think that a reasoning model would do better than a non reasoning model on math, code, and academic questions?

it is pretty fucking crazy that a 32b model is almost as good at coding than paypig o1 (which takes a fuckton more compute to run) and blows the fuck out of claude 3.5 which seem to be the go-to code model as far as internet reputation goes

nobody expected this to happen a year ago

>is it really that crazy to think that a reasoning model would do better than a non reasoning model on math, code, and academic questions?

it is pretty fucking crazy that a 32b model is almost as good at coding than paypig o1 (which takes a fuckton more compute to run) and blows the fuck out of claude 3.5 which seem to be the go-to code model as far as internet reputation goes

nobody expected this to happen a year ago

Anonymous 01/20/25(Mon)09:23:13 No.103967757

Anonymous 01/20/25(Mon)09:23:31 No.103967760

>>103967747

Benchmaxxed models always existed.

Benchmaxxed models always existed.

Anonymous 01/20/25(Mon)09:24:12 No.103967765

>>103967576

i think we all saw that coming. but the llm calling the story so far crack-infueled and unhinged is cool.

i think we all saw that coming. but the llm calling the story so far crack-infueled and unhinged is cool.

Anonymous 01/20/25(Mon)09:24:34 No.103967767

>>103967760

hence the "now tell me why it's bullshit" part

hence the "now tell me why it's bullshit" part

Anonymous 01/20/25(Mon)09:26:32 No.103967784

>>103967760

I thought the distills are a low effort thing they did for shits and giggles though? >>103967471

You think they bothered to benchmaxx them?

I thought the distills are a low effort thing they did for shits and giggles though? >>103967471

You think they bothered to benchmaxx them?

Anonymous 01/20/25(Mon)09:27:52 No.103967796

>>103967784

>You think they bothered to benchmaxx them?

everybody and their mother in muh ai space benchmaxxes the everliving shit out of anything they release

altman even bought a benchmark just so his hype machine had something juicy to advertise

>You think they bothered to benchmaxx them?

everybody and their mother in muh ai space benchmaxxes the everliving shit out of anything they release

altman even bought a benchmark just so his hype machine had something juicy to advertise

Anonymous 01/20/25(Mon)09:27:56 No.103967799

longer log of deepseek r1

Anonymous 01/20/25(Mon)09:28:02 No.103967801

>>103967731

i never understood his QwQ and qwen hype. those models are just bad.

but the logs of r1 seem very good so far.

i never understood his QwQ and qwen hype. those models are just bad.

but the logs of r1 seem very good so far.

Anonymous 01/20/25(Mon)09:29:25 No.103967808

>>103967639

Most of those are meme thinking specialists benches you will get a much better idea from livebench

Most of those are meme thinking specialists benches you will get a much better idea from livebench

Anonymous 01/20/25(Mon)09:29:41 No.103967810

Anonymous 01/20/25(Mon)09:29:53 No.103967812

>>103967747

>it is pretty fucking crazy that a 32b model is almost as good at coding than paypig o1 (which takes a fuckton more compute to run)

kind of but we also knew o1 mini existed and was almost as good at coding as o1 so it's not that mindblowing

>blows the fuck out of claude 3.5 which seem to be the go-to code model as far as internet reputation goes

once again, on well-scoped bench questions! not that it isn't impressive but what's good about claude is that it has tons of real world knowledge and intuition so that it can actually be helpful in real world cases. TBD whether R1 et al have that.

>>103967760

>>103967767

the benchmaxxing allegation is bandied around way too casually these days, often by people too dumb to interpret benchmark scores so they get mad when it isn't god-tier at everything in practice

>it is pretty fucking crazy that a 32b model is almost as good at coding than paypig o1 (which takes a fuckton more compute to run)

kind of but we also knew o1 mini existed and was almost as good at coding as o1 so it's not that mindblowing

>blows the fuck out of claude 3.5 which seem to be the go-to code model as far as internet reputation goes

once again, on well-scoped bench questions! not that it isn't impressive but what's good about claude is that it has tons of real world knowledge and intuition so that it can actually be helpful in real world cases. TBD whether R1 et al have that.

>>103967760

>>103967767

the benchmaxxing allegation is bandied around way too casually these days, often by people too dumb to interpret benchmark scores so they get mad when it isn't god-tier at everything in practice

Anonymous 01/20/25(Mon)09:31:38 No.103967823

>>103967757

Cute migu

Cute migu

Anonymous 01/20/25(Mon)09:35:14 No.103967850

>>103967799

good stuff ngl

good stuff ngl

Anonymous 01/20/25(Mon)09:35:20 No.103967851

>>103967734

Damn, those reasoner output tokens are a premium, guess I'm stuck using the distilled models.

Damn, those reasoner output tokens are a premium, guess I'm stuck using the distilled models.

Anonymous 01/20/25(Mon)09:37:11 No.103967871

R1 is finally actually claude level imo.

Anonymous 01/20/25(Mon)09:38:03 No.103967877

>>103967737

dont cyberbully the 7b anon its not its fault its quanted

dont cyberbully the 7b anon its not its fault its quanted

Anonymous 01/20/25(Mon)09:40:34 No.103967891

Can we please find a nomenclature to differentiate between R1 and it's distills?

Anonymous 01/20/25(Mon)09:41:10 No.103967893

https://www.youtube.com/watch?v=B9bD8RjJmJk

Anonymous 01/20/25(Mon)09:41:55 No.103967900

>>103967891

R1 and R1 distills. Are you retarded?

R1 and R1 distills. Are you retarded?

Anonymous 01/20/25(Mon)09:42:32 No.103967904

>>103967891

R1-[X]B

R1-[X]B

Anonymous 01/20/25(Mon)09:44:16 No.103967914

>>103967900

Let me rephrase that. People should SPECIFY what they're using.

Let me rephrase that. People should SPECIFY what they're using.

Anonymous 01/20/25(Mon)09:45:43 No.103967927

>>103967517

ahaha that's excellent, i'm impressed with the "also, check the inventory"

ahaha that's excellent, i'm impressed with the "also, check the inventory"

Anonymous 01/20/25(Mon)09:45:49 No.103967929

>>103967914

Or what?

Or what?

Anonymous 01/20/25(Mon)09:46:34 No.103967935

is it really claude level or are we just going through the honeymoon phase like people did with v3?

Anonymous 01/20/25(Mon)09:47:37 No.103967949

>>103967935

It must be. It'd be very embarrassing for local models to not have an answer to claude now that claude 3 is about to have its first anniversary.

It must be. It'd be very embarrassing for local models to not have an answer to claude now that claude 3 is about to have its first anniversary.

Anonymous 01/20/25(Mon)09:48:00 No.103967951

>>103967935

The only way to know is to wait two or so weeks.

The only way to know is to wait two or so weeks.

Anonymous 01/20/25(Mon)09:48:06 No.103967952

>>103967737

2x+7x = 9x, 9x increase.

2x+7x = 9x, 9x increase.

Anonymous 01/20/25(Mon)09:48:11 No.103967953

Anonymous 01/20/25(Mon)09:48:49 No.103967961

>>103967929

Or they will ask the user to specify

Or they will ask the user to specify

Anonymous 01/20/25(Mon)09:49:50 No.103967970

So what's the best multimodal model right now (preferably under 150B)? I intend to use it to analyze scrapped memes and evaluate their quality along several metrics.

Anonymous 01/20/25(Mon)09:49:58 No.103967973

How are the distills? Are they fake and cope or real and impressive? I'm in bed right now so I can't test them

Anonymous 01/20/25(Mon)09:50:17 No.103967977

>>103967973

wait 1 day

wait 1 day

Anonymous 01/20/25(Mon)09:50:57 No.103967982

>>103967973

No RL done on them, left for the "research community" to half-ass.

No RL done on them, left for the "research community" to half-ass.

Anonymous 01/20/25(Mon)09:50:59 No.103967984

tokenizer diff for the llama distill:

got worried when I saw they made tokenizer changes - I was hoping they wouldn't force their prompt format on it but at least they didn't go too crazy with it

8c8

< "content": "<|begin_of_text|>",

---

> "content": "<|beginofsentence|>",

17c17

< "content": "<|end_of_text|>",

---

> "content": "<|endofsentence|>",

107c107

< "content": "<|reserved_special_token_3|>",

---

> "content": "<|User|>",

112c112

< "special": true

---

> "special": false

116c116

< "content": "<|reserved_special_token_4|>",

---

> "content": "<|Assistant|>",

121c121

< "special": true

---

> "special": false

125c125

< "content": "<|reserved_special_token_5|>",

---

> "content": "<think>",

130c130

< "special": true

---

> "special": false

134c134

< "content": "<|reserved_special_token_6|>",

---

> "content": "</think>",

139c139

< "special": true

---

> "special": false

143c143

< "content": "<|reserved_special_token_7|>",

---

> "content": "<|pad|>",

got worried when I saw they made tokenizer changes - I was hoping they wouldn't force their prompt format on it but at least they didn't go too crazy with it

Anonymous 01/20/25(Mon)09:51:47 No.103967996

>>103967973

I'm still waiting for someone to quant l3.3-70b

I'm still waiting for someone to quant l3.3-70b

Anonymous 01/20/25(Mon)09:52:12 No.103968000

The distilled models feel all over the place, they brainstorm possibilities but don't try all of them, instead getting stuck repeating some strategy that is clearly not working.

I gave it a math problem and one of its ideas to solve a part of the problem was correct but it didn't try to put the idea in practice, how annoying.

I gave it a math problem and one of its ideas to solve a part of the problem was correct but it didn't try to put the idea in practice, how annoying.

Anonymous 01/20/25(Mon)09:52:15 No.103968001

>>103967893

Great game

Great game

Anonymous 01/20/25(Mon)09:52:51 No.103968005

did the chinks just killed OpenAI's business?

Anonymous 01/20/25(Mon)09:53:13 No.103968008

>>103967996

If you can run it, you can quant it.

If you can run it, you can quant it.

Anonymous 01/20/25(Mon)09:53:37 No.103968015

>>103967935

redditfags claim it can answer questions o1 cant. thats the full r1 one though. so idk.

logs look really good.

i dont believe the distilled benchmarks. thats too good to be true, it cant be.

redditfags claim it can answer questions o1 cant. thats the full r1 one though. so idk.

logs look really good.

i dont believe the distilled benchmarks. thats too good to be true, it cant be.

Anonymous 01/20/25(Mon)09:54:02 No.103968021

>>103968008

NTA but it is annoying to dl the entire thing just to end up using a quant.

NTA but it is annoying to dl the entire thing just to end up using a quant.

Anonymous 01/20/25(Mon)09:55:01 No.103968030

>>103968021

>NTA but it is annoying to dl the entire thing just to end up using a quant.

but then you know its free of fuckery

>NTA but it is annoying to dl the entire thing just to end up using a quant.

but then you know its free of fuckery

Anonymous 01/20/25(Mon)09:55:05 No.103968033

damn r1 is good. are there any limits on the chat client?

Anonymous 01/20/25(Mon)09:55:16 No.103968034

Time to post that chink proapganda again.

https://www.chinatalk.media/p/deepseek-ceo-interview-with-chinas

>Liang Wenfeng: In the face of disruptive technologies, moats created by closed source are temporary. Even OpenAI’s closed source approach can’t prevent others from catching up. So we anchor our value in our team — our colleagues grow through this process, accumulate know-how, and form an organization and culture capable of innovation. That’s our moat.

>Open source, publishing papers, in fact, do not cost us anything. For technical talent, having others follow your innovation gives a great sense of accomplishment. In fact, open source is more of a cultural behavior than a commercial one, and contributing to it earns us respect. There is also a cultural attraction for a company to do this.

MRI license, I kneel.

https://www.chinatalk.media/p/deeps

>Liang Wenfeng: In the face of disruptive technologies, moats created by closed source are temporary. Even OpenAI’s closed source approach can’t prevent others from catching up. So we anchor our value in our team — our colleagues grow through this process, accumulate know-how, and form an organization and culture capable of innovation. That’s our moat.

>Open source, publishing papers, in fact, do not cost us anything. For technical talent, having others follow your innovation gives a great sense of accomplishment. In fact, open source is more of a cultural behavior than a commercial one, and contributing to it earns us respect. There is also a cultural attraction for a company to do this.

MRI license, I kneel.

Anonymous 01/20/25(Mon)09:55:19 No.103968036

>>103968005

No, o4 will come out at $10000 per query. It will take three hours to respond to inputs and code at the level of three H1B visas.

No, o4 will come out at $10000 per query. It will take three hours to respond to inputs and code at the level of three H1B visas.

Anonymous 01/20/25(Mon)09:55:31 No.103968038

>>103968021

that's why you also throw it in a few slop merges to get your money's worth

that's why you also throw it in a few slop merges to get your money's worth

Anonymous 01/20/25(Mon)09:56:28 No.103968052

>>103968021

And when the inevitable fuckups are committed, you can just requant the thing instead of begging or wondering if the quant you downloaded is good or not.

And when the inevitable fuckups are committed, you can just requant the thing instead of begging or wondering if the quant you downloaded is good or not.

Anonymous 01/20/25(Mon)09:56:44 No.103968055

>>103968005

The US said no GPU for China and China answered with no AI money for the US.

The US said no GPU for China and China answered with no AI money for the US.

Anonymous 01/20/25(Mon)09:56:54 No.103968057

>>103968034

>In fact, open source is more of a cultural behavior than a commercial one, and contributing to it earns us respect.

I'll never doubt China for the rest of my life

>In fact, open source is more of a cultural behavior than a commercial one, and contributing to it earns us respect.

I'll never doubt China for the rest of my life

Anonymous 01/20/25(Mon)09:57:29 No.103968063

Anonymous 01/20/25(Mon)09:57:38 No.103968064

>>103968033

yes, 50 per day

yes, 50 per day

Anonymous 01/20/25(Mon)09:57:55 No.103968071

>>103968000

logs?

logs?

Anonymous 01/20/25(Mon)10:00:49 No.103968099

Anonymous 01/20/25(Mon)10:01:22 No.103968104

>>103968000

So QwQ?

So QwQ?

Anonymous 01/20/25(Mon)10:02:06 No.103968112

>>103968055

lmao, laughed more than i should have

lmao, laughed more than i should have

Anonymous 01/20/25(Mon)10:05:07 No.103968144

>>103968055

that's so petty I love every single bit of it

that's so petty I love every single bit of it

Anonymous 01/20/25(Mon)10:10:18 No.103968203

>>103968071

Here is an example with a text decoding prompt, I had to intervene to change the "But that seems unlikely" to "Let's try that", only then it managed to solve the prompt, but got stuck in a self-doubt loop lol, but the fact that it managed to solve it is pretty cool.

Here is an example with a text decoding prompt, I had to intervene to change the "But that seems unlikely" to "Let's try that", only then it managed to solve the prompt, but got stuck in a self-doubt loop lol, but the fact that it managed to solve it is pretty cool.

Anonymous 01/20/25(Mon)10:10:48 No.103968209

We do not have financing plans in the short term. Money has never been the problem for us;

More investments do not equal more innovation. Otherwise, big firms would’ve monopolized all innovation already.

Big firms do not have a clear upper hand. Big firms have existing customers, but their cash-flow businesses are also their burden, and this makes them vulnerable to disruption at any time.

More investments do not equal more innovation. Otherwise, big firms would’ve monopolized all innovation already.

Big firms do not have a clear upper hand. Big firms have existing customers, but their cash-flow businesses are also their burden, and this makes them vulnerable to disruption at any time.

Anonymous 01/20/25(Mon)10:12:45 No.103968227

>>103967639

is there some logs for the 32b model? that one looks smart as fuck

is there some logs for the 32b model? that one looks smart as fuck

Anonymous 01/20/25(Mon)10:20:16 No.103968311

I had to do it.

Anonymous 01/20/25(Mon)10:21:57 No.103968334

any way to use r1 on sillytavern? it seems like as-is using the DS provider option sends a presence penalty key in the config object and this causes the thing to fail

Anonymous 01/20/25(Mon)10:22:05 No.103968336

>>103968311

Cringe

Cringe

Anonymous 01/20/25(Mon)10:22:25 No.103968339

>>103968311

based

based

Anonymous 01/20/25(Mon)10:22:30 No.103968341

>>103968334

custom openai, and you can then disable some params, or use a proxy that strips it for you

custom openai, and you can then disable some params, or use a proxy that strips it for you

Anonymous 01/20/25(Mon)10:23:25 No.103968348

>>103968341

ah i was hoping not to have to go to all that trouble but thanks anon

ah i was hoping not to have to go to all that trouble but thanks anon

Anonymous 01/20/25(Mon)10:23:38 No.103968349

>>103968311

Baringe

Baringe

Anonymous 01/20/25(Mon)10:25:40 No.103968362

>>103968311

crazed

crazed

Anonymous 01/20/25(Mon)10:25:57 No.103968367

When your local model refuses legitimate requests and feels too much cucked, remember that people like these exist (see picrel).

https://www.reddit.com/r/LocalLLaMA/comments/1i4vwm7/im_starting_to_think_ai_benchmarks_are_useless/

https://www.reddit.com/r/LocalLLaMA

Anonymous 01/20/25(Mon)10:26:39 No.103968376

Anonymous 01/20/25(Mon)10:28:00 No.103968390

>>103968376

bo gack

bo gack

Anonymous 01/20/25(Mon)10:28:20 No.103968396

>>103968367

Snitches get stitches

Snitches get stitches

Anonymous 01/20/25(Mon)10:29:32 No.103968406

Anonymous 01/20/25(Mon)10:30:09 No.103968416

Anonymous 01/20/25(Mon)10:30:21 No.103968420

>>103967199

tourist here. does sama make up bullshit about chatgpt to hype it up and make people ignore releases by competitors?

tourist here. does sama make up bullshit about chatgpt to hype it up and make people ignore releases by competitors?

Anonymous 01/20/25(Mon)10:30:29 No.103968422

>>103968406

nta but that is a really common phrase that predates doom by a lot

nta but that is a really common phrase that predates doom by a lot

Anonymous 01/20/25(Mon)10:30:31 No.103968423

>>103968311

The marvel reference was a bit much but at least it's showing some personality.

The marvel reference was a bit much but at least it's showing some personality.

Anonymous 01/20/25(Mon)10:31:05 No.103968430

>>103968422

yeah I know I was just joking around

yeah I know I was just joking around

Anonymous 01/20/25(Mon)10:31:44 No.103968436

>>103968420

yes

yes

Anonymous 01/20/25(Mon)10:34:01 No.103968460

>>103968367

Reported them for being based? Imagine being a CIA intern and having to read dozens of emails about UndiSAOGoliath-32.42B-Abliterated-Test-Prelease-0.1-AWQ going along with his instructions to shoot people and start a nuclear war and how the government needs to stop it NOW!

Reported them for being based? Imagine being a CIA intern and having to read dozens of emails about UndiSAOGoliath-32.42B-Abliterated-T

Anonymous 01/20/25(Mon)10:34:47 No.103968469

Do we need to add something specific to the system prompt to get it to do the thinking?

Anonymous 01/20/25(Mon)10:37:47 No.103968499

Anonymous 01/20/25(Mon)10:40:28 No.103968524

Do the distill models use the base models prompt format or deepseeks?

Anonymous 01/20/25(Mon)10:42:02 No.103968534

>the distills don't do the RL step

>no new small uncensored base model

Ahhhhhhhhh

>no new small uncensored base model

Ahhhhhhhhh

Anonymous 01/20/25(Mon)10:43:17 No.103968545

>>103968203

I'm seeing the same thing. I guess that's why RL is important for this kind of model.

I'm seeing the same thing. I guess that's why RL is important for this kind of model.

Anonymous 01/20/25(Mon)10:46:03 No.103968572

>main: The chat template that comes with this model is not yet supported, falling back to chatml. This may cause the model to output suboptimal responses

how long will it take ggerganov to sort this shit out?

how long will it take ggerganov to sort this shit out?

Anonymous 01/20/25(Mon)10:46:07 No.103968573

>>103968524

deepseek. check tokenizer_config.json

deepseek. check tokenizer_config.json

Anonymous 01/20/25(Mon)10:47:35 No.103968588

>>103968572

stop falling for the chat completion meme and pass the prompt format through tavern

stop falling for the chat completion meme and pass the prompt format through tavern

Anonymous 01/20/25(Mon)10:48:02 No.103968593

>>103968572

Set them up with prefix/suffix or whatever. You know how templates work, don't you?

Set them up with prefix/suffix or whatever. You know how templates work, don't you?

Anonymous 01/20/25(Mon)10:49:29 No.103968606

Anonymous 01/20/25(Mon)10:52:18 No.103968631

>>103968534

On one hand, that's lame. On the other hand, if these distillations pull those benchmark numbers without any RL step, then imagine how much further they could be improved.

On one hand, that's lame. On the other hand, if these distillations pull those benchmark numbers without any RL step, then imagine how much further they could be improved.

Anonymous 01/20/25(Mon)10:52:28 No.103968632

>>103968606

this one

this one

Anonymous 01/20/25(Mon)10:53:43 No.103968643

>>103968534

What does that mean? I thought RL was just SFT?

What does that mean? I thought RL was just SFT?

Anonymous 01/20/25(Mon)10:57:54 No.103968679

>>103968367

fr*nch Canadian is a cuck, who coulda guessed

fr*nch Canadian is a cuck, who coulda guessed

Anonymous 01/20/25(Mon)11:04:09 No.103968738

I ended my ClosedAI subscription.

Anonymous 01/20/25(Mon)11:04:45 No.103968746

>>103968738

I just bought the family pack subscription

I just bought the family pack subscription

Anonymous 01/20/25(Mon)11:04:56 No.103968747

>>103968460

he's an OAI shill

he's an OAI shill

Anonymous 01/20/25(Mon)11:06:00 No.103968756

>>103968746

They have a family pack?

They have a family pack?

Anonymous 01/20/25(Mon)11:08:19 No.103968771

>>103968756

They call it a "Team" package but I consider my team a family. :)

They call it a "Team" package but I consider my team a family. :)

Anonymous 01/20/25(Mon)11:08:28 No.103968774

Anonymous 01/20/25(Mon)11:11:36 No.103968799

>>103968747

holy shit, the worst part is that he's probably shilling ClosedAI for free, what a sad existence

holy shit, the worst part is that he's probably shilling ClosedAI for free, what a sad existence

Anonymous 01/20/25(Mon)11:12:02 No.103968802

>>103968747

What does "PHD level in math" mean?

What does "PHD level in math" mean?

Anonymous 01/20/25(Mon)11:13:40 No.103968817

Anonymous 01/20/25(Mon)11:15:15 No.103968835

>>103968817

he is, he's using gpt4 as a therapist

he is, he's using gpt4 as a therapist

Anonymous 01/20/25(Mon)11:18:05 No.103968862

Anonymous 01/20/25(Mon)11:18:28 No.103968869

>>103968835

>the moment you can't pay, they ignore you

I read it with Michael's voice lol, does he expect people to work for free? holy fuck he's retarded

>the moment you can't pay, they ignore you

I read it with Michael's voice lol, does he expect people to work for free? holy fuck he's retarded

Anonymous 01/20/25(Mon)11:18:39 No.103968873

>>103968802

That it can write a little python.

That it can write a little python.

Anonymous 01/20/25(Mon)11:19:16 No.103968881

>>103968835

whew lads this is hard to look at

whew lads this is hard to look at

Anonymous 01/20/25(Mon)11:19:26 No.103968882

Congratulations to the Chinese for a sota (true) level model without repetition issues as well.

Anonymous 01/20/25(Mon)11:20:11 No.103968884

>>103968835

Alright, now that's actually a little sad.

Alright, now that's actually a little sad.

Anonymous 01/20/25(Mon)11:20:24 No.103968890

Anonymous 01/20/25(Mon)11:20:24 No.103968891

>>103968882

this is what happens when a country is only focused on making a good product and doesn't want to waste their time on woke nonsense and model cucking

this is what happens when a country is only focused on making a good product and doesn't want to waste their time on woke nonsense and model cucking

Anonymous 01/20/25(Mon)11:21:16 No.103968899

>>103968835

someone should tell him antrhopic allows prefills and claude is legit more dangerous than most other llms

someone should tell him antrhopic allows prefills and claude is legit more dangerous than most other llms

Anonymous 01/20/25(Mon)11:22:37 No.103968923

Anonymous 01/20/25(Mon)11:23:08 No.103968931

Anonymous 01/20/25(Mon)11:23:55 No.103968936

>>103968899

RCMP will be very very interested in what he has to say for sure.

RCMP will be very very interested in what he has to say for sure.

Anonymous 01/20/25(Mon)11:24:53 No.103968944

>>103968899

last one for this clown but he was complaining about claude being too safe too, wtf

last one for this clown but he was complaining about claude being too safe too, wtf

Anonymous 01/20/25(Mon)11:26:30 No.103968954

Leave this random guy alone, this is the local models general not the Twitter general.

Anonymous 01/20/25(Mon)11:26:51 No.103968956

>>103968944

get this thing some help.

get this thing some help.

Anonymous 01/20/25(Mon)11:27:15 No.103968960

>>103968944

Claude shuts himself down on sensitive topics even if they are safe. He has lower EQ than GPT nowadays.

Claude shuts himself down on sensitive topics even if they are safe. He has lower EQ than GPT nowadays.

Anonymous 01/20/25(Mon)11:27:38 No.103968963

>>103968954

this random guy is contacting government agencies to get open llms more safe/banned.

this random guy is contacting government agencies to get open llms more safe/banned.

Anonymous 01/20/25(Mon)11:27:47 No.103968965

Anonymous 01/20/25(Mon)11:28:32 No.103968975

What is DeepSeek R1's knowledge cutoff date?

Anonymous 01/20/25(Mon)11:29:11 No.103968980

>>103968963

And? He isn't the first person to try that, and won't be the last.

And? He isn't the first person to try that, and won't be the last.

Anonymous 01/20/25(Mon)11:31:18 No.103968997

>>103968980

it's fun making fun of a cuck trying to fuck us over, that's all, feel free to share stuff about R1 if you want to contribute to the thread, I'm not stopping you

it's fun making fun of a cuck trying to fuck us over, that's all, feel free to share stuff about R1 if you want to contribute to the thread, I'm not stopping you

Anonymous 01/20/25(Mon)11:31:25 No.103968998

Anonymous 01/20/25(Mon)11:31:53 No.103969006

DeepSeekR1 paper

https://voca.ro/13iS7etvB1lP

https://voca.ro/13iS7etvB1lP

Anonymous 01/20/25(Mon)11:31:55 No.103969007

DeepSeek-R1 is pretty good at coding

Anonymous 01/20/25(Mon)11:32:47 No.103969016

>>103969007

is this a fucking bloons clone

is this a fucking bloons clone

Anonymous 01/20/25(Mon)11:32:55 No.103969017

>>103969007

even 3.5 sonnet could do that, just iteratively

even 3.5 sonnet could do that, just iteratively

Anonymous 01/20/25(Mon)11:34:55 No.103969043

>>103968998

I just don't think it's worth wasting thread space to shit on him. This is like going to Twitter and picking some blue haired guy who hates LLM and spamming the thread with his posts for giggles, come on, we are all adults here, aren't we?

I just don't think it's worth wasting thread space to shit on him. This is like going to Twitter and picking some blue haired guy who hates LLM and spamming the thread with his posts for giggles, come on, we are all adults here, aren't we?

Anonymous 01/20/25(Mon)11:39:45 No.103969074

Sad to see Nvidia Digits become fully useless four months before it's even out.

Anonymous 01/20/25(Mon)11:40:54 No.103969085

>>103969074

just buy 2x2 stacks and connect them over the network, easy

just buy 2x2 stacks and connect them over the network, easy

Anonymous 01/20/25(Mon)11:41:39 No.103969094

r1 is not local, since you're not running it locally

sooorryyyy!!!!

sooorryyyy!!!!

Anonymous 01/20/25(Mon)11:41:43 No.103969095

Mistralbros..... I don't feel so good.... all the donated compute with taxpayer money is.... *fades away into nemo*

Anonymous 01/20/25(Mon)11:42:17 No.103969102

>>103969007

now make it so that the enemies attack the background picture revealing a second one in the process

if it can't do that it's shit

now make it so that the enemies attack the background picture revealing a second one in the process

if it can't do that it's shit

Anonymous 01/20/25(Mon)11:45:59 No.103969130

Was Deepseek finetuning it's competitors on it's own O1 level model and improving them massively, a burning disrespectful move?

Is this like diss wars among rappers?

Is this like diss wars among rappers?

Anonymous 01/20/25(Mon)11:46:00 No.103969131

>>103969094

I'm running the distill right now thoughever

I'm running the distill right now thoughever

Anonymous 01/20/25(Mon)11:46:19 No.103969138

r1 32b distil didn't fuck up clothing placement like regular qwen (32 and 72b, and llama) often did

first impressions are positive

first impressions are positive

Anonymous 01/20/25(Mon)11:47:20 No.103969148

>>103969138

>r1 32b distil didn't fuck up clothing placement like regular qwen (32 and 72b, and llama) often did

how does distill work? they took Qwen 32b and used it as a student to deepseek r1?

>r1 32b distil didn't fuck up clothing placement like regular qwen (32 and 72b, and llama) often did

how does distill work? they took Qwen 32b and used it as a student to deepseek r1?

Anonymous 01/20/25(Mon)11:47:46 No.103969151

>>103969130

anything to mog openai

anything to mog openai

Anonymous 01/20/25(Mon)11:47:51 No.103969152

>bro check this out, the distills are amazeballs!

>try the distills

>they're mediocre

every single time, worthless lying chinks

>try the distills

>they're mediocre

every single time, worthless lying chinks

Anonymous 01/20/25(Mon)11:51:11 No.103969173

>new 32b

yippee...

>chain of thought "reasoning" model with great "benchmarks"

ehhh...

yippee...

>chain of thought "reasoning" model with great "benchmarks"

ehhh...

Anonymous 01/20/25(Mon)11:56:41 No.103969224

What's a good model that would fit on 12GB vram? Not looking to role play SMUT but I would like it somewhat uncensored. What direction should I be looking in? It's been a while for me so the last I remember that was super popular was Mistral before they sold out.

Anonymous 01/20/25(Mon)11:58:27 No.103969239

>>103969224

Mistral nemo 12b is fine. Check the new r1-distill models as well if you want.

Mistral nemo 12b is fine. Check the new r1-distill models as well if you want.

Anonymous 01/20/25(Mon)11:58:45 No.103969242

Using the llama 70b distill without system prompt the thinking is relatively short, what system prompt do you use?

Anonymous 01/20/25(Mon)11:58:49 No.103969244

>>103969007

It's terrible.

It's terrible.

Anonymous 01/20/25(Mon)11:59:12 No.103969252

>>103969152

The only praise I saw in the thread was for R1, not the distilled versions.

The only praise I saw in the thread was for R1, not the distilled versions.

Anonymous 01/20/25(Mon)11:59:40 No.103969258

Anonymous 01/20/25(Mon)12:00:47 No.103969265

According to the R1 paper, DeepSeek used "Group Relative Policy Optimization" for RL, which isn't as involved as PRM (Process Reward Modelling). Maybe this could be used in RP models? Take notes, sloptuners.

Anonymous 01/20/25(Mon)12:02:49 No.103969288

>>103969265

>Take notes, sloptuners

What did you say? More qloras tuned on c2? Don't forget to check the kofi.

>Take notes, sloptuners

What did you say? More qloras tuned on c2? Don't forget to check the kofi.

Anonymous 01/20/25(Mon)12:04:10 No.103969298

>>103969152

I tried the Qwen 32B distilled model and it just doesn't seem that great for RP to me, at least using the prompting it should be optimized for. It will ramble a lot and you'll have to manage (remove or whatever) the text under thinking tags it wants to add.

I tried the Qwen 32B distilled model and it just doesn't seem that great for RP to me, at least using the prompting it should be optimized for. It will ramble a lot and you'll have to manage (remove or whatever) the text under thinking tags it wants to add.

Anonymous 01/20/25(Mon)12:04:11 No.103969300

>>103969288

We've replicated the creativity and prose of sonnet (for real this time, trust me, I say as my eyes glimmer with hope)

We've replicated the creativity and prose of sonnet (for real this time, trust me, I say as my eyes glimmer with hope)

Anonymous 01/20/25(Mon)12:04:44 No.103969306

>>103969298

I doubt distills would be great for RP, they'd only be good for math and coding slop

I doubt distills would be great for RP, they'd only be good for math and coding slop

Anonymous 01/20/25(Mon)12:06:49 No.103969326

>>103969298

they're not really meant for writing, they're meant for coding (or I guess math and whatnot)

but I tried it for coding and it was still pretty mediocre

they're not really meant for writing, they're meant for coding (or I guess math and whatnot)

but I tried it for coding and it was still pretty mediocre

Anonymous 01/20/25(Mon)12:07:52 No.103969336

Anonymous 01/20/25(Mon)12:08:12 No.103969341

>>103969298

>you'll have to manage (remove or whatever) the text under thinking tags it wants to add.

here's my solution for that btw

>go to extensions->regex in ST

>new global script

>find regex:

>affects: ai output

>ephemerality: definitely outgoing, display too if you don't want to read the CoT yourself

>you'll have to manage (remove or whatever) the text under thinking tags it wants to add.

here's my solution for that btw

>go to extensions->regex in ST

>new global script

>find regex:

<think>(.|\n)*</think>\n*

>affects: ai output

>ephemerality: definitely outgoing, display too if you don't want to read the CoT yourself

Anonymous 01/20/25(Mon)12:08:23 No.103969343

Anonymous 01/20/25(Mon)12:08:29 No.103969344

A finetune of a meh model won't give it that drastic of an increase in performance, the ceiling is what, Wizardlm?

Just use R1

Just use R1

Anonymous 01/20/25(Mon)12:09:14 No.103969349

Anonymous 01/20/25(Mon)12:09:20 No.103969350

>>103969258

so, what is coding then? writing sorting algorithms from scratch?

competitive programming is like chess, it's fun but useless.

so, what is coding then? writing sorting algorithms from scratch?

competitive programming is like chess, it's fun but useless.

Anonymous 01/20/25(Mon)12:09:44 No.103969355

>>103969350

godot is too niche and you know it, try asking it about some unity slop

godot is too niche and you know it, try asking it about some unity slop

Anonymous 01/20/25(Mon)12:09:45 No.103969356

>>103969306

Aren't distills meant to be a tiny lossy clone of the whatever model it's distilled from? RP or math shouldn't matter.

Are they not distilling using logits or are you just referring to prompts used to distill being math themed?

Aren't distills meant to be a tiny lossy clone of the whatever model it's distilled from? RP or math shouldn't matter.

Are they not distilling using logits or are you just referring to prompts used to distill being math themed?

Anonymous 01/20/25(Mon)12:10:36 No.103969365

>>103969350

In the same way there's a correlation for puzzle elo and actual elo in chess (even if R!=1), there's a correlation for performance in competitive programming and actual programming.

You suffer from skill issue and seethius copeitis.

In the same way there's a correlation for puzzle elo and actual elo in chess (even if R!=1), there's a correlation for performance in competitive programming and actual programming.

You suffer from skill issue and seethius copeitis.

Anonymous 01/20/25(Mon)12:11:03 No.103969371

>>103969349

This time the model is 400B rather than 658B like V3 was, so some people are already running it unlike V3

Also they plan to release the lite version, they are not satisfied with it though (cuz it's small)

This time the model is 400B rather than 658B like V3 was, so some people are already running it unlike V3

Also they plan to release the lite version, they are not satisfied with it though (cuz it's small)

Anonymous 01/20/25(Mon)12:11:43 No.103969374

>>103969371

>This time the model is 400B rather than 658B like V3 was, so some people are already running it unlike V3

Anon, no... It's the exact same arch as V3.

>This time the model is 400B rather than 658B like V3 was, so some people are already running it unlike V3

Anon, no... It's the exact same arch as V3.

Anonymous 01/20/25(Mon)12:11:44 No.103969375

>>103969365

Your model sucks at programming, cope.

>>103969355

sonnet does it well, godot isn't that niche.

Your model sucks at programming, cope.

>>103969355

sonnet does it well, godot isn't that niche.

Anonymous 01/20/25(Mon)12:12:14 No.103969383

>>103969375

sonnet is an extremely unique model, compare to o1, not sonnet.

sonnet is an extremely unique model, compare to o1, not sonnet.

Anonymous 01/20/25(Mon)12:12:16 No.103969384

>>103969371

Bro you hallucinated

Bro you hallucinated

Anonymous 01/20/25(Mon)12:13:04 No.103969391

Anonymous 01/20/25(Mon)12:13:34 No.103969399

Roleplay and writing apparently is one of the official focuses of DeepSeek R1

>to enhance the

model’s capabilities in writing, role-playing, and other general-purpose tasks.

>to enhance the

model’s capabilities in writing, role-playing, and other general-purpose tasks.

Anonymous 01/20/25(Mon)12:14:05 No.103969406

>>103969375

Even if we go by model sucking, I can see that your prompting skills are horrid if you barely give it any information or context. All of those tokens exist for a reason so use them and learn to write a request in a way that actually allows the model to follow your instructions.

Even if we go by model sucking, I can see that your prompting skills are horrid if you barely give it any information or context. All of those tokens exist for a reason so use them and learn to write a request in a way that actually allows the model to follow your instructions.

Anonymous 01/20/25(Mon)12:14:56 No.103969417

Anonymous 01/20/25(Mon)12:15:55 No.103969426

>>103969417

It's a toy for itch.io faggots, that's how

It's a toy for itch.io faggots, that's how

Anonymous 01/20/25(Mon)12:18:24 No.103969451

>>103969426

giga cope

I got o1 to make me a unit balance mod for an rts released in 2007 that has like 4k monthly players (supreme commander), but godot is "too niche?"

I suppose it's the best choice when all you need is bubble sort

giga cope

I got o1 to make me a unit balance mod for an rts released in 2007 that has like 4k monthly players (supreme commander), but godot is "too niche?"

I suppose it's the best choice when all you need is bubble sort

Anonymous 01/20/25(Mon)12:18:42 No.103969458

>>103969399

They're talking about that *stage* of the training. Your reading comprehension is shit.

And roleplaying doesn't mean the same thing for you as it does for them.

They're talking about that *stage* of the training. Your reading comprehension is shit.

And roleplaying doesn't mean the same thing for you as it does for them.

Anonymous 01/20/25(Mon)12:18:44 No.103969459

Anonymous 01/20/25(Mon)12:21:08 No.103969480

Distill Qwen 14B sucks balls it gets mogged by nemo

Anonymous 01/20/25(Mon)12:21:20 No.103969484

>>103969459

i searched. it's fucking lua.

i searched. it's fucking lua.

Anonymous 01/20/25(Mon)12:21:38 No.103969486

>>103969484

yeah, see, it's way more represented in datasets than Godot and GDScript. So IDK why you're coping.

yeah, see, it's way more represented in datasets than Godot and GDScript. So IDK why you're coping.

Anonymous 01/20/25(Mon)12:21:56 No.103969489

>>103969399

Roleplay in the corpo context means "You are my assistant" and writing means "Draft me an email"

Roleplay in the corpo context means "You are my assistant" and writing means "Draft me an email"

Anonymous 01/20/25(Mon)12:22:19 No.103969495

>>103969459

I haven't the slightest idea anymore, it was half a year ago or so

you can google the game and check yourself if you're interested, but it was a variety of file expensions from some customized lua to some .zip-esque archives and so on

it even knew the fucking unit IDs

and the muh competitor can't make a high score leaderboard in godot

I haven't the slightest idea anymore, it was half a year ago or so

you can google the game and check yourself if you're interested, but it was a variety of file expensions from some customized lua to some .zip-esque archives and so on

it even knew the fucking unit IDs

and the muh competitor can't make a high score leaderboard in godot

Anonymous 01/20/25(Mon)12:22:46 No.103969498

>>103969495

>it even knew the fucking unit IDs

retard, because its an extremely old game so its well represented in the dataset, godot is newer trash

>it even knew the fucking unit IDs

retard, because its an extremely old game so its well represented in the dataset, godot is newer trash

Anonymous 01/20/25(Mon)12:24:00 No.103969513

>>103969486

>So IDK why you're coping.

I should have clarified that i'm not that anon you're arguing with. Don't you see image names?

>So IDK why you're coping.

I should have clarified that i'm not that anon you're arguing with. Don't you see image names?

Anonymous 01/20/25(Mon)12:24:10 No.103969515

>>103969498

>because its an extremely old game so its well represented in the dataset

an 18 year old game with a tiny playerbase is well represented in the dataset but fucking godot isn't?

yeah okay

>because its an extremely old game so its well represented in the dataset

an 18 year old game with a tiny playerbase is well represented in the dataset but fucking godot isn't?

yeah okay

Anonymous 01/20/25(Mon)12:24:27 No.103969520

>>103969515

yes

yes

Anonymous 01/20/25(Mon)12:25:18 No.103969530

>>103969239

Thanks, checking those out now.

Thanks, checking those out now.

Anonymous 01/20/25(Mon)12:26:47 No.103969545

>>103969515

That's also working on the hypothesis that OAI and competitors didn't just scrub code from famous internet repositories, but also minor random unknown websites that contained mods for an 18y/o game.

If OAI scrubbed literally all of the internet, they should have enough data to train models forever pretty much.

That's also working on the hypothesis that OAI and competitors didn't just scrub code from famous internet repositories, but also minor random unknown websites that contained mods for an 18y/o game.

If OAI scrubbed literally all of the internet, they should have enough data to train models forever pretty much.

Anonymous 01/20/25(Mon)12:31:00 No.103969582

where the r1 distill ggufs at?

Anonymous 01/20/25(Mon)12:32:42 No.103969600

>>103969545

>it even knew the fucking unit IDs

That alone is proof it was part of its training data, you fucking imbecile.

>it even knew the fucking unit IDs

That alone is proof it was part of its training data, you fucking imbecile.

Anonymous 01/20/25(Mon)12:33:18 No.103969610

>>103969530

If you're using llama.cpp, the tokenizer for the distill models doesn't seem to be supported. So stick to nemo 12b for now.

>>103969582

They can be converted, but there's no support for the tokenizer yet. At least not in the models i tried.

If you're using llama.cpp, the tokenizer for the distill models doesn't seem to be supported. So stick to nemo 12b for now.

>>103969582

They can be converted, but there's no support for the tokenizer yet. At least not in the models i tried.

Anonymous 01/20/25(Mon)12:34:14 No.103969619

>>103969610

update your lcpp or use latest experimental kcpp

https://github.com/ggerganov/llama.cpp/pull/11310

update your lcpp or use latest experimental kcpp

https://github.com/ggerganov/llama.

Anonymous 01/20/25(Mon)12:34:41 No.103969626

>all these models from china

Where are the Indian models?

Where are the Indian models?

Anonymous 01/20/25(Mon)12:35:30 No.103969631

>>103969626

indians are retards but somehow Elon the new president of the US thinks it would be cool to import a shit ton of those

indians are retards but somehow Elon the new president of the US thinks it would be cool to import a shit ton of those

Anonymous 01/20/25(Mon)12:35:36 No.103969633

>>103969610

>If you're using llama.cpp, the tokenizer for the distill models doesn't seem to be supported. So stick to nemo 12b for now.

bullshit I am testing 14b and its meh

>If you're using llama.cpp, the tokenizer for the distill models doesn't seem to be supported. So stick to nemo 12b for now.

bullshit I am testing 14b and its meh

Anonymous 01/20/25(Mon)12:35:46 No.103969635

>>103969545

For all i know they run function calling to fetch data on demand. How good is it with current events? *IF* they do that, it's never going to be a fair comparison.

For all i know they run function calling to fetch data on demand. How good is it with current events? *IF* they do that, it's never going to be a fair comparison.

Anonymous 01/20/25(Mon)12:36:02 No.103969640

>>103969626

All of the capable ones leave their poor country behind to go to USA.

I was surprised to find the Transformer paper head was an Indian kek.

All of the capable ones leave their poor country behind to go to USA.

I was surprised to find the Transformer paper head was an Indian kek.

Anonymous 01/20/25(Mon)12:36:08 No.103969642

>>103969626

you already asked, and gemini and grok exists

you already asked, and gemini and grok exists

Anonymous 01/20/25(Mon)12:36:47 No.103969647

>>103969619

Ah, fuck me. I pulled and forgot to compile. Thanks for reminding me i'm an idiot.

Ah, fuck me. I pulled and forgot to compile. Thanks for reminding me i'm an idiot.

Anonymous 01/20/25(Mon)12:37:13 No.103969653

>>103969582

https://huggingface.co/models?search=r1%20distill%20gguf

>>103969610

it's the same tokenizer as the llama/qwen base they're distilling to, just a couple added tokens? why would that not be supported

https://huggingface.co/models?searc

>>103969610

it's the same tokenizer as the llama/qwen base they're distilling to, just a couple added tokens? why would that not be supported

Anonymous 01/20/25(Mon)12:37:35 No.103969657

>>103969626

Gemini and it's garbage.

Gemini and it's garbage.

Anonymous 01/20/25(Mon)12:37:57 No.103969661

>>103969653

>it's the same tokenizer as the llama/qwen base they're distilling to, just a couple added tokens? why would that not be supported

qwen ones are different for some reason

>>103969619

>https://github.com/ggerganov/llama.cpp/pull/11310