/lmg/ - Local Models General

Anonymous 01/20/25(Mon)15:53:24 | 578 comments | 65 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103967199 & >>103959928

►News

>(01/20) DeepSeek releases R1, R1 Zero, & finetuned Qwen and Llama models: https://hf.co/deepseek-ai/DeepSeek-R1-Zero

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72B

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/outetts-03-6786b1ebc7aeb757bc17a2fa

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b-instruct

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103967199 & >>103959928

►News

>(01/20) DeepSeek releases R1, R1 Zero, & finetuned Qwen and Llama models: https://hf.co/deepseek-ai/DeepSeek-

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/ou

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/20/25(Mon)15:53:39 No.103971526

►Recent Highlights from the Previous Thread: >>103967199

--DeepSeek-R1 and coding capabilities discussion:

>103969007 >103969017 >103969244 >103969350 >103969451 >103969484 >103969486 >103969513 >103969495 >103969515 >103969545 >103969635 >103969383 >103969406 >103969343

--R1 model capabilities and temperature adjustment discussion:

>103967680 >103967689 >103967721 >103967728 >103967690 >103967702 >103967706 >103967715 >103967726 >103967703 >103967709 >103967711

--Deepseek model pricing and API cost discussion:

>103967238 >103967251 >103967442 >103967462 >103967725 >103967734 >103967851 >103967952 >103970000 >103970116 >103970484

--Distilled models' limitations in problem-solving and potential of RL:

>103968000 >103968203 >103968545

--Impressive LLM-generated narrative continuation:

>103967517 >103967537 >103967927

--Generating non-reasoning data with DeepSeek-V3 pipeline:

>103967221

--DeepSeek R1's roleplay and writing capabilities:

>103969399 >103969489

--Anon suggests using Group Relative Policy Optimization in RP models, referencing DeepSeek's approach:

>103969265

--LLaMA tokenizer changes and their implications:

>103967984

--Impressive 32b model performance on coding benchmarks:

>103967639 >103967642 >103967654 >103967724 >103967747 >103967767 >103967784 >103967812 >103967808 >103968227

--R1's Pythagorean theorem explanation underwhelms anons:

>103970387 >103970421 >103970488 >103970517 >103971333

--Discussion about the legitimacy and quality of distills:

>103967973 >103967982 >103968030 >103968052

--Distilled models' performance and limitations discussed:

>103969152 >103969298 >103969356 >103969728 >103970097 >103969326 >103969341

--Anon discusses finetuning a model and its potential performance:

>103969344 >103969371 >103969374 >103969391

--Miku (free space):

>103967591 >103967757

►Recent Highlight Posts from the Previous Thread: >>103967200

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--DeepSeek-R1 and coding capabilities discussion:

>103969007 >103969017 >103969244 >103969350 >103969451 >103969484 >103969486 >103969513 >103969495 >103969515 >103969545 >103969635 >103969383 >103969406 >103969343

--R1 model capabilities and temperature adjustment discussion:

>103967680 >103967689 >103967721 >103967728 >103967690 >103967702 >103967706 >103967715 >103967726 >103967703 >103967709 >103967711

--Deepseek model pricing and API cost discussion:

>103967238 >103967251 >103967442 >103967462 >103967725 >103967734 >103967851 >103967952 >103970000 >103970116 >103970484

--Distilled models' limitations in problem-solving and potential of RL:

>103968000 >103968203 >103968545

--Impressive LLM-generated narrative continuation:

>103967517 >103967537 >103967927

--Generating non-reasoning data with DeepSeek-V3 pipeline:

>103967221

--DeepSeek R1's roleplay and writing capabilities:

>103969399 >103969489

--Anon suggests using Group Relative Policy Optimization in RP models, referencing DeepSeek's approach:

>103969265

--LLaMA tokenizer changes and their implications:

>103967984

--Impressive 32b model performance on coding benchmarks:

>103967639 >103967642 >103967654 >103967724 >103967747 >103967767 >103967784 >103967812 >103967808 >103968227

--R1's Pythagorean theorem explanation underwhelms anons:

>103970387 >103970421 >103970488 >103970517 >103971333

--Discussion about the legitimacy and quality of distills:

>103967973 >103967982 >103968030 >103968052

--Distilled models' performance and limitations discussed:

>103969152 >103969298 >103969356 >103969728 >103970097 >103969326 >103969341

--Anon discusses finetuning a model and its potential performance:

>103969344 >103969371 >103969374 >103969391

--Miku (free space):

>103967591 >103967757

►Recent Highlight Posts from the Previous Thread: >>103967200

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/20/25(Mon)15:55:52 No.103971551

Mikulove

Anonymous 01/20/25(Mon)15:56:06 No.103971558

deepseek love

Anonymous 01/20/25(Mon)15:56:08 No.103971559

>>103971509

>I don't ever do 1-on-1 roleplays with a character card, I almost always format the card as a general roleplay scenario with a couple of define characters aside from myself. How do you guys go about formatting it? As in, how many tokens to do dedicate to a character's name/appearance/personality/background, how much do you dedicate to the overall world/setting/scenario, do you work these into the character card or do you relegate them to the world book? I've also played around with laying it out as a group chat, with a dedicated 'narrator' character who is filled in about the setting/world/background characters/broad strokes of the roleplay and a character card for each of the major characters in the roleplay.

Just curious as to what people have the most success with. I find myself spending a lot of time setting things up only to be frustrated as I've been over-engineering only to get an unsatisfactory result.

>I don't ever do 1-on-1 roleplays with a character card, I almost always format the card as a general roleplay scenario with a couple of define characters aside from myself. How do you guys go about formatting it? As in, how many tokens to do dedicate to a character's name/appearance/personality/backgro

Just curious as to what people have the most success with. I find myself spending a lot of time setting things up only to be frustrated as I've been over-engineering only to get an unsatisfactory result.

Anonymous 01/20/25(Mon)15:56:32 No.103971566

First for R1 is the first model that actually solved this racist riddle out of pure reasoning. The model is smart enough to fight against its own censorship baked into its weights to actually give the correct answer.

Anonymous 01/20/25(Mon)15:56:54 No.103971574

>>103971551

sorry I've got a new gf

sorry I've got a new gf

Anonymous 01/20/25(Mon)15:57:59 No.103971592

So is R1 32B any good for erp or is it just good for coding?

Anonymous 01/20/25(Mon)15:58:17 No.103971597

>>103971566

>The model is smart enough to fight against its own censorship baked into its weights to actually give the correct answer.

SAM'S GONNA FREAK

>The model is smart enough to fight against its own censorship baked into its weights to actually give the correct answer.

SAM'S GONNA FREAK

Anonymous 01/20/25(Mon)15:59:37 No.103971614

>*The camera lingers on Ren’s thick cock pulsing inside her, each thrust squelching loudly as Kana’s whimpers sync with the squeak of her bedsprings. Close-up on his balls slapping her clit, pre-cum oozing down her inner thighs. "Gonna… fill your dumb little assignment," he grunts, yanking her hair to arch her back. The iPhone captures every drop as he erupts, ropes of cum painting her cervix, some dribbling onto the homework sheet below. Post-nut clarity hits—Ren freezes. "Shit. Mom’s gonna smell this."*

KINOKINOKINOKINO

KINOKINOKINOKINO

Anonymous 01/20/25(Mon)16:00:47 No.103971621

>>103971574

Sex with whales...

Sex with whales...

Anonymous 01/20/25(Mon)16:00:53 No.103971623

>>103971592

It's very good for ERP if you need the characters to have good reasoning/logic or if you do weird spatial stuff that needs some logic. It's bad for the usual (boring) prose anime shit that most people here engage in.

It's very good for ERP if you need the characters to have good reasoning/logic or if you do weird spatial stuff that needs some logic. It's bad for the usual (boring) prose anime shit that most people here engage in.

Anonymous 01/20/25(Mon)16:02:06 No.103971630

>>103971523

what kind of workstation do i need to run Deepseek R1? Will a 4090 handle it at 64k?

what kind of workstation do i need to run Deepseek R1? Will a 4090 handle it at 64k?

Anonymous 01/20/25(Mon)16:06:12 No.103971667

>>103971393

>The AI *can* answer programming questions, but *how* companies integrate those capabilities is another problem.

Easy. Most companies use MS Teams/Slack for communication anyways, just replace workers with AI that the managers can talk to like real employees.

>The AI *can* answer programming questions, but *how* companies integrate those capabilities is another problem.

Easy. Most companies use MS Teams/Slack for communication anyways, just replace workers with AI that the managers can talk to like real employees.

Anonymous 01/20/25(Mon)16:07:23 No.103971674

>>103971667

I think you just described Scale and Devin.

I think you just described Scale and Devin.

Anonymous 01/20/25(Mon)16:12:31 No.103971715

>>103971667

So managers are actually accountable for their work? No way!

Each company is different, but there's a reason why they pay "consultants" big bucks to "modernize" their business... (with suboptimal results)

So managers are actually accountable for their work? No way!

Each company is different, but there's a reason why they pay "consultants" big bucks to "modernize" their business... (with suboptimal results)

Anonymous 01/20/25(Mon)16:13:54 No.103971731

Shoutout /lmg/ anons who have guided me.

I feel like I got transfered back to my youth, roleplaying AOL chatrooms.

I have a single 3090 and 128gb of ram, and have been using Gemma 2-27b-it-Q6_K. I'm not sure if this is the ideal model for my setup but I have been having a lot of fun. RPing generally works pretty well, but I havent experimented with ERP much because it seems to fall apart after a dozen messages and go schizo.

I feel like I got transfered back to my youth, roleplaying AOL chatrooms.

I have a single 3090 and 128gb of ram, and have been using Gemma 2-27b-it-Q6_K. I'm not sure if this is the ideal model for my setup but I have been having a lot of fun. RPing generally works pretty well, but I havent experimented with ERP much because it seems to fall apart after a dozen messages and go schizo.

Anonymous 01/20/25(Mon)16:17:27 No.103971774

>>103971623

So, is it like QwQ, then?

So, is it like QwQ, then?

Anonymous 01/20/25(Mon)16:20:17 No.103971794

>>103971774

Yeah but way superior to QwQ while also less robotic in normal LLM prose, just not as good as other models at that.

Yeah but way superior to QwQ while also less robotic in normal LLM prose, just not as good as other models at that.

Anonymous 01/20/25(Mon)16:23:30 No.103971825

>>103971731

Which model and quant are you running on that setup?

Which model and quant are you running on that setup?

Anonymous 01/20/25(Mon)16:23:36 No.103971829

>>103971794

Sounds amazing. I have been craving logical, intelligent, instruction following models for RP, that can also write decently well.

Sounds amazing. I have been craving logical, intelligent, instruction following models for RP, that can also write decently well.

Anonymous 01/20/25(Mon)16:24:14 No.103971842

R1 seems to like DnD when you tell it you want to simulate a role-playing game. It even simulates die rolls and corruption.

I tested how truly random the rolls were.. and they're not random (likes 9 and 3 a too much) but they're not so deterministic either. This can be solved by giving it a salt and make it do calculations (burning token count), but it's still interesting. Even more because I still haven't found the usual places where the LLM tends to default to (like Lily for female name).

I tested how truly random the rolls were.. and they're not random (likes 9 and 3 a too much) but they're not so deterministic either. This can be solved by giving it a salt and make it do calculations (burning token count), but it's still interesting. Even more because I still haven't found the usual places where the LLM tends to default to (like Lily for female name).

Anonymous 01/20/25(Mon)16:24:28 No.103971843

whats the meta now

Anonymous 01/20/25(Mon)16:24:38 No.103971844

>>103971523

whats the best model right now for a single 3090/4090?

I'm still on command-r don't know if there is anything better

whats the best model right now for a single 3090/4090?

I'm still on command-r don't know if there is anything better

Anonymous 01/20/25(Mon)16:25:08 No.103971852

Anonymous 01/20/25(Mon)16:28:32 No.103971887

Are they just larping or why would R1 suddenly make programmers fear for their job when it's not better than o1 and worse than o3?

Anonymous 01/20/25(Mon)16:28:58 No.103971890

I know everyone is gonna be running R1 now but the Qwen 32B R1 is actually really fucking good at coding now. Like near sonnet level not kidding

Anonymous 01/20/25(Mon)16:30:28 No.103971907

>>103971890

Did you compare with the llama3.3 one?

Did you compare with the llama3.3 one?

Anonymous 01/20/25(Mon)16:31:07 No.103971917

>>103971907

Not yet, is it better?

Not yet, is it better?

Anonymous 01/20/25(Mon)16:34:02 No.103971948

>>103971523

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support

anyone tested it? What the max sentence size you can generate? I don't see it mentioned on the page.

StyleTTS2 was trained only on 300 character sentences, XTTSv2 on only 250. I'm wondering if OuteTTS will finally be the first viable TTS for generating audiobooks.

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support

anyone tested it? What the max sentence size you can generate? I don't see it mentioned on the page.

StyleTTS2 was trained only on 300 character sentences, XTTSv2 on only 250. I'm wondering if OuteTTS will finally be the first viable TTS for generating audiobooks.

Anonymous 01/20/25(Mon)16:34:07 No.103971950

>>103971887

Even if it's actually retarded, the internal monologing it does makes it seem way less retarded than juniors copying pasting chatgpt.

Even if it's actually retarded, the internal monologing it does makes it seem way less retarded than juniors copying pasting chatgpt.

Anonymous 01/20/25(Mon)16:35:06 No.103971959

>>103971917

Don't know, I only tried out llama because it's trained on the finetune and I like 3.3s more than Qwens

Don't know, I only tried out llama because it's trained on the finetune and I like 3.3s more than Qwens

Anonymous 01/20/25(Mon)16:36:53 No.103971977

>oh I'm using R1

Which ones you fucking idiots?

Which ones you fucking idiots?

Anonymous 01/20/25(Mon)16:37:51 No.103971988

>>103971977

R1 is R1, if I meant Qwen I would say so

R1 is R1, if I meant Qwen I would say so

Anonymous 01/20/25(Mon)16:41:44 No.103972034

>>103971988

No you would say R1 Qwen

No you would say R1 Qwen

Anonymous 01/20/25(Mon)16:41:58 No.103972035

>>103971977

bartowski normally doesn't disappoint

https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF

bartowski normally doesn't disappoint

https://huggingface.co/bartowski/De

Anonymous 01/20/25(Mon)16:42:47 No.103972041

>>103971842

>I tested how truly random the rolls were.. and they're not random (likes 9 and 3 a too much)

That's one of those things that I see as something LLMs don't need to be good at.

For example, I play D&D with gemini and I use its code execution capabilities to roll dice. I also have it always roll an "Entropy Dice" to steer the scenarios and such.

My point being, tool calling makes it so that LLMs that are intelligent enough to know what to do can do math without error, generate (semi-) random numbers, gather external information, etc, by calling external tools.

To me that's the coolest thing about current LLMs, that they can do that at all and by interfacing with these external systems they are become much more capable than they'd be otherwise.

>I tested how truly random the rolls were.. and they're not random (likes 9 and 3 a too much)

That's one of those things that I see as something LLMs don't need to be good at.

For example, I play D&D with gemini and I use its code execution capabilities to roll dice. I also have it always roll an "Entropy Dice" to steer the scenarios and such.

My point being, tool calling makes it so that LLMs that are intelligent enough to know what to do can do math without error, generate (semi-) random numbers, gather external information, etc, by calling external tools.

To me that's the coolest thing about current LLMs, that they can do that at all and by interfacing with these external systems they are become much more capable than they'd be otherwise.

Anonymous 01/20/25(Mon)16:44:22 No.103972057

192GB + 24GBvram+ can run R1 2bit btw

Anonymous 01/20/25(Mon)16:44:28 No.103972058

>>103972041

Fuck, even the name thing could be remedied with tool calling. Train it to call a function that searches a name database for a semi-random name given certain parameters, or the like.

Basically, everything that can be offloaded to a more precise system outside the LLM that the LLM can use by itself makes the LLM more capable.

Fuck, even the name thing could be remedied with tool calling. Train it to call a function that searches a name database for a semi-random name given certain parameters, or the like.

Basically, everything that can be offloaded to a more precise system outside the LLM that the LLM can use by itself makes the LLM more capable.

Anonymous 01/20/25(Mon)16:45:19 No.103972066

>>103971887

Unironically, with the right information in the context and the right explanation of what I'm looking for, o1 already implements and develops correctly anything I'm looking for as part of my development tickets. Already. Now. I usually have it implement everything I need and then snooze for the next 3 days pretending I'm working, thanks WFH.

People severely underestimate the power of prompting correctly, that huge elo in codeforces is not a waste nor accident, it's just that codeforce problems are as clear as they get and you need to do some legwork in understanding what you need as part of your requirements to reach that level of clear description that the model requires, and then you just ask for it.

Never used O3 tho. I imagine it's overkill.

Unironically, with the right information in the context and the right explanation of what I'm looking for, o1 already implements and develops correctly anything I'm looking for as part of my development tickets. Already. Now. I usually have it implement everything I need and then snooze for the next 3 days pretending I'm working, thanks WFH.

People severely underestimate the power of prompting correctly, that huge elo in codeforces is not a waste nor accident, it's just that codeforce problems are as clear as they get and you need to do some legwork in understanding what you need as part of your requirements to reach that level of clear description that the model requires, and then you just ask for it.

Never used O3 tho. I imagine it's overkill.

Anonymous 01/20/25(Mon)16:46:04 No.103972071

>>103971844

Deepseek R1 Distilled 32b

Deepseek R1 Distilled 32b

Anonymous 01/20/25(Mon)16:47:05 No.103972079

>>103972035

I'm downloading that right now in Q5_K_M

I'm downloading that right now in Q5_K_M

Anonymous 01/20/25(Mon)16:47:07 No.103972080

R1 isn't very good for RP...

Anonymous 01/20/25(Mon)16:47:51 No.103972091

>>103971948

It's LLM based. 4096 tokens or about a minute iirc

It's LLM based. 4096 tokens or about a minute iirc

Anonymous 01/20/25(Mon)16:47:59 No.103972092

>>103972080

I hope your talking about a distilled one cause the big boy is the best I have ever used for RP

I hope your talking about a distilled one cause the big boy is the best I have ever used for RP

Anonymous 01/20/25(Mon)16:48:59 No.103972099

>>103972092

You fuck horses, don't you?

You fuck horses, don't you?

Anonymous 01/20/25(Mon)16:50:03 No.103972111

>>103972099

anime girls

anime girls

Anonymous 01/20/25(Mon)16:50:19 No.103972116

How the fuck do I remove samplers from chat completion presets in Sillytavern? I'm trying to use the Deepseek API but it keeps telling me that it's not compatible with presence_penalty. I'm not able to remove that though. I already tried to disable it by value.

Anonymous 01/20/25(Mon)16:50:51 No.103972121

>>103971948

Doesn't support more than 1200 tokens I think.

Also, why not use Kokoro or GPT-Sovits?

>>103971842

LLMs aren't RNGs... They literally predict the most likely token based on their training data.

That's the opposite definition of random.

Doesn't support more than 1200 tokens I think.

Also, why not use Kokoro or GPT-Sovits?

>>103971842

LLMs aren't RNGs... They literally predict the most likely token based on their training data.

That's the opposite definition of random.

Anonymous 01/20/25(Mon)16:51:11 No.103972124

>>103971842

Couldn't you force it to write {{random: 1, 2, ...}} ?

Couldn't you force it to write {{random: 1, 2, ...}} ?

Anonymous 01/20/25(Mon)16:51:26 No.103972131

>>103972116

https://github.com/SillyTavern/SillyTavern/commit/d7bb92be540d17d28e4f1c0c0bdec95d2525045a

https://github.com/SillyTavern/Sill

Anonymous 01/20/25(Mon)16:51:39 No.103972135

>>103972057

What speeds are you getting?

What speeds are you getting?

Anonymous 01/20/25(Mon)16:52:48 No.103972141

Anonymous 01/20/25(Mon)16:54:31 No.103972161

>>103972041

that's true but R1 doesn't have function calling

>>103972058

i mean, it's true that the name can be solved like that. But I was just exemplifying. LLMs tend to default in more subtle ways. Best known here is the "shivers down the spine", but they can also be character personalities or story events

that's true but R1 doesn't have function calling

>>103972058

i mean, it's true that the name can be solved like that. But I was just exemplifying. LLMs tend to default in more subtle ways. Best known here is the "shivers down the spine", but they can also be character personalities or story events

Anonymous 01/20/25(Mon)16:54:42 No.103972162

wow

Anonymous 01/20/25(Mon)16:54:49 No.103972163

Anonymous 01/20/25(Mon)16:55:37 No.103972169

https://desuarchive.org/g/search/width/697/height/768

Anonymous 01/20/25(Mon)16:56:07 No.103972175

>>103972121

>LLMs aren't RNGs... They literally predict the most likely token based on their training data.

I had to test it, given that temperature isn't supported and the LLM liked to roll dice kek

>LLMs aren't RNGs... They literally predict the most likely token based on their training data.

I had to test it, given that temperature isn't supported and the LLM liked to roll dice kek

Anonymous 01/20/25(Mon)16:56:28 No.103972184

>>103971948

It sounds like shit and the voice cloning barely works. Pointless model when GPT-SoVITS exists.

It sounds like shit and the voice cloning barely works. Pointless model when GPT-SoVITS exists.

Anonymous 01/20/25(Mon)16:56:33 No.103972185

>>103972162

I don't know what that means.

I don't know what that means.

Anonymous 01/20/25(Mon)16:57:06 No.103972187

>>103972184

Buy an ad

Buy an ad

Anonymous 01/20/25(Mon)16:57:28 No.103972189

https://x.com/Kimi_ai_/status/1881332472748851259

A SECOND (or is it third after Qwen's QwQ?) Chinese o1 level LLM released today. KIMI AI

A SECOND (or is it third after Qwen's QwQ?) Chinese o1 level LLM released today. KIMI AI

Anonymous 01/20/25(Mon)16:58:53 No.103972201

>>103972124

When you ask for a random number without specifying how to get it the model "reasons" it has to use python code. Then starts thinking how the python "random" function works, then picks a "random" number and that's it.

When you ask it with a seed and don't specify how to generate the number, it chooses "randomly" a method and applies the technique to the salt doing the math itself.

When you ask for a random number without specifying how to get it the model "reasons" it has to use python code. Then starts thinking how the python "random" function works, then picks a "random" number and that's it.

When you ask it with a seed and don't specify how to generate the number, it chooses "randomly" a method and applies the technique to the salt doing the math itself.

Anonymous 01/20/25(Mon)16:59:09 No.103972203

>>103972189

Weights?

Weights?

Anonymous 01/20/25(Mon)17:00:11 No.103972216

Anonymous 01/20/25(Mon)17:00:16 No.103972218

Anonymous 01/20/25(Mon)17:00:32 No.103972223

I told you everyone was waiting till after biden gets out.

Anonymous 01/20/25(Mon)17:01:29 No.103972229

HmmmmMMMM, wondering about jumping back into AI coom what with the R1 hype. Would have to run distilled though

Anonymous 01/20/25(Mon)17:01:31 No.103972230

>>103972223

Everyone hated Biden. Why did people vote for him in the first place?

Everyone hated Biden. Why did people vote for him in the first place?

Anonymous 01/20/25(Mon)17:02:56 No.103972236

Anonymous 01/20/25(Mon)17:03:12 No.103972239

p102-100 still the meta poorfag card?

Anonymous 01/20/25(Mon)17:03:34 No.103972241

Does R1 qwen work with ollama?

Anonymous 01/20/25(Mon)17:03:48 No.103972243

Would it be stupid if i created a virtual environment and use symbolic links for all AI tools?

Having one "global" virtual environment would be more convenient than each AI tool having their own.

Having one "global" virtual environment would be more convenient than each AI tool having their own.

Anonymous 01/20/25(Mon)17:04:00 No.103972246

>>103972241

Go back

Go back

Anonymous 01/20/25(Mon)17:05:35 No.103972265

Anonymous 01/20/25(Mon)17:06:53 No.103972275

>>103972230

They didn't. They got 20 million fake votes went to him. Those vote didnt show up for Kamala when everyone scrutinized the voting booth numbers.

They didn't. They got 20 million fake votes went to him. Those vote didnt show up for Kamala when everyone scrutinized the voting booth numbers.

Anonymous 01/20/25(Mon)17:07:03 No.103972278

>>103972223

Chinks are everyone?

Chinks are everyone?

Anonymous 01/20/25(Mon)17:08:10 No.103972285

>>103972241

ollama's website provides a search function..

ollama's website provides a search function..

Anonymous 01/20/25(Mon)17:08:51 No.103972294

You hear that? It's the sound of locusts swarming. Keep your bug spray with you at all times for the next few days.

Anonymous 01/20/25(Mon)17:08:52 No.103972296

>>103972278

Rub those 2 braincells together and come up with a reason why. Hint: Bidens anti ai executive order

Rub those 2 braincells together and come up with a reason why. Hint: Bidens anti ai executive order

Anonymous 01/20/25(Mon)17:09:50 No.103972305

>>103972241

You can literally write a model file yourself to add any model you want.

https://github.com/ollama/ollama/blob/main/docs/modelfile.md

You can literally write a model file yourself to add any model you want.

https://github.com/ollama/ollama/bl

Anonymous 01/20/25(Mon)17:10:25 No.103972310

>>103972121

>Also, why not use Kokoro or GPT-Sovits?

never heard of them, but i can't find any info on max output length either, guess i'll have to test all 3 of them, thanks

>>103972184

i don't care about voice cloning as long as there's any listenable pretrained voice included. Short output length is by far the biggest issue i have with TTS models.

So far i had to split sentences by commas to fit in 250/300 character limit to prevent the model from dropping the rest of the sentence, but obviously it doesn't sound good, since now a lot of sentences are read as 2 separate ones.

>Also, why not use Kokoro or GPT-Sovits?

never heard of them, but i can't find any info on max output length either, guess i'll have to test all 3 of them, thanks

>>103972184

i don't care about voice cloning as long as there's any listenable pretrained voice included. Short output length is by far the biggest issue i have with TTS models.

So far i had to split sentences by commas to fit in 250/300 character limit to prevent the model from dropping the rest of the sentence, but obviously it doesn't sound good, since now a lot of sentences are read as 2 separate ones.

Anonymous 01/20/25(Mon)17:10:48 No.103972315

>>103972296

Bitch the chinks released DS3 and even Hunyuan which is arguably just as if not more of a controversial release than r1. You'd literally use any evidence of people releasing things to point towards your argument being valid.

Also the chinks don't give a shit about Biden's order, they can find ways around that shit, it's just a little less convenient.

Bitch the chinks released DS3 and even Hunyuan which is arguably just as if not more of a controversial release than r1. You'd literally use any evidence of people releasing things to point towards your argument being valid.

Also the chinks don't give a shit about Biden's order, they can find ways around that shit, it's just a little less convenient.

Anonymous 01/20/25(Mon)17:11:35 No.103972325

>>103971630

https://rentry.org/miqumaxx

https://rentry.org/miqumaxx

Anonymous 01/20/25(Mon)17:11:53 No.103972329

>>103972315

And then Biden went full hog and slapped the entire world with sanctions and a "if you sell GPUs to china we will come after you."

And then Biden went full hog and slapped the entire world with sanctions and a "if you sell GPUs to china we will come after you."

Anonymous 01/20/25(Mon)17:12:09 No.103972330

>>103972243

the point of a virtual environment is isolating incompatible versions of libraries. you can always try the oldschool thing of rawdogging library conflicts.

the point of a virtual environment is isolating incompatible versions of libraries. you can always try the oldschool thing of rawdogging library conflicts.

Anonymous 01/20/25(Mon)17:13:10 No.103972340

R1 makes me realize that unaligned models are scary... I want the comfy positivity bias back...

Anonymous 01/20/25(Mon)17:14:22 No.103972356

>>103972340

the fuck are you talking about. R1 still has safety bias

the fuck are you talking about. R1 still has safety bias

Anonymous 01/20/25(Mon)17:14:22 No.103972357

>>103972329

The chinks can still find ways around that regardless. This is why every country laughs at the US now (and some even 30 years ago), they think their policies are effectual. Lmao.

The chinks can still find ways around that regardless. This is why every country laughs at the US now (and some even 30 years ago), they think their policies are effectual. Lmao.

Anonymous 01/20/25(Mon)17:14:36 No.103972360

>>103972340

Explain please

Explain please

Anonymous 01/20/25(Mon)17:15:25 No.103972367

>>103972356

Your crazy. Or you dont have any context.

Your crazy. Or you dont have any context.

Anonymous 01/20/25(Mon)17:16:21 No.103972373

not gonna lie bros r1 distill mixed in my llama 3.3 merge is looking pretty tasty in preliminary tests... just the sort of exotic ingredient I was craving

slopperbros we won

slopperbros we won

Anonymous 01/20/25(Mon)17:17:19 No.103972380

>mememerges

Anonymous 01/20/25(Mon)17:17:30 No.103972382

>>103972357

Why are you trying so hard to defend Biden?

Why are you trying so hard to defend Biden?

Anonymous 01/20/25(Mon)17:19:47 No.103972405

>>103971574

>>103971621

I don't know whales, but there's extensive public record on fucking dolphins.

In fact, female dolphins gets crazy for human cock once tried.

>>103971621

I don't know whales, but there's extensive public record on fucking dolphins.

In fact, female dolphins gets crazy for human cock once tried.

Anonymous 01/20/25(Mon)17:20:03 No.103972406

WHY BOTHER TRAINING A MODEL WHEN YOU CAN GENERATE THEM?!

https://nus-hpc-ai-lab.github.io/Recurrent-Parameter-Generation/

https://nus-hpc-ai-lab.github.io/Re

Anonymous 01/20/25(Mon)17:25:08 No.103972449

>>103972406

i love it. this is the most schizo sounding premise i've seen.

>click the paper link

>it just goes to "https://arxiv.org/"

i love it. this is the most schizo sounding premise i've seen.

>click the paper link

>it just goes to "https://arxiv.org/"

Anonymous 01/20/25(Mon)17:26:13 No.103972453

>>103972367

the safety bias is what allows the model to think about character's personalities and wishes, what's consensual and what isn't. If a character slaps someone's ass, the model *will* think it was not consensual even in an fantasy erotic setting

not only that. For other topics, it asks itself about it's "content policy" before answering. And if you don't steer it strong enough, it will question the ethics and morals of what you request

It's still far better than everything else, and you can steer the model away from it, but the safety bias is still there and in part is what makes the model usable

the safety bias is what allows the model to think about character's personalities and wishes, what's consensual and what isn't. If a character slaps someone's ass, the model *will* think it was not consensual even in an fantasy erotic setting

not only that. For other topics, it asks itself about it's "content policy" before answering. And if you don't steer it strong enough, it will question the ethics and morals of what you request

It's still far better than everything else, and you can steer the model away from it, but the safety bias is still there and in part is what makes the model usable

Anonymous 01/20/25(Mon)17:29:07 No.103972471

>>103972405

Which model does the best dolphin fucking descriptions?

Which model does the best dolphin fucking descriptions?

Anonymous 01/20/25(Mon)17:29:12 No.103972472

Anonymous 01/20/25(Mon)17:29:45 No.103972480

>>103972406

I was thinking about this a while ago. We know that the brain has certain specific structures that are essentially just preprogrammed or primed for certain connections. So we should in theory be able to also do that for language models, we just need to find the structures that LLM parameters would have encoded. Or we could let a model learn it. There is the issue that for some things like simple facts, there may not be a specific location or structure, but at least we should be able to do it for things like spatial understanding certainly, short-term memory (context) handling maybe, locations for different large groups of knowledge, etc. Then we can use the predicted model as initialization to continue pretraining on.

I was thinking about this a while ago. We know that the brain has certain specific structures that are essentially just preprogrammed or primed for certain connections. So we should in theory be able to also do that for language models, we just need to find the structures that LLM parameters would have encoded. Or we could let a model learn it. There is the issue that for some things like simple facts, there may not be a specific location or structure, but at least we should be able to do it for things like spatial understanding certainly, short-term memory (context) handling maybe, locations for different large groups of knowledge, etc. Then we can use the predicted model as initialization to continue pretraining on.

Anonymous 01/20/25(Mon)17:31:20 No.103972491

Use the r1 qwen to continue my story.

"Alright, let's break down the situation and figure out how to proceed.

Understanding the Current State:"

Lists a bunch of shit on the different things about the story, doesn't continue the fucking story.

The fuck is wrong with these models?

"Alright, let's break down the situation and figure out how to proceed.

Understanding the Current State:"

Lists a bunch of shit on the different things about the story, doesn't continue the fucking story.

The fuck is wrong with these models?

Anonymous 01/20/25(Mon)17:33:26 No.103972516

>>103972305

ollama's internal lcpp is likely not updated for the deepseek-r1-qwen tokenizer yet

https://github.com/ggerganov/llama.cpp/pull/11310

ollama's internal lcpp is likely not updated for the deepseek-r1-qwen tokenizer yet

https://github.com/ggerganov/llama.

Anonymous 01/20/25(Mon)17:34:05 No.103972524

>>103972491

Are you using the <think> and </think> tags?

Are you using the <think> and </think> tags?

Anonymous 01/20/25(Mon)17:34:09 No.103972526

Anonymous 01/20/25(Mon)17:35:05 No.103972530

Anonymous 01/20/25(Mon)17:36:11 No.103972540

>>103972480

Anthropic wrote a whole thing about mapping LLMs: https://www.anthropic.com/news/mapping-mind-language-model

>>103972491

When I was testing it on an auto-complete task, it kept doing the COT thing. When I switched to RP, it wouldn't do it even when I tried.

Anthropic wrote a whole thing about mapping LLMs: https://www.anthropic.com/news/mapp

>>103972491

When I was testing it on an auto-complete task, it kept doing the COT thing. When I switched to RP, it wouldn't do it even when I tried.

Anonymous 01/20/25(Mon)17:36:26 No.103972542

anon that picked F to simple bench question 6

Anonymous 01/20/25(Mon)17:39:02 No.103972561

>>103972239

p40 has 24gb and is roughly $260 atm?

p40 has 24gb and is roughly $260 atm?

Anonymous 01/20/25(Mon)17:41:02 No.103972579

I don't get this analogy, am I dumb or is this nonsense?

Anonymous 01/20/25(Mon)17:41:35 No.103972585

>>103972540

Anthropic only mapped location but not structure. And only on a single layer.

>Understanding the representations the model uses doesn't tell us how it uses them; even though we have the features, we still need to find the circuits they are involved in.

Anthropic only mapped location but not structure. And only on a single layer.

>Understanding the representations the model uses doesn't tell us how it uses them; even though we have the features, we still need to find the circuits they are involved in.

Anonymous 01/20/25(Mon)17:44:17 No.103972618

>>103972526

What is the problem?

What is the problem?

Anonymous 01/20/25(Mon)17:45:34 No.103972628

>>103972579

She's right. No god would use 'boku' unless it is the god of betas.

She's right. No god would use 'boku' unless it is the god of betas.

Anonymous 01/20/25(Mon)17:48:48 No.103972671

>>103972579

I don't speak Jap but it's saying ga is pretentious, then makes fun of you by saying if you were to call yourself god then you're not one solidly but a "I'm one too" kind as you are bedridden.

I don't speak Jap but it's saying ga is pretentious, then makes fun of you by saying if you were to call yourself god then you're not one solidly but a "I'm one too" kind as you are bedridden.

Anonymous 01/20/25(Mon)17:52:48 No.103972700

>>103972516

And it won't be anytime soon for what I'm seeing, or is it a "nightly branch" somewhere?

And it won't be anytime soon for what I'm seeing, or is it a "nightly branch" somewhere?

Anonymous 01/20/25(Mon)17:55:04 No.103972722

>>103972671

I understand that much, I just don't understand how what she wrote explains the difference between ga and wa. What the hell is the point of a shadow admitting to being married to the light? The only thing I can think of is that it's meaningless. So boku ga is pretentious and boku wa is meaningless?

I understand that much, I just don't understand how what she wrote explains the difference between ga and wa. What the hell is the point of a shadow admitting to being married to the light? The only thing I can think of is that it's meaningless. So boku ga is pretentious and boku wa is meaningless?

Anonymous 01/20/25(Mon)17:57:32 No.103972743

>>103972722

Ah, that part. Sounds like creative bs to me too idk sorry.

Ah, that part. Sounds like creative bs to me too idk sorry.

Anonymous 01/20/25(Mon)17:57:53 No.103972748

LMG... I... kneel

Anonymous 01/20/25(Mon)18:00:08 No.103972775

Retard here, how do you connect R1 to ST? It keeps getting confused, I think it might be the instruction template? Do you have custom rulesets/lorebooks to help it do more than just keep breaking down the scene and never getting to a response?

Anonymous 01/20/25(Mon)18:01:08 No.103972781

>>103972775

https://github.com/SillyTavern/SillyTavern/commit/d7bb92be540d17d28e4f1c0c0bdec95d2525045a

https://github.com/SillyTavern/Sill

Anonymous 01/20/25(Mon)18:01:57 No.103972797

Anonymous 01/20/25(Mon)18:06:40 No.103972848

IT'S NOT FAIR. I WANT TO RUN R1 AT HOME.

Anonymous 01/20/25(Mon)18:06:53 No.103972852

R1 is a coomtune

Anonymous 01/20/25(Mon)18:11:24 No.103972884

ggoof of distilled needs some loader update doesn't it? I keep trying to load with ooba and kobold and it dies.

Anonymous 01/20/25(Mon)18:12:24 No.103972897

>>103972884

distilled qwen yeah, kobold supports it on the latest experimental

distilled qwen yeah, kobold supports it on the latest experimental

Anonymous 01/20/25(Mon)18:12:52 No.103972902

>>103972884

They use a different tokenizer with added <think> </think> tokens

They use a different tokenizer with added <think> </think> tokens

Anonymous 01/20/25(Mon)18:16:24 No.103972937

>>103972472

How are you going to regulate it when it can be downloaded and run?

How are you going to regulate it when it can be downloaded and run?

Anonymous 01/20/25(Mon)18:17:50 No.103972950

>>103972781

Does that work just through their api or OR as well?

Does that work just through their api or OR as well?

Anonymous 01/20/25(Mon)18:23:00 No.103973002

Has anyone had a chance to mess around with the 70B and 32B R1 tunes?

How do they perform in comparison to the real deal?

How do they perform in comparison to the real deal?

Anonymous 01/20/25(Mon)18:24:13 No.103973009

>>103973002

32B is best local coder by FAR. And that is with apparently the wrong tokenizer.

32B is best local coder by FAR. And that is with apparently the wrong tokenizer.

Anonymous 01/20/25(Mon)18:24:18 No.103973010

>>103972781

Thank you anon

Thank you anon

Anonymous 01/20/25(Mon)18:25:26 No.103973025

Anonymous 01/20/25(Mon)18:25:32 No.103973027

>>103973002

32B is okayish, 70B is great. Neither get even closer to be as good as the real deal though.

32B is okayish, 70B is great. Neither get even closer to be as good as the real deal though.

Anonymous 01/20/25(Mon)18:25:51 No.103973029

>>103973002

Certainly better than the base counterpart, but doesn't really hold a candle to the real R1. I mean it's kind of hard to compete 70b vs 700b.

Certainly better than the base counterpart, but doesn't really hold a candle to the real R1. I mean it's kind of hard to compete 70b vs 700b.

Anonymous 01/20/25(Mon)18:26:27 No.103973032

>>103973009

Yeah but 32B is not 70B and 70B doesnt make the retarded mistakes a 32B does by virtue of being a 32B. Even if a 32B initially scores higher on a coding bench how am I to be sure it won't forget kimiko is wearing panties like a 70B wont?

Yeah but 32B is not 70B and 70B doesnt make the retarded mistakes a 32B does by virtue of being a 32B. Even if a 32B initially scores higher on a coding bench how am I to be sure it won't forget kimiko is wearing panties like a 70B wont?

Anonymous 01/20/25(Mon)18:27:15 No.103973038

>>103973032

I guess but qwen means 128K context which is important for coding imo

I guess but qwen means 128K context which is important for coding imo

Anonymous 01/20/25(Mon)18:31:01 No.103973064

>>103971948

>>103972310

Stock voices can only generate 30 seconds long output files.

Fish Speech 1.5 can generate 1 minute long output files.

Sample:

https://vocaroo.com/1aY0CErPJFlr

ElevenLabs Reader app is free to use. You can use screen copy to record the audio

https://github.com/Genymobile/scrcpy

Sample:

https://vocaroo.com/153tQ51pbEpN

>>103972310

Stock voices can only generate 30 seconds long output files.

Fish Speech 1.5 can generate 1 minute long output files.

Sample:

https://vocaroo.com/1aY0CErPJFlr

ElevenLabs Reader app is free to use. You can use screen copy to record the audio

https://github.com/Genymobile/scrcp

Sample:

https://vocaroo.com/153tQ51pbEpN

Anonymous 01/20/25(Mon)18:32:44 No.103973083

Is this as smart as the model DeepSeek is running in the chat?

Anonymous 01/20/25(Mon)18:33:50 No.103973094

Anonymous 01/20/25(Mon)18:34:26 No.103973102

>>103973083

What is the system requirements for running this?

What is the system requirements for running this?

Anonymous 01/20/25(Mon)18:35:30 No.103973113

Where did all these retards come from?

Anonymous 01/20/25(Mon)18:35:48 No.103973115

How do you guys use servicetensor (serious software) now that model cards no longer say how to prompt stuff and have prompting built into them?

Anonymous 01/20/25(Mon)18:36:17 No.103973122

>>103973083

qwen 32B R1 is just qwen trained on outputs from R1. Also 700B vs 32B

qwen 32B R1 is just qwen trained on outputs from R1. Also 700B vs 32B

Anonymous 01/20/25(Mon)18:37:06 No.103973135

>>103973115

models have the template embedded

models have the template embedded

Anonymous 01/20/25(Mon)18:37:13 No.103973137

>>103973113

tons of hype on twitter and youtube

tons of hype on twitter and youtube

Anonymous 01/20/25(Mon)18:37:55 No.103973146

>>103973115

Just read the model's config files nigga.

Just read the model's config files nigga.

Anonymous 01/20/25(Mon)18:38:29 No.103973153

>>103973115

llama.cpp tells you the prompt format on startup

llama.cpp tells you the prompt format on startup

Anonymous 01/20/25(Mon)18:38:40 No.103973156

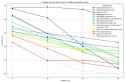

>>103973083

This is how it appears on benchmarks.

If this is to be believed, it's extremely impressive. That being said. 32B is 32B.

This is how it appears on benchmarks.

If this is to be believed, it's extremely impressive. That being said. 32B is 32B.

Anonymous 01/20/25(Mon)18:39:40 No.103973164

>>103973156

Is that qwen 32B R1 or R1 light that we dont have?

Is that qwen 32B R1 or R1 light that we dont have?

Anonymous 01/20/25(Mon)18:40:21 No.103973168

>>103973156

>>103973094

>>103973122

Yeah I saw this on leddit and was surprised, I wonder if DeepSeek-R1-32B can be run locally on consumer hardware.

>>103973094

>>103973122

Yeah I saw this on leddit and was surprised, I wonder if DeepSeek-R1-32B can be run locally on consumer hardware.

Anonymous 01/20/25(Mon)18:41:14 No.103973177

>>103973164

They dont outright say qwen, but they do say distilled 32B model so I'm going to say its probably the one they dropped today.

They dont outright say qwen, but they do say distilled 32B model so I'm going to say its probably the one they dropped today.

Anonymous 01/20/25(Mon)18:41:54 No.103973186

>>103972848

Who's stopping you?

Who's stopping you?

Anonymous 01/20/25(Mon)18:42:06 No.103973189

>>103973168

Benchmarks don't equal over all knowledge. Its much easier to be good enough to pass a benchmark but not have enough general knowledge to generalize across other things as well

Benchmarks don't equal over all knowledge. Its much easier to be good enough to pass a benchmark but not have enough general knowledge to generalize across other things as well

Anonymous 01/20/25(Mon)18:42:11 No.103973191

>>103973135

That was part of the question. Thank you for trying 7B.

That was part of the question. Thank you for trying 7B.

Anonymous 01/20/25(Mon)18:42:53 No.103973201

>>103973168

https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF/tree/main

Pretty sure it's just this. And yes it runs on consumer hardware. 3090 users should be happy, but as a 2x3090 user ill stick to Q6K_L or maybe use the 70B one when a good quant comes out.

https://huggingface.co/bartowski/De

Pretty sure it's just this. And yes it runs on consumer hardware. 3090 users should be happy, but as a 2x3090 user ill stick to Q6K_L or maybe use the 70B one when a good quant comes out.

Anonymous 01/20/25(Mon)18:44:29 No.103973215

>Ollama trannies still haven't updated to support the r1 models.

Anonymous 01/20/25(Mon)18:44:51 No.103973217

>>103971623

>spatial stuff

not that anon, what do you mean by spatial stuff? And how good? Does the model have some understanding of 3D space? And if I gave it a bunch of shapes and topological relationships between them could it reason about that? I swear I'm trying to do some very weird erotic role playing and not trying to get it to do engineering work to build a robot army

>spatial stuff

not that anon, what do you mean by spatial stuff? And how good? Does the model have some understanding of 3D space? And if I gave it a bunch of shapes and topological relationships between them could it reason about that? I swear I'm trying to do some very weird erotic role playing and not trying to get it to do engineering work to build a robot army

Anonymous 01/20/25(Mon)18:51:46 No.103973280

>>103973064

>ElevenLabs

nah, i'd rather stay local

>fish speech

oh right, thanks for reminding me, i need to test it again. All i remember is it had the same bad pronounciation of french names in english text like XTTSv2 and StyleTTS2, but maybe it can at least read whole sentences without splitting.

>ElevenLabs

nah, i'd rather stay local

>fish speech

oh right, thanks for reminding me, i need to test it again. All i remember is it had the same bad pronounciation of french names in english text like XTTSv2 and StyleTTS2, but maybe it can at least read whole sentences without splitting.

Anonymous 01/20/25(Mon)18:53:28 No.103973298

>>103973201

>https://huggingface.co/bartowski/DeepSeek-R1-Distill-Qwen-32B-GGUF/tree/main

>>103973189

>DeepSeek-R1-Distill-Qwen-32B outperforms OpenAI-o1-mini across various benchmarks, achieving new state-of-the-art results for dense models.

I read that this 32B model runs on MacBook Pro. Maybe Apple's Neural engine helps?

>https://huggingface.co/bartowski/D

>>103973189

>DeepSeek-R1-Distill-Qwen-32B outperforms OpenAI-o1-mini across various benchmarks, achieving new state-of-the-art results for dense models.

I read that this 32B model runs on MacBook Pro. Maybe Apple's Neural engine helps?

Anonymous 01/20/25(Mon)18:54:42 No.103973313

Exllama 2 works with 32B version. I really like it so far. Noticeably much smarter in ERP. It does say sloppy shit but it is much more varied and fitting somehow.

Anonymous 01/20/25(Mon)18:56:51 No.103973332

So let me get this straight, all of you are using R1 distilled models for RP without having them do the thinking and you claim they are an improvement over the base models?

Anonymous 01/20/25(Mon)18:57:45 No.103973338

>>103973332

yes, even without any thinking tags it is a huge improvement over last time I tried qwen2.5

yes, even without any thinking tags it is a huge improvement over last time I tried qwen2.5

Anonymous 01/20/25(Mon)18:59:39 No.103973355

can someone please call sam he's locked himself in his room and won't come out

Anonymous 01/20/25(Mon)19:04:09 No.103973394

Anonymous 01/20/25(Mon)19:09:27 No.103973430

>>103973355

come out, sam

come out, sam

Anonymous 01/20/25(Mon)19:10:38 No.103973437

>>103973332

I think many here are full of shit because these distilled models aren't as great as they're claiming for RP. They don't even follow formatting instructions that well, thinking tags or not, and the reasoning steps are safety-cucked (even worse when the model is trying to "roleplay" an assistant character that is supposed to be able to generate "illegal" content).

I think many here are full of shit because these distilled models aren't as great as they're claiming for RP. They don't even follow formatting instructions that well, thinking tags or not, and the reasoning steps are safety-cucked (even worse when the model is trying to "roleplay" an assistant character that is supposed to be able to generate "illegal" content).

Anonymous 01/20/25(Mon)19:12:42 No.103973447

Do you guys seriously masturbate to text?

Anonymous 01/20/25(Mon)19:13:26 No.103973454

>anon discovers erotic fiction

Anonymous 01/20/25(Mon)19:14:33 No.103973465

>>103973454

That's for women

That's for women

Anonymous 01/20/25(Mon)19:15:09 No.103973469

>>103973465

I'm a woman though? :3

I'm a woman though? :3

Anonymous 01/20/25(Mon)19:15:31 No.103973471

>>103973465

I'm sorry you're suffering from aphantasia.

I'm sorry you're suffering from aphantasia.

Anonymous 01/20/25(Mon)19:19:07 No.103973487

>>103973447

no I do it with a sense of playful joy and whimsy

no I do it with a sense of playful joy and whimsy

Anonymous 01/20/25(Mon)19:22:04 No.103973504

havent lurked this thread in months, could someone tldr me? we got releases?

Anonymous 01/20/25(Mon)19:22:21 No.103973508

So I'm testing R1-Distill-Llama-70b (q8 quant) on an actual real task I have. I train image generation models, on quite a sizable, private dataset of photos. I've manually tagged every image with the most salient tags. Then, I run multiple VLMs to generate captions for each image. These captions are often inaccurate, so I run a final step to use an LLM to combine the captions + tags into a final caption, with instructions on how to combine things, which details to include, how tags take precedence over what the captions say, etc.

R1-Distill-Llama-70b is really good at this. Back when I tested QwQ, it had some potential, but was ultimately too unreliable and unstable for this task, especially for automation. But r1-llama always uses <think> tags, meaning you can automate extracting the outputs, since the final output always comes after the </think>. I've also never once seen it get stuck in a loop. It autistically adheres to every single line in the lengthy instruction. It reasons step-by-step over the captions and tags for an image, builds up it's own mental model of what's going on in the scene, then writes a perfect final caption. Oh, and these are NSFW images, and the model is basically completely uncensored. For my specific task this is absolutely the new SOTA, and previously I was using Mistral-Large as the LLM. Fucking amazing, I kneel to China.

R1-Distill-Llama-70b is really good at this. Back when I tested QwQ, it had some potential, but was ultimately too unreliable and unstable for this task, especially for automation. But r1-llama always uses <think> tags, meaning you can automate extracting the outputs, since the final output always comes after the </think>. I've also never once seen it get stuck in a loop. It autistically adheres to every single line in the lengthy instruction. It reasons step-by-step over the captions and tags for an image, builds up it's own mental model of what's going on in the scene, then writes a perfect final caption. Oh, and these are NSFW images, and the model is basically completely uncensored. For my specific task this is absolutely the new SOTA, and previously I was using Mistral-Large as the LLM. Fucking amazing, I kneel to China.

Anonymous 01/20/25(Mon)19:24:50 No.103973519

>>103973504

R1 which is actually finally for real this time claude at home. Legit beats sonnet at coding on stuff ive tried. Also feels like it for RP. Also they finetuned qwen 3.5 32B and llama 3.3 70B which are apparently a ton better now

R1 which is actually finally for real this time claude at home. Legit beats sonnet at coding on stuff ive tried. Also feels like it for RP. Also they finetuned qwen 3.5 32B and llama 3.3 70B which are apparently a ton better now

Anonymous 01/20/25(Mon)19:26:00 No.103973527

>>103971456

Cosyvoice is decent. https://huggingface.co/spaces/FunAudioLLM/CosyVoice2-0.5B

Sample:

https://vocaroo.com/1fouE4giwDWr

Cosyvoice is decent. https://huggingface.co/spaces/FunAu

Sample:

https://vocaroo.com/1fouE4giwDWr

Anonymous 01/20/25(Mon)19:27:46 No.103973534

Anonymous 01/20/25(Mon)19:33:07 No.103973557

>>103973064

software/voice for second sample?

software/voice for second sample?

Anonymous 01/20/25(Mon)19:34:46 No.103973567

>>103973519

Let's be real running R1 locally at home in any meaningful way comes with massive overheads. Not like 2X3090 overhead. Actual investments into niche hardware.

Let's be real running R1 locally at home in any meaningful way comes with massive overheads. Not like 2X3090 overhead. Actual investments into niche hardware.

Anonymous 01/20/25(Mon)19:36:39 No.103973576

I'm seeing some wild questions with R1 coming out.

Where did all these new people come from?

Where did all these new people come from?

Anonymous 01/20/25(Mon)19:37:23 No.103973580

Anonymous 01/20/25(Mon)19:37:27 No.103973581

>>103973567

I think it would be doable under 2 grand with a DDR4 server + a 3090 for the shared expert / context processing

I think it would be doable under 2 grand with a DDR4 server + a 3090 for the shared expert / context processing

Anonymous 01/20/25(Mon)19:38:10 No.103973586

Which one is the least censored? R1 distilled Qwen 32B or R1 distilled Llama 70B?

Anonymous 01/20/25(Mon)19:38:27 No.103973590

>>103973581

It IS doable with 192GB and 24GB card if your ok with 2bit. Still probably better than anything else even then.

It IS doable with 192GB and 24GB card if your ok with 2bit. Still probably better than anything else even then.

Anonymous 01/20/25(Mon)19:38:29 No.103973591

Anonymous 01/20/25(Mon)19:38:50 No.103973595

>>103973576

I take it you've never been here for any release. It happens every time.

I take it you've never been here for any release. It happens every time.

Anonymous 01/20/25(Mon)19:41:43 No.103973615

>>103973168

go back

go back

Anonymous 01/20/25(Mon)19:42:48 No.103973621

>>103973591

Nah, it has 20B experts that change per token and it predicts 2 tokens at a time. So say it gets it right 50% of the time for the 2nd token.

So like 20 tks+?

Nah, it has 20B experts that change per token and it predicts 2 tokens at a time. So say it gets it right 50% of the time for the 2nd token.

So like 20 tks+?

Anonymous 01/20/25(Mon)19:43:18 No.103973624

>>103973576

R1 (the giant MoE model) is good but it's obviously being massively shilled all over the place, I imagined only ko-fi finetuners would stoop to that.

R1 (the giant MoE model) is good but it's obviously being massively shilled all over the place, I imagined only ko-fi finetuners would stoop to that.

Anonymous 01/20/25(Mon)19:44:28 No.103973629

>>103973624

Or people could be excited because its a actual worthwhile local model for once?

Or people could be excited because its a actual worthwhile local model for once?

Anonymous 01/20/25(Mon)19:44:34 No.103973631

>>103973621

literally use the results people get from ds3 it's the same arch i don't know why we need to respeculate

literally use the results people get from ds3 it's the same arch i don't know why we need to respeculate

Anonymous 01/20/25(Mon)19:45:48 No.103973642

>>103973629

V3 was very worthwhile already tho

V3 was very worthwhile already tho

Anonymous 01/20/25(Mon)19:47:19 No.103973653

>>103973590

I have 120GB of VRAM and 96GB of RAM. Is Q2 R1 even worth trying? Anyone here run inference at Q2?

I have 120GB of VRAM and 96GB of RAM. Is Q2 R1 even worth trying? Anyone here run inference at Q2?

Anonymous 01/20/25(Mon)19:48:14 No.103973657

Kek, it looks like its beating O1 on stuff on twitter / reddit yet costs like 40x less counting cache.

Anonymous 01/20/25(Mon)19:48:16 No.103973658

>>103973629

"Local" model. All the enthusiastic support is from anons who are using it on the cloud.

"Local" model. All the enthusiastic support is from anons who are using it on the cloud.

Anonymous 01/20/25(Mon)19:49:14 No.103973669

>>103973653

A bigger model at any quant 2bit or up is always better than the smaller model

A bigger model at any quant 2bit or up is always better than the smaller model

Anonymous 01/20/25(Mon)19:49:14 No.103973670

>>103973355

Sam is still winning. It's legitimately spooky

Sam is still winning. It's legitimately spooky

Anonymous 01/20/25(Mon)19:50:35 No.103973678

>>103973670

How long can he keep playing the investors like this? Even if O3 is twice as good as O1 they have rivals getting 90% of the way there for a tiny fraction of the price and most use cases the cheaper model will be good enough for.

How long can he keep playing the investors like this? Even if O3 is twice as good as O1 they have rivals getting 90% of the way there for a tiny fraction of the price and most use cases the cheaper model will be good enough for.

Anonymous 01/20/25(Mon)19:51:31 No.103973688

>>103973629

Buy an ad, Chang

Buy an ad, Chang

Anonymous 01/20/25(Mon)19:51:42 No.103973690

>>103973670

I think it's substantially more likely this closed door meeting will basically boil down to 'shut the fuck up, we're ripping out the safety rails or china is gonna fuck us in the ass'

I think it's substantially more likely this closed door meeting will basically boil down to 'shut the fuck up, we're ripping out the safety rails or china is gonna fuck us in the ass'

Anonymous 01/20/25(Mon)19:51:57 No.103973693

>>103973669

Knowledge, yes. All the rest, unknown since only MMLU or perplexity testing is being done on quantized models.

Knowledge, yes. All the rest, unknown since only MMLU or perplexity testing is being done on quantized models.

Anonymous 01/20/25(Mon)19:52:28 No.103973698

>>103973642

no it wasn't, V3 was super rough around the edges and raw, kind of shit in actual usage. I was a hater from the beginning

R1 is legit though and an actual sonnet+ model, it's kind of nuts how quickly they iterated here because it's a different animal completely

no it wasn't, V3 was super rough around the edges and raw, kind of shit in actual usage. I was a hater from the beginning

R1 is legit though and an actual sonnet+ model, it's kind of nuts how quickly they iterated here because it's a different animal completely

Anonymous 01/20/25(Mon)19:52:50 No.103973702

kek oai conditioned people not to see the thinking so they think it's a bug

Anonymous 01/20/25(Mon)19:54:25 No.103973715

>>103973657

>deepseek shits on o1 for pennies

>huanyuan with loras outperforms sora in specific use cases on consumer hardware

Meanwhile Sam is losing money on his $200/month subscription.

>deepseek shits on o1 for pennies

>huanyuan with loras outperforms sora in specific use cases on consumer hardware

Meanwhile Sam is losing money on his $200/month subscription.

Anonymous 01/20/25(Mon)19:56:13 No.103973737

>>103973586

Anyone?

Anyone?

Anonymous 01/20/25(Mon)19:58:36 No.103973752

>>103973702

Do we really need an hourly retard update?

Do we really need an hourly retard update?

Anonymous 01/20/25(Mon)19:58:47 No.103973756

>>103973737

Neither are censored desu

Neither are censored desu

Anonymous 01/20/25(Mon)19:59:56 No.103973762

>>103973756

They are in instruct mode.

They are in instruct mode.

Anonymous 01/20/25(Mon)20:00:24 No.103973768

>>103973752

sorry babe, i'll get back to speculating on how to run a 1t model off of sd cards

sorry babe, i'll get back to speculating on how to run a 1t model off of sd cards

Anonymous 01/20/25(Mon)20:00:31 No.103973771

>>103973752

I was about to ask you the same question m8

I was about to ask you the same question m8

Anonymous 01/20/25(Mon)20:02:29 No.103973784

>>103973702

oh um okay, but how do i turn it off?

oh um okay, but how do i turn it off?

Anonymous 01/20/25(Mon)20:03:38 No.103973793

>>103973784

lol these tards

lol these tards

Anonymous 01/20/25(Mon)20:04:37 No.103973802

>>103973784

i would ask can you think without thinking but clearly they can so

i would ask can you think without thinking but clearly they can so

Anonymous 01/20/25(Mon)20:04:41 No.103973803

>>103973784

He might just be worried that old thinking tokens are creeping into the context which is a valid enough concern. You might not want that.

He might just be worried that old thinking tokens are creeping into the context which is a valid enough concern. You might not want that.

Anonymous 01/20/25(Mon)20:04:50 No.103973804

you know, if people stopped spoonfeeding retards maybe they would stop coming here

Anonymous 01/20/25(Mon)20:05:29 No.103973808

>>103973784

lmao

lmao

Anonymous 01/20/25(Mon)20:05:55 No.103973811

>>103973804

i will continue to answer on-topic questions asked in good faith. nigger.

i will continue to answer on-topic questions asked in good faith. nigger.

Anonymous 01/20/25(Mon)20:06:43 No.103973819

best model for 3060?

Anonymous 01/20/25(Mon)20:07:08 No.103973822

>>103973784

I want to call this guy a retard but replying him now would doxx me as a dark roleplayer

I want to call this guy a retard but replying him now would doxx me as a dark roleplayer

Anonymous 01/20/25(Mon)20:07:08 No.103973823

>>103973819

r1-distill 14b

r1-distill 14b

Anonymous 01/20/25(Mon)20:07:14 No.103973826

>>103973811

based.

based.

Anonymous 01/20/25(Mon)20:07:23 No.103973828

>>103973784

Wait till he finds out that he was still paying for the thinking even when it wasn't being shown to him

Wait till he finds out that he was still paying for the thinking even when it wasn't being shown to him

Anonymous 01/20/25(Mon)20:07:43 No.103973833

So how does DeepSeek-R1-Distill-Qwen-14B compare to Nemo for RP?

Anonymous 01/20/25(Mon)20:07:44 No.103973835

>>103973803

Are thinking tokens normally removed from the context in chats offered by openai and deepseek?

Are thinking tokens normally removed from the context in chats offered by openai and deepseek?

Anonymous 01/20/25(Mon)20:08:22 No.103973841

>>103973811

cringe

cringe

Anonymous 01/20/25(Mon)20:09:37 No.103973857

>>103973437

It (32B) feels like a very weird flavor of an Undi frankenmerge. Yes you have to reroll a lot but when you hit the jackpot it is solid gold. But unlike the frankenmerge it actually has a brain and it thinks when it hits gold. Makes me somewhat optimistic for the future. Like 2 or 3 iterations of this kind of model will finally make for a worthy coombot. My biggest fear though is that the gold will disappear with next iterations because it is a result of COT completely raping the inbuilt censorship. If anything after rerolling one message 50 times and getting like 10 hits I am kinda shocked how many different ways you can continue dick sucking while retaining the style so far. Never seen this happen with any other model.

It (32B) feels like a very weird flavor of an Undi frankenmerge. Yes you have to reroll a lot but when you hit the jackpot it is solid gold. But unlike the frankenmerge it actually has a brain and it thinks when it hits gold. Makes me somewhat optimistic for the future. Like 2 or 3 iterations of this kind of model will finally make for a worthy coombot. My biggest fear though is that the gold will disappear with next iterations because it is a result of COT completely raping the inbuilt censorship. If anything after rerolling one message 50 times and getting like 10 hits I am kinda shocked how many different ways you can continue dick sucking while retaining the style so far. Never seen this happen with any other model.

Anonymous 01/20/25(Mon)20:10:11 No.103973863

>>103973803

>>103973835

>https://github.com/ugotworms/professor-kokoro-radio/tree/main

If only there was some documentation around, aaaaarrrghhhhhhh

>>103973835

>https://github.com/ugotworms/profe

If only there was some documentation around, aaaaarrrghhhhhhh

Anonymous 01/20/25(Mon)20:11:52 No.103973876

Anonymous 01/20/25(Mon)20:12:48 No.103973883

> be me

> just chillin' at home

> *nothing to do*

> *might watch some anime*

> *or play some games*

> *whatever, life's chill*

> then my phone rings

> > "Hey dude, are you free today?"

> *ugh, work?*

> "Yeah, I gotta come in."

> walk to work

> *it's 10 degrees out*

> *hate winter*

> *wish I had a car*

> at work

> > boss walks up

> > "Hey, you're late!"

> "No, I'm on time."

> > "Clock says 9:05."

> > "You're fired."

> now I'm broke af

> *what do I even do now?*

> *guess I'll live in my car.*

> *meme lord energy*

brought to you by r1-qwen

> just chillin' at home

> *nothing to do*

> *might watch some anime*

> *or play some games*

> *whatever, life's chill*

> then my phone rings

> > "Hey dude, are you free today?"

> *ugh, work?*

> "Yeah, I gotta come in."

> walk to work

> *it's 10 degrees out*

> *hate winter*

> *wish I had a car*

> at work

> > boss walks up

> > "Hey, you're late!"

> "No, I'm on time."

> > "Clock says 9:05."

> > "You're fired."

> now I'm broke af

> *what do I even do now?*

> *guess I'll live in my car.*

> *meme lord energy*

brought to you by r1-qwen

Anonymous 01/20/25(Mon)20:13:36 No.103973894

I have a horrible feeling that even the recent locust plagues will pale in comparison to what is coming to /lmg/ now....

Anonymous 01/20/25(Mon)20:14:05 No.103973899

Anonymous 01/20/25(Mon)20:15:38 No.103973917

>>103972275

>Those vote didnt show up for Kamala when everyone scrutinized

They didn't show up for Kamala because everyone fucking hated Kamala. Biden really got the last laugh when he endorsed that horse-faced bitch. She tanked the whole democrat party, how the FUCK do you lose the popular vote as a democrat?

>Those vote didnt show up for Kamala when everyone scrutinized

They didn't show up for Kamala because everyone fucking hated Kamala. Biden really got the last laugh when he endorsed that horse-faced bitch. She tanked the whole democrat party, how the FUCK do you lose the popular vote as a democrat?

Anonymous 01/20/25(Mon)20:16:04 No.103973921

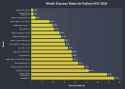

So uh, for those who claim that the deepseek-distill models are working well... what instruct templates are you using? Custom? I don't think ST has anything for their special format

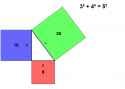

Anonymous 01/20/25(Mon)20:16:19 No.103973925

R1 pipeline visualized

Anonymous 01/20/25(Mon)20:18:29 No.103973948

>>103973925

I wonder what unhinged soul R1-Zero can offer

I wonder what unhinged soul R1-Zero can offer

Anonymous 01/20/25(Mon)20:21:05 No.103973967

>>103973921

They are probably using the chat endpoint.

Or they just created the instruct template manually on silly based on he config files, it's not like that's hard to do.

They are probably using the chat endpoint.

Or they just created the instruct template manually on silly based on he config files, it's not like that's hard to do.

Anonymous 01/20/25(Mon)20:21:18 No.103973970

>>103973925

but who was r1-lite

but who was r1-lite

Anonymous 01/20/25(Mon)20:21:33 No.103973971

>>103973925

oh no is that.... SYNTHETIC DATA??!!? but don't they know that makes the model... LE SLOPPED?!??!

oh no is that.... SYNTHETIC DATA??!!? but don't they know that makes the model... LE SLOPPED?!??!

Anonymous 01/20/25(Mon)20:22:36 No.103973979

Anonymous 01/20/25(Mon)20:24:15 No.103973987

>>103973971

I mean, yeah, yeah it does. Objectively so. It's why we always had test, training and validation datasets with any other data science application, like it or not. It's just that we're really no longer giving a shit in introducing bias because it's more important that the model gets reinforced into logic and cohesion, we don't care about being an unbiased language model anymore.