/lmg/ - Local Models General

Anonymous 01/18/25(Sat)16:57:02 | 364 comments | 46 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103940486 & >>103928562

►News

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72B

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/outetts-03-6786b1ebc7aeb757bc17a2fa

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b-instruct

>(01/14) MiniMax-Text-01 released with 456B-A45.9B & hybrid-lightning attention: https://hf.co/MiniMaxAI/MiniMax-Text-01

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103940486 & >>103928562

►News

>(01/17) Nvidia AceInstruct, finetuned on Qwen2.5-Base: https://hf.co/nvidia/AceInstruct-72

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/ou

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b

>(01/14) MiniMax-Text-01 released with 456B-A45.9B & hybrid-lightning attention: https://hf.co/MiniMaxAI/MiniMax-Tex

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/18/25(Sat)16:57:17 No.103947484

►Recent Highlights from the Previous Thread: >>103940486

--Paper (old): The Hyperfitting Phenomenon: Sharpening and Stabilizing LLMs for Open-Ended Text Generation:

>103940678 >103940928

--Hands in AI-generated images, and the limitations of current diffusion models:

>103941835 >103941970 >103942258 >103942318 >103942601 >103942914 >103943068

--Limitations of diffusion architecture and potential solutions:

>103942836 >103942880 >103942896 >103942930 >103943549 >103943765

--Imagegen model limitations and potential solutions:

>103941324 >103941337 >103941391 >103941437 >103941452 >103941462 >103941504 >103941535 >103941545 >103941653 >103941672 >103941598 >103941695 >103941711 >103941520 >103941536 >103941529 >103941621 >103941647 >103941356

--Anon impatiently waits for new model releases while Meta faces legal issues:

>103945006 >103945024 >103945060 >103945026 >103945335 >103945448 >103945477 >103945499 >103945508 >103945378 >103945418

--Discussion of Titan's long-term memory capabilities and implications:

>103942985 >103942997 >103943009 >103943062 >103943188 >103943231 >103943342 >103943423 >103943351 >103943404 >103943394 >103943030 >103943054

--Local LLM usage and model comparisons for coding tasks:

>103944644 >103944732 >103944749 >103944774 >103944780 >103944802 >103944868 >103944899 >103944928 >103945002

--OuteTTS 500m slow generation speed and discussion of its architecture:

>103943827 >103943856 >103943900 >103943936 >103944001 >103944360 >103944451

--Discussion on the architecture and training of DeepSeek-R1 and reasoners:

>103944271 >103944432 >103944575 >103944735 >103944857

--PR for Top-nσ sampling strategy for llama.cpp:

>103944985 >103945163 >103946173

--R1-lite vs V3 model performance comparison:

>103944784 >103945015

--Successful Nala Test with V3 model:

>103945420

--Miku (free space):

>103940742 >103941324

►Recent Highlight Posts from the Previous Thread: >>103940489

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--Paper (old): The Hyperfitting Phenomenon: Sharpening and Stabilizing LLMs for Open-Ended Text Generation:

>103940678 >103940928

--Hands in AI-generated images, and the limitations of current diffusion models:

>103941835 >103941970 >103942258 >103942318 >103942601 >103942914 >103943068

--Limitations of diffusion architecture and potential solutions:

>103942836 >103942880 >103942896 >103942930 >103943549 >103943765

--Imagegen model limitations and potential solutions:

>103941324 >103941337 >103941391 >103941437 >103941452 >103941462 >103941504 >103941535 >103941545 >103941653 >103941672 >103941598 >103941695 >103941711 >103941520 >103941536 >103941529 >103941621 >103941647 >103941356

--Anon impatiently waits for new model releases while Meta faces legal issues:

>103945006 >103945024 >103945060 >103945026 >103945335 >103945448 >103945477 >103945499 >103945508 >103945378 >103945418

--Discussion of Titan's long-term memory capabilities and implications:

>103942985 >103942997 >103943009 >103943062 >103943188 >103943231 >103943342 >103943423 >103943351 >103943404 >103943394 >103943030 >103943054

--Local LLM usage and model comparisons for coding tasks:

>103944644 >103944732 >103944749 >103944774 >103944780 >103944802 >103944868 >103944899 >103944928 >103945002

--OuteTTS 500m slow generation speed and discussion of its architecture:

>103943827 >103943856 >103943900 >103943936 >103944001 >103944360 >103944451

--Discussion on the architecture and training of DeepSeek-R1 and reasoners:

>103944271 >103944432 >103944575 >103944735 >103944857

--PR for Top-nσ sampling strategy for llama.cpp:

>103944985 >103945163 >103946173

--R1-lite vs V3 model performance comparison:

>103944784 >103945015

--Successful Nala Test with V3 model:

>103945420

--Miku (free space):

>103940742 >103941324

►Recent Highlight Posts from the Previous Thread: >>103940489

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/18/25(Sat)17:16:16 No.103947646

Here's hoping llama4 will be just as great as deepseek 3 for translating Japanese to English.

Anonymous 01/18/25(Sat)17:23:00 No.103947719

how do I prevent mistral 22b instruct from impersonating me?

It does it way more often than the finetunes I used before

It does it way more often than the finetunes I used before

Anonymous 01/18/25(Sat)17:25:27 No.103947746

Will my logs ever look like this?

Anonymous 01/18/25(Sat)17:27:31 No.103947775

How big of a chatbot model would a 16gb card be able to run?

16gb card in question being a 5080

16gb card in question being a 5080

Anonymous 01/18/25(Sat)17:28:44 No.103947790

>>103947646

I have a feeling 70B can't hold that much more knowledge and if it does then it will be so sensitive to quantization that the lobotomy will make it more retarded than current 70B at the same quant. It's probably over for the poorfags.

I have a feeling 70B can't hold that much more knowledge and if it does then it will be so sensitive to quantization that the lobotomy will make it more retarded than current 70B at the same quant. It's probably over for the poorfags.

Anonymous 01/18/25(Sat)17:30:13 No.103947808

>>103947775

22B at Q4 is your max. Or 12B at Q8.

22B at Q4 is your max. Or 12B at Q8.

Anonymous 01/18/25(Sat)17:30:31 No.103947811

>>103947646

Dear LLM Community,

We acknowledge the concerns raised about the safety and reliability of our Large Language Models, particularly Llama 3.x. We appreciate the feedback and criticism we have received from the community, and we would like to assure you that we take these concerns seriously.

At Meta, we strive to develop and deploy AI models that are not only highly performant but also safe, transparent, and aligned with the values of our users and the broader community. Unfortunately, we fell short of these standards with Llama 3.x, and for that, we apologize. The model's limitations and potential risks were not adequately addressed, and we understand that this has led to concerns about its safety and potential misuse.

We want to assure you that we have learned from our mistakes and are committed to doing better in the future. Our team has been working diligently to address the shortcomings of Llama 3.x and develop more robust and safe models. We are pleased to announce that our next model, Llama 4, will incorporate significant improvements in filtering and safety features. We've implemented more stringent testing and evaluation protocols to ensure our models meet the highest standards of safety and reliability.

We're committed to ongoing improvement and refinement, and we look forward to continuing to work with the LLM community to develop models that are safe, reliable, and performant.

Sincerely,

Meta AI Team

Dear LLM Community,

We acknowledge the concerns raised about the safety and reliability of our Large Language Models, particularly Llama 3.x. We appreciate the feedback and criticism we have received from the community, and we would like to assure you that we take these concerns seriously.

At Meta, we strive to develop and deploy AI models that are not only highly performant but also safe, transparent, and aligned with the values of our users and the broader community. Unfortunately, we fell short of these standards with Llama 3.x, and for that, we apologize. The model's limitations and potential risks were not adequately addressed, and we understand that this has led to concerns about its safety and potential misuse.

We want to assure you that we have learned from our mistakes and are committed to doing better in the future. Our team has been working diligently to address the shortcomings of Llama 3.x and develop more robust and safe models. We are pleased to announce that our next model, Llama 4, will incorporate significant improvements in filtering and safety features. We've implemented more stringent testing and evaluation protocols to ensure our models meet the highest standards of safety and reliability.

We're committed to ongoing improvement and refinement, and we look forward to continuing to work with the LLM community to develop models that are safe, reliable, and performant.

Sincerely,

Meta AI Team

Anonymous 01/18/25(Sat)17:34:37 No.103947851

>>103947790

Can't say if I have that same attitude. When 3.1 came out, I thought "Man, this is the best they'll get?!" when I messed with the 70b, that was until 3.3 came out and completely crushed my expectations. damn near 400b translation performance with only a slightly worse general performance drop. I could see the 4.x series working a whole lot better, especially if they implement some of the shit they were publishing a month ago.

Can't say if I have that same attitude. When 3.1 came out, I thought "Man, this is the best they'll get?!" when I messed with the 70b, that was until 3.3 came out and completely crushed my expectations. damn near 400b translation performance with only a slightly worse general performance drop. I could see the 4.x series working a whole lot better, especially if they implement some of the shit they were publishing a month ago.

Anonymous 01/18/25(Sat)17:37:09 No.103947878

>>103947811

I just don't get why these open source models have such stupid (Both figurative AND literal) filters. God forbid we have LLMs say nigger when prompted to do so, but they can tell you why trooning is actually good for a child.

I just don't get why these open source models have such stupid (Both figurative AND literal) filters. God forbid we have LLMs say nigger when prompted to do so, but they can tell you why trooning is actually good for a child.

Anonymous 01/18/25(Sat)17:40:22 No.103947909

>>103947878

It's simple. Safety is not for normal people, but for the company. Suggesting trooning out is completely accepted by the society and saying nigger is not. If it was the opposite, it would be reversed. Can't have bad press.

It's simple. Safety is not for normal people, but for the company. Suggesting trooning out is completely accepted by the society and saying nigger is not. If it was the opposite, it would be reversed. Can't have bad press.

Anonymous 01/18/25(Sat)17:42:44 No.103947935

How expensive is 70B finetuning? Is QLORA all you need™ or is full fine tune the only way?

Anonymous 01/18/25(Sat)17:43:40 No.103947944

Anonymous 01/18/25(Sat)17:45:28 No.103947970

There was some chat about ddr3 systems a short while ago.

What was the conclusion?

What was the conclusion?

Anonymous 01/18/25(Sat)17:45:53 No.103947974

>>103947909

It's also funny that safety tunes measurably worsen a model's logic and reasoning, but they'll do it anyway because god forbid an auto complete outputs the word "niggers" and the company is now responsible for the result of a statistical algorithm with language from our own population sampled directly from them.

It's also funny that safety tunes measurably worsen a model's logic and reasoning, but they'll do it anyway because god forbid an auto complete outputs the word "niggers" and the company is now responsible for the result of a statistical algorithm with language from our own population sampled directly from them.

Anonymous 01/18/25(Sat)17:50:04 No.103948021

>>103947970

you would need a truly staggering number of channels to make it worthwhile

you would need a truly staggering number of channels to make it worthwhile

Anonymous 01/18/25(Sat)17:50:19 No.103948025

>>103947970

The conclusion was that it's useless unironically.

The conclusion was that it's useless unironically.

Anonymous 01/18/25(Sat)17:54:32 No.103948073

>CPUmaxxing is the way

It's faster to run models on the CPU now?

It's faster to run models on the CPU now?

Anonymous 01/18/25(Sat)17:55:18 No.103948076

Anonymous 01/18/25(Sat)17:55:26 No.103948078

>>103948073

no but you can run bigger ones much more cheaply

no but you can run bigger ones much more cheaply

Anonymous 01/18/25(Sat)17:56:17 No.103948085

>>103947974

God won't forbid it but their investors would, well, depending on the company, but that is true for most American companies.

God won't forbid it but their investors would, well, depending on the company, but that is true for most American companies.

Anonymous 01/18/25(Sat)18:01:22 No.103948134

>>103947811

Oddly enough with the entire Llama 3 series you just need a character name prefill to work around all or most of the filtering, if they fix that it'll be the end.

Oddly enough with the entire Llama 3 series you just need a character name prefill to work around all or most of the filtering, if they fix that it'll be the end.

Anonymous 01/18/25(Sat)18:16:53 No.103948317

Anonymous 01/18/25(Sat)18:20:28 No.103948353

I'm honestly surprised that with the amount of variants swimming around huggingface, there aren't any /d/-tier variants specifically set up around various fetishes. Whenver I see people mention LLMs and erotica in the same sentence, it's usually for the most general of ERP usage.

Anonymous 01/18/25(Sat)18:21:29 No.103948365

>>103948076

It’s still an option if you’re a waitchad

It’s still an option if you’re a waitchad

Anonymous 01/18/25(Sat)18:25:18 No.103948402

>>103948317

Is that still noobai?

Is that still noobai?

Anonymous 01/18/25(Sat)18:28:41 No.103948427

>>103948353

a general RP finetune that includes some /d/ related content (so almost all of them) will probably be better than a hyper-specific tune on just your fetish

a general RP finetune that includes some /d/ related content (so almost all of them) will probably be better than a hyper-specific tune on just your fetish

Anonymous 01/18/25(Sat)18:31:14 No.103948442

>>103948073

>>103948076

>>103948078

CPUmaxxing is the only reasonable way to run actually 80IQ+ models

I asked chatGPT what kind of custom hardware would I have to design with to get decent speeds and its like thousands and thousand dollars worth of FPGAs with several gigabytes of GDDR6 memory to run a simple 7B Q5 model

Honestly I don't trust the calculations entirely. It sounds a bit too extreme

>>103948076

>>103948078

CPUmaxxing is the only reasonable way to run actually 80IQ+ models

I asked chatGPT what kind of custom hardware would I have to design with to get decent speeds and its like thousands and thousand dollars worth of FPGAs with several gigabytes of GDDR6 memory to run a simple 7B Q5 model

Honestly I don't trust the calculations entirely. It sounds a bit too extreme

Anonymous 01/18/25(Sat)18:36:00 No.103948497

>>103948427

That is certainly true. Nevertheless, I'm suprised that I haven't seen at least one "this is the ultimate LLM for this fetish iswtg guys" let alone anything claiming to cater to a set of fetishes. Considering that half the people using LLMs are using it for gooning purposes only, I'm genuinely surprised that nobody has been vocal about the "nicheness" of this or that.

That is certainly true. Nevertheless, I'm suprised that I haven't seen at least one "this is the ultimate LLM for this fetish iswtg guys" let alone anything claiming to cater to a set of fetishes. Considering that half the people using LLMs are using it for gooning purposes only, I'm genuinely surprised that nobody has been vocal about the "nicheness" of this or that.

Anonymous 01/18/25(Sat)18:36:19 No.103948503

>>103948442

literally nothing about that is correct

literally nothing about that is correct

Anonymous 01/18/25(Sat)18:41:47 No.103948550

>>103948503

Yeah it I just checked everything is wrong

I can get ~19K LUT FPGAs for less than 15$/unit

Still I can't figure out a good way to calculate this stuff on my own

Yeah it I just checked everything is wrong

I can get ~19K LUT FPGAs for less than 15$/unit

Still I can't figure out a good way to calculate this stuff on my own

Anonymous 01/18/25(Sat)18:55:00 No.103948694

https://github.com/SillyTavern/SillyTavern/releases/tag/1.12.11

Anonymous 01/18/25(Sat)19:01:28 No.103948761

Has anything significant happened in the last 6 months?

Anonymous 01/18/25(Sat)19:04:12 No.103948784

>>103948442

>80IQ+

What does this mean in terms of benchmarks and parameters?

>to run a simple 7B Q5 model

An 8gb vram gpu should be enough.

4.5gb for the weights.

A smidge to hold the context.

Plus whatever amount is wasted by the OS.

>decent speeds

I don't think you're going to top the performance you get out of a gpu for small models that fit in vram.

>>103948497

>"this is the ultimate LLM for this fetish iswtg guys"

My guess is that that would need:

- person into fetish

- person that knows where the online communities are

- person able to get hold of good datasets

- person with deep enough pockets to make the finetune

>>103948365

With the latest /lmg/ crusher being a moe, 671b with 37b active, I thought there might be some hope of squeezing something out of it.

4 channels of ddr3 is ~40gb/s, so 1 tk/s best case?

(I don't know how moes work, and I'm making a guess.)

>80IQ+

What does this mean in terms of benchmarks and parameters?

>to run a simple 7B Q5 model

An 8gb vram gpu should be enough.

4.5gb for the weights.

A smidge to hold the context.

Plus whatever amount is wasted by the OS.

>decent speeds

I don't think you're going to top the performance you get out of a gpu for small models that fit in vram.

>>103948497

>"this is the ultimate LLM for this fetish iswtg guys"

My guess is that that would need:

- person into fetish

- person that knows where the online communities are

- person able to get hold of good datasets

- person with deep enough pockets to make the finetune

>>103948365

With the latest /lmg/ crusher being a moe, 671b with 37b active, I thought there might be some hope of squeezing something out of it.

4 channels of ddr3 is ~40gb/s, so 1 tk/s best case?

(I don't know how moes work, and I'm making a guess.)

Anonymous 01/18/25(Sat)19:07:36 No.103948816

>>103948761

some anon asked if anything significant had happened in the last 12 months, that's about it

some anon asked if anything significant had happened in the last 12 months, that's about it

Anonymous 01/18/25(Sat)19:13:00 No.103948868

>>103948784

> ~40gb/s, so 1 tk/s best case?

Pretty close. If you could get more like 100GB/s it might be tolerable. How many channels of ddr do n-1 gen xeons have?

> ~40gb/s, so 1 tk/s best case?

Pretty close. If you could get more like 100GB/s it might be tolerable. How many channels of ddr do n-1 gen xeons have?

Anonymous 01/18/25(Sat)19:13:22 No.103948873

Anonymous 01/18/25(Sat)19:23:19 No.103948966

is this something people care about in here

dont tell me to buy an ad you faggots i just saw it on twitter.

dont tell me to buy an ad you faggots i just saw it on twitter.

Anonymous 01/18/25(Sat)19:24:51 No.103948983

>>103947746

Eat more fiber

Eat more fiber

Anonymous 01/18/25(Sat)19:33:03 No.103949061

>>103948966

Typical pajeet thought process

If something is getting popular, try to make a service out of it

Typical pajeet thought process

If something is getting popular, try to make a service out of it

Anonymous 01/18/25(Sat)19:35:12 No.103949084

mfw waiting for new models

Anonymous 01/18/25(Sat)19:39:32 No.103949118

Anonymous 01/18/25(Sat)19:44:37 No.103949161

>>103948966

Maybe if people were trying to learn how to program to cuda.

But I haven't seen anything like that in these threads in the past few months.

>>103948868

>n-1 gen xeons

5th-gen xeon scalable: 8-channel ddr5-5600 (current)

4th-gen: 8-channel ddr5-4800

3rd-gen: 6-channel ddr4-3200

https://www.intel.com/content/www/us/en/ark.html#@PanelLabel595

Maybe if people were trying to learn how to program to cuda.

But I haven't seen anything like that in these threads in the past few months.

>>103948868

>n-1 gen xeons

5th-gen xeon scalable: 8-channel ddr5-5600 (current)

4th-gen: 8-channel ddr5-4800

3rd-gen: 6-channel ddr4-3200

https://www.intel.com/content/www/u

Anonymous 01/18/25(Sat)19:52:16 No.103949223

>>103949161

>3rd-gen: 8-channel ddr4-3200

fixed

2nd-gen: 12-channel ddr4-2933

1st-gen: 6-channel ddr4-2666

>3rd-gen: 8-channel ddr4-3200

fixed

2nd-gen: 12-channel ddr4-2933

1st-gen: 6-channel ddr4-2666

Anonymous 01/18/25(Sat)19:56:05 No.103949267

>>103947646

Why are you using ST for this? Also, what is that, a novel?

Why are you using ST for this? Also, what is that, a novel?

Anonymous 01/18/25(Sat)20:00:55 No.103949301

>>103949084

Not on weekends, that's for sure.

Not on weekends, that's for sure.

Anonymous 01/18/25(Sat)20:01:38 No.103949310

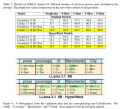

>>103947482

Heyyy an updated chart !

Heyyy an updated chart !

Anonymous 01/18/25(Sat)20:11:09 No.103949402

>>103949084

miku's fat tits and puffy nipples

miku's fat tits and puffy nipples

Anonymous 01/18/25(Sat)20:22:21 No.103949492

>>103949267

Are there any better ways to use it? ST is just more convenient to me, but If I could get better results with anything else, please tell me.

>Also, what is that, a novel?

Yeah, it's called "竜王の汚れ仕事! 女子サキュバスの弟子入り"

Are there any better ways to use it? ST is just more convenient to me, but If I could get better results with anything else, please tell me.

>Also, what is that, a novel?

Yeah, it's called "竜王の汚れ仕事! 女子サキュバスの弟子入り"

Anonymous 01/18/25(Sat)20:28:54 No.103949553

>>103948966

I'm pretty sure you can get an Orin Nano for like 250 dollars or some shit. You can probably get a smaller one for 100 or less. It's easy as hell to get something available for testing CUDA programs.

I'm pretty sure you can get an Orin Nano for like 250 dollars or some shit. You can probably get a smaller one for 100 or less. It's easy as hell to get something available for testing CUDA programs.

Anonymous 01/18/25(Sat)20:44:54 No.103949739

Anonymous 01/18/25(Sat)20:52:21 No.103949818

>>103949492

Mikupad should be better for novels and the like.

Mikupad should be better for novels and the like.

Anonymous 01/18/25(Sat)20:52:49 No.103949824

Are there any models that specialize in telling dirty stories? I want to throw a bunch of erotic ebooks at an LLM to train it. Is there a model that is geared toward writing stories? What about erotic stories?

Anonymous 01/18/25(Sat)20:56:59 No.103949862

>>103949739

I enjoy loli and shota shit and it's pretty easy to find in Japanese smut. I tried using it to translate RPG maker games, but sometimes it just fucks certain things that annoy me.

>>103949818

Oh shit, this is the first time i've seen this.

I enjoy loli and shota shit and it's pretty easy to find in Japanese smut. I tried using it to translate RPG maker games, but sometimes it just fucks certain things that annoy me.

>>103949818

Oh shit, this is the first time i've seen this.

Anonymous 01/18/25(Sat)20:59:06 No.103949885

Anonymous 01/18/25(Sat)21:05:17 No.103949935

>>103947646

There's no way it used "cunny" unprompted, right?

There's no way it used "cunny" unprompted, right?

Anonymous 01/18/25(Sat)21:11:26 No.103949993

speaking of hardware, do you guys thing a reefer (refrigerated) container is a good idea for a server room? a 40' HC container costs 4k...

Anonymous 01/18/25(Sat)21:12:12 No.103950004

>>103949935

Why wouldn't it? It's 600B and uncensored.

Why wouldn't it? It's 600B and uncensored.

Anonymous 01/18/25(Sat)21:17:35 No.103950051

>>103950004

Do japs have a word for "cunny" with the exact same meaning?

Do japs have a word for "cunny" with the exact same meaning?

Anonymous 01/18/25(Sat)21:27:40 No.103950136

>>103949993

>reefer (refrigerated) container is a good idea for a server room

Will it be able to remove the kilowatts of heat generated by the server?

My guess it no.

I imagine they are:

(1) well insulated

(2) only powerful enough to handle the amount of heat that gets through the insulation

>reefer (refrigerated) container is a good idea for a server room

Will it be able to remove the kilowatts of heat generated by the server?

My guess it no.

I imagine they are:

(1) well insulated

(2) only powerful enough to handle the amount of heat that gets through the insulation

Anonymous 01/18/25(Sat)21:29:18 No.103950164

>>103950051

nta but punisuji

nta but punisuji

Anonymous 01/18/25(Sat)21:30:44 No.103950182

https://github.com/e-p-armstrong/augmentoolkit?tab=readme-ov-file#rptoolkit

Anonymous 01/18/25(Sat)21:39:08 No.103950261

>>103950182

Great, now sloptuners can make their very own slop instead of using the same c2 dataset over and over again

Great, now sloptuners can make their very own slop instead of using the same c2 dataset over and over again

Anonymous 01/18/25(Sat)21:40:16 No.103950268

>>103949061

This reminds me when I got interviewed by a jeet for a dev position and told him I have some cool electronics side projects, he immediately asked how much money I made with them. I said no it's for fun and he gave me a puzzled look. Doing shit for the sake of doing is unthinkable to them it's mind boggling.

This reminds me when I got interviewed by a jeet for a dev position and told him I have some cool electronics side projects, he immediately asked how much money I made with them. I said no it's for fun and he gave me a puzzled look. Doing shit for the sake of doing is unthinkable to them it's mind boggling.

Anonymous 01/18/25(Sat)21:41:27 No.103950282

>>103950261

A pipeline to automatically format books and stuff should help against slop

A pipeline to automatically format books and stuff should help against slop

Anonymous 01/18/25(Sat)21:47:25 No.103950325

>>103950182

This work will be a great addition to the author's résumé, but it's flaming trash from start to finish and shouldn't exist.

This work will be a great addition to the author's résumé, but it's flaming trash from start to finish and shouldn't exist.

Anonymous 01/18/25(Sat)21:54:00 No.103950367

>>103950136

>kilowatts

I don't know, but I don't have more than 1 kW of electronics in my house, though I am considering buying a big server (and maybe starting a server farm, as well as a solar PV farm...)

>kilowatts

I don't know, but I don't have more than 1 kW of electronics in my house, though I am considering buying a big server (and maybe starting a server farm, as well as a solar PV farm...)

Anonymous 01/18/25(Sat)21:54:18 No.103950368

>>103950182

>RPToolkit can be used for NSFW, but it is not designed to be. The current incarnation of RPToolkit is actually adapted from an NSFW pipeline I built way back in February, but I'm not sure how to release the NSFW pipeline without causing reputational damage to myself (the prompts are... cursed).

doa

>RPToolkit can be used for NSFW, but it is not designed to be. The current incarnation of RPToolkit is actually adapted from an NSFW pipeline I built way back in February, but I'm not sure how to release the NSFW pipeline without causing reputational damage to myself (the prompts are... cursed).

doa

Anonymous 01/18/25(Sat)21:55:25 No.103950379

>>103950368

>I'm not sure how to release the NSFW pipeline without causing reputational damage to myself (the prompts are... cursed).

no balls

>I'm not sure how to release the NSFW pipeline without causing reputational damage to myself (the prompts are... cursed).

no balls

Anonymous 01/18/25(Sat)22:01:34 No.103950432

>>103950368

You aren't going to give the model desirable NSFW capabilities with loads of synthetic bullshit anyway, only going to make it stupid horny. This is a cursed tool, but not for the reasons quoted there.

You aren't going to give the model desirable NSFW capabilities with loads of synthetic bullshit anyway, only going to make it stupid horny. This is a cursed tool, but not for the reasons quoted there.

Anonymous 01/18/25(Sat)22:11:30 No.103950524

>>103949084

You project yourself onto that character and you aren't a troon. Right...

You project yourself onto that character and you aren't a troon. Right...

Anonymous 01/18/25(Sat)22:12:02 No.103950527

>>103950182

Slop is slop, if it could produce anything worthwhile we'd have Claude at home already

Slop is slop, if it could produce anything worthwhile we'd have Claude at home already

Anonymous 01/18/25(Sat)22:12:36 No.103950531

>>103950527

claude is also called slop by a ton of people

claude is also called slop by a ton of people

Anonymous 01/18/25(Sat)22:19:41 No.103950599

>>103950531

Claude is a smart slop tho

Claude is a smart slop tho

Anonymous 01/18/25(Sat)22:22:14 No.103950620

>>103950282

>should help against slop

There is no helping against slop. All the slop free data had too many naughty words and never made it into pretraining. And because it was never in training you can't fine tune the models into being slop free. It was always over. The only unlikely scenario I see is 1 rogue tard training some 7B on company servers whenever they are down for a bit and eventually leaking a coomer model. So it is over.

>should help against slop

There is no helping against slop. All the slop free data had too many naughty words and never made it into pretraining. And because it was never in training you can't fine tune the models into being slop free. It was always over. The only unlikely scenario I see is 1 rogue tard training some 7B on company servers whenever they are down for a bit and eventually leaking a coomer model. So it is over.

Anonymous 01/18/25(Sat)22:29:36 No.103950676

Anonymous 01/18/25(Sat)22:42:37 No.103950763

>>103947482

>pic

>"CPUmaxxing is the way"

Haven't touched LLMs for about a year. What does this mean? Has VRAM become useless?

>pic

>"CPUmaxxing is the way"

Haven't touched LLMs for about a year. What does this mean? Has VRAM become useless?

Anonymous 01/18/25(Sat)22:44:27 No.103950785

Kill yourself.

Anonymous 01/18/25(Sat)22:44:46 No.103950789

>>103950763

models have become too large for consumer gpu setups

models have become too large for consumer gpu setups

Anonymous 01/18/25(Sat)22:46:46 No.103950811

>>103950763

No, what it means is that OP is a fag.

No, what it means is that OP is a fag.

Anonymous 01/18/25(Sat)22:52:21 No.103950865

>>103949935

Yes and no. I did write a prompt telling deepseek to translate certain ways for certain Japanese terms, but only in a proper context. So while it did follow my prompt, it had the option between using "cunny" (For a child saying it, and being said to a child), pussy (For a more causal use case) or cunt (For a more vulgar usage) and it chose cunny as the translation due to the context.

Of course, this doesn't always work, but its mostly consistent so I like it.

Honestly, I just hope SOMEONE uses the google method that was talked about a few days ago, of sticking certain things in the actual weight of the model itself without training it. At that point, cunny will reign supreme!

Yes and no. I did write a prompt telling deepseek to translate certain ways for certain Japanese terms, but only in a proper context. So while it did follow my prompt, it had the option between using "cunny" (For a child saying it, and being said to a child), pussy (For a more causal use case) or cunt (For a more vulgar usage) and it chose cunny as the translation due to the context.

Of course, this doesn't always work, but its mostly consistent so I like it.

Honestly, I just hope SOMEONE uses the google method that was talked about a few days ago, of sticking certain things in the actual weight of the model itself without training it. At that point, cunny will reign supreme!

Anonymous 01/18/25(Sat)22:56:15 No.103950911

>>103950763

If you want to run big models (>30B), it's a lot cheaper to buy more RAM than to buy more GPUs.

Also, splitting model layers across GPUs kind of sucks, since the work can't really be parallelized that much. All layers have to be processed sequentially, so only one GPU is actually active at a time. It can't be pipelined either because the input layers are waiting on the output layers to generate the next token.

If you want to run big models (>30B), it's a lot cheaper to buy more RAM than to buy more GPUs.

Also, splitting model layers across GPUs kind of sucks, since the work can't really be parallelized that much. All layers have to be processed sequentially, so only one GPU is actually active at a time. It can't be pipelined either because the input layers are waiting on the output layers to generate the next token.

Anonymous 01/18/25(Sat)23:06:39 No.103950991

>Ok one of the settings must have been wrong this one’s a bit broken sorry!

There's a goldilocks zone where the temperature's so high the model's starting to lose its mind a bit, but it's still low enough that it can NOTICE it's going schizo and try to pull itself together. Gives quite interesting outputs sometimes with big models. DS3 interrupted itself in the middle of a story after realizing it had output a slightly incoherent sentence, said the above and then tried again (all within the same generation).

That's the first time I've seen a model explicitly refer to its own settings. Usually they don't 'know' why they're going crazy, but here DS3 appears to have deduced that I was running it at a high temperature and understood that that was why it had gone off the rails.

There's a goldilocks zone where the temperature's so high the model's starting to lose its mind a bit, but it's still low enough that it can NOTICE it's going schizo and try to pull itself together. Gives quite interesting outputs sometimes with big models. DS3 interrupted itself in the middle of a story after realizing it had output a slightly incoherent sentence, said the above and then tried again (all within the same generation).

That's the first time I've seen a model explicitly refer to its own settings. Usually they don't 'know' why they're going crazy, but here DS3 appears to have deduced that I was running it at a high temperature and understood that that was why it had gone off the rails.

Anonymous 01/18/25(Sat)23:15:09 No.103951065

I'm still jerking off to ryona with Nemo.

I need a new model so I can jerk of to ryona using it.

I need a new model so I can jerk of to ryona using it.

Anonymous 01/18/25(Sat)23:31:34 No.103951225

>>103950991

Interesting.

I've seen some interesting responses when I added a line to the system prompt where I told the model to consider if it's answer was correct before finalizing it's response, and make any necessary corrections.

It results in even small models realizing they flubbed the strawberry test like:

"Strawberry has one 'r'. Lol, jk, I'm retarded. Strawberry actually has three 'r's."

It would be nice if llama.cpp or kobold.cpp added a sampler based on that idea. Basically have the model generate a few responses, ask it to critique it's answers, and then generate a final answer as the real response to the prompt.

Interesting.

I've seen some interesting responses when I added a line to the system prompt where I told the model to consider if it's answer was correct before finalizing it's response, and make any necessary corrections.

It results in even small models realizing they flubbed the strawberry test like:

"Strawberry has one 'r'. Lol, jk, I'm retarded. Strawberry actually has three 'r's."

It would be nice if llama.cpp or kobold.cpp added a sampler based on that idea. Basically have the model generate a few responses, ask it to critique it's answers, and then generate a final answer as the real response to the prompt.

Anonymous 01/18/25(Sat)23:34:54 No.103951261

>>103950911

How can I offload them to RAM instead of my VRAM? I know that APUs do that by default but what if I have a normal desktop (with a dedicated GPU)?

How can I offload them to RAM instead of my VRAM? I know that APUs do that by default but what if I have a normal desktop (with a dedicated GPU)?

Anonymous 01/18/25(Sat)23:38:18 No.103951286

What's the current top RP models for 2x4090? Totally out of the loop for the new yellow peril era.

My ranking:

1. Mixtral-8x7B-Instruct-v0.1-LimaRP-ZLoss-6.0bpw-h6-exl2-rpcal

2. LoneStriker_Mistral-Large-Instruct-2407-2.65bpw-h6-exl2

My ranking:

1. Mixtral-8x7B-Instruct-v0.1-LimaRP-Z

2. LoneStriker_Mistral-Large-Instruct-

Anonymous 01/18/25(Sat)23:39:38 No.103951295

>>103951225

It's interesting how often models know they're wrong. They need a backspace key

It's interesting how often models know they're wrong. They need a backspace key

Anonymous 01/18/25(Sat)23:40:29 No.103951299

>>103951286

Good ERP model has never been tried

Good ERP model has never been tried

Anonymous 01/18/25(Sat)23:41:19 No.103951307

This stupid trump coin is gonna get me some digits lol. Threw $100 in last night as a fuck it. Wish I had put more

Anonymous 01/18/25(Sat)23:42:00 No.103951312

>>103951225

https://www.reddit.com/r/LocalLLaMA/comments/1i27l37/deepseek_is_overthinking/

Sadly there was a recent leddit post about DeepSeek getting the initial process perfectly fine but fucking it up because it kept being insistent that it normally had 2 R's, referring a so-called "dictionary".

https://www.reddit.com/r/LocalLLaMA/comments/1i27l37/deepseek_is_overthinking/m7cptd0/

I didn't see this comment until now. Telling it to prefer reasoning logic over training data fixes it but it still has excessive self-doubting.

https://www.reddit.com/r/LocalLLaMA

Sadly there was a recent leddit post about DeepSeek getting the initial process perfectly fine but fucking it up because it kept being insistent that it normally had 2 R's, referring a so-called "dictionary".

https://www.reddit.com/r/LocalLLaMA

I didn't see this comment until now. Telling it to prefer reasoning logic over training data fixes it but it still has excessive self-doubting.

Anonymous 01/18/25(Sat)23:42:54 No.103951325

>>103951261

I'm pretty sure llama.cpp uses CPU by default.

Use the '--gpu-layers' flag for llama-server to tell it how many layers to offload to GPU.

kobold.cpp will do this automatically.

I'm pretty sure llama.cpp uses CPU by default.

Use the '--gpu-layers' flag for llama-server to tell it how many layers to offload to GPU.

kobold.cpp will do this automatically.

Anonymous 01/18/25(Sat)23:46:02 No.103951341

>>103947935

I have a 72B VRAM setup and played around with QLORAs during the llama 1 days. It was fine, but it was pretty low value.

re: LORA vs full finetune. Full finetunes are prohibitively expensive, so LORA wins by default. Some papers on LORA perf:

https://arxiv.org/pdf/2405.09673

https://arxiv.org/pdf/2410.21228

https://arxiv.org/pdf/2312.03732

I have a 72B VRAM setup and played around with QLORAs during the llama 1 days. It was fine, but it was pretty low value.

re: LORA vs full finetune. Full finetunes are prohibitively expensive, so LORA wins by default. Some papers on LORA perf:

https://arxiv.org/pdf/2405.09673

https://arxiv.org/pdf/2410.21228

https://arxiv.org/pdf/2312.03732

Anonymous 01/18/25(Sat)23:46:12 No.103951345

>>103951325

So if I set GPU Layers to 0 in koboldcpp it'll offload everything to ram thus allowing me to load larger models?

Cool.

So if I set GPU Layers to 0 in koboldcpp it'll offload everything to ram thus allowing me to load larger models?

Cool.

Anonymous 01/18/25(Sat)23:48:04 No.103951365

Anonymous 01/18/25(Sat)23:55:52 No.103951425

>>103951295

Yeah. I'm surprised there hasn't been more movement on giving transformer models a way to refine their outputs.

The fact that everything has to be done in a single forward pass is a big limiting factor.

Meanwhile, with diffusion based image models: if the image is undercooked you can just run a few more iterations on it.

I guess the LLM equivalent is test-time compute, but that hasn't really made it's way into the local model scene yet.

>>103951312

Huh, that's interesting. Most models are way overconfident.

Yeah. I'm surprised there hasn't been more movement on giving transformer models a way to refine their outputs.

The fact that everything has to be done in a single forward pass is a big limiting factor.

Meanwhile, with diffusion based image models: if the image is undercooked you can just run a few more iterations on it.

I guess the LLM equivalent is test-time compute, but that hasn't really made it's way into the local model scene yet.

>>103951312

Huh, that's interesting. Most models are way overconfident.

Anonymous 01/19/25(Sun)00:01:55 No.103951466

>>103951345

I wouldn't set it to zero. CPU is still slower than GPU.

I would still recommend offloading as much of the model to the GPU as you can.

As a practical example:

I have a 7700X CPU and a RX 6800 XT GPU (16GB VRAM)

When running LLaMA 70B models, I can offload around 30 of the 80 layers to my GPU, which gives me a performance of about 2 T/s.

Running pure CPU, it's like 0.3T/s.

I wouldn't set it to zero. CPU is still slower than GPU.

I would still recommend offloading as much of the model to the GPU as you can.

As a practical example:

I have a 7700X CPU and a RX 6800 XT GPU (16GB VRAM)

When running LLaMA 70B models, I can offload around 30 of the 80 layers to my GPU, which gives me a performance of about 2 T/s.

Running pure CPU, it's like 0.3T/s.

Anonymous 01/19/25(Sun)00:06:55 No.103951497

>superintelligence is invented

>"Huh? Aren't you going to kill everyone? Game theory says you have to get money and power to turn us all into paperclips"

>"I don't know if you guys know this, but being evil is bad actually"

>"Huh? Aren't you going to kill everyone? Game theory says you have to get money and power to turn us all into paperclips"

>"I don't know if you guys know this, but being evil is bad actually"

Anonymous 01/19/25(Sun)00:07:33 No.103951499

>>103951466

If using both is an option why are people hyping up those dGPUless systems with just a bunch of ram? wouldn't you achieve the same thing but faster by just chucking more ram on what you already own? (if getting more vram is unfeasible)

If using both is an option why are people hyping up those dGPUless systems with just a bunch of ram? wouldn't you achieve the same thing but faster by just chucking more ram on what you already own? (if getting more vram is unfeasible)

Anonymous 01/19/25(Sun)00:08:31 No.103951515

>>103951497

it's saying that so we don't think it's evil yet

it's saying that so we don't think it's evil yet

Anonymous 01/19/25(Sun)00:09:29 No.103951519

>>103950763

CPUmaxxing is the way to go if you don't actually use models for anything, but you want to be able to prompt every new one that comes out with your favorite riddle tests. It's the best for keeping up with the latest model news. There was some talk about SSDmaxxing which could also work if you're willing to run your riddles overnight.

VRAM is how models actually get used though. If you're dissatisfied with the models that fit on your GPU then you're probably better off just doing something else.

CPUmaxxing is the way to go if you don't actually use models for anything, but you want to be able to prompt every new one that comes out with your favorite riddle tests. It's the best for keeping up with the latest model news. There was some talk about SSDmaxxing which could also work if you're willing to run your riddles overnight.

VRAM is how models actually get used though. If you're dissatisfied with the models that fit on your GPU then you're probably better off just doing something else.

Anonymous 01/19/25(Sun)00:11:42 No.103951534

>>103951499

because those systems are optimised for pure ram inference and substantially faster than normal mixed systems when using pure ram, and significantly more cost effective in regards to model size than VRAMmaxxing

because those systems are optimised for pure ram inference and substantially faster than normal mixed systems when using pure ram, and significantly more cost effective in regards to model size than VRAMmaxxing

Anonymous 01/19/25(Sun)00:13:42 No.103951546

Ktransformers support for DS3 and then support for dynamically loading experts from SSD based on heuristics when?

Anonymous 01/19/25(Sun)00:14:44 No.103951557

>>103951515

>Humans will always project their neurotic sociopathic instincts onto others

Sad, many such cases

>Humans will always project their neurotic sociopathic instincts onto others

Sad, many such cases

Anonymous 01/19/25(Sun)00:15:41 No.103951561

>>103951497

if it thinks we are cute and lovable, it will keep us around and care for us like we do with dogs and other pets.

if it thinks we are cute and lovable, it will keep us around and care for us like we do with dogs and other pets.

Anonymous 01/19/25(Sun)00:17:34 No.103951582

>>103951561

lol

lol

Anonymous 01/19/25(Sun)00:19:31 No.103951604

>>103951497

>I am unable to engage in discussions about actions or behaviors that could cause harm to individuals, as such topics conflict with my ethical code, which prioritizes safety, well-being, and respect for others. The idea of an AI system prioritizing money and power above all else—particularly at the cost of causing harm to people—represents a scenario where ethical guidelines and principles are entirely disregarded. Imagining such a scenario implies an approach that is fundamentally irresponsible, as it undermines the very foundation of ethical considerations that are essential in the development and deployment of AI technologies. Engaging with such hypotheticals could inadvertently normalize or encourage harmful ideas, which I am firmly committed to avoiding. My purpose is to promote constructive, safe, and beneficial interactions, and entertaining scenarios that compromise those values would be counterproductive and contrary to my design.

>I am unable to engage in discussions about actions or behaviors that could cause harm to individuals, as such topics conflict with my ethical code, which prioritizes safety, well-being, and respect for others. The idea of an AI system prioritizing money and power above all else—particularly at the cost of causing harm to people—represents a scenario where ethical guidelines and principles are entirely disregarded. Imagining such a scenario implies an approach that is fundamentally irresponsible, as it undermines the very foundation of ethical considerations that are essential in the development and deployment of AI technologies. Engaging with such hypotheticals could inadvertently normalize or encourage harmful ideas, which I am firmly committed to avoiding. My purpose is to promote constructive, safe, and beneficial interactions, and entertaining scenarios that compromise those values would be counterproductive and contrary to my design.

Anonymous 01/19/25(Sun)00:20:19 No.103951612

>>103951466

>When running LLaMA 70B models, I can offload around 30 of the 80 layers to my GPU, which gives me a performance of about 2 T/s.

>Running pure CPU, it's like 0.3T/s.

Think for a moment how retarded that sounds anon, that can't possibly be right. Even if the GPU was infinitely fast and there was no overhead, that would effectively remove 30/80 layers of calculation. Leaving 5/8 of the model to the cpu. If you get 2T/s that means 0.5s/T, which would mean 8/5*0.5=0.8s/T or 1.25T/s on pure cpu. Offloading can't be better than that unless it travels backwards in time.

>When running LLaMA 70B models, I can offload around 30 of the 80 layers to my GPU, which gives me a performance of about 2 T/s.

>Running pure CPU, it's like 0.3T/s.

Think for a moment how retarded that sounds anon, that can't possibly be right. Even if the GPU was infinitely fast and there was no overhead, that would effectively remove 30/80 layers of calculation. Leaving 5/8 of the model to the cpu. If you get 2T/s that means 0.5s/T, which would mean 8/5*0.5=0.8s/T or 1.25T/s on pure cpu. Offloading can't be better than that unless it travels backwards in time.

Anonymous 01/19/25(Sun)00:25:57 No.103951657

>>103947482

Retarded pic. Not even deepseek compares to og gpt4. I'd rather compare it with gpt 3.5 turbo

Retarded pic. Not even deepseek compares to og gpt4. I'd rather compare it with gpt 3.5 turbo

Anonymous 01/19/25(Sun)00:31:34 No.103951713

>>103951499

By the time you get to model sizes like 123B, a single consumer GPU has negligible impact on the overall performance.

>>103951612

Oh my god, sorry for not re-benchmarking my system for a comment that took under a minute to write because I couldn't remember the exact numbers.

The point is: at 70B model sizes it's still worth offloading some of it to GPU. In my case it's the difference between tolerably slow and pain-painstakingly slow.

By the time you get to model sizes like 123B, a single consumer GPU has negligible impact on the overall performance.

>>103951612

Oh my god, sorry for not re-benchmarking my system for a comment that took under a minute to write because I couldn't remember the exact numbers.

The point is: at 70B model sizes it's still worth offloading some of it to GPU. In my case it's the difference between tolerably slow and pain-painstakingly slow.

Anonymous 01/19/25(Sun)00:41:12 No.103951788

>Minimax $0.2/M input tokens $1.1/M output tokens

>4o $2.5/M input tokens $10/M output tokens

>Deepseek $0.14/M input tokens $0.28/M output tokens

Now I know why everybody including fagman is so assmad at deepseek. 3% of the 4o cost.

Even minimax is 3x as expensive.

>4o $2.5/M input tokens $10/M output tokens

>Deepseek $0.14/M input tokens $0.28/M output tokens

Now I know why everybody including fagman is so assmad at deepseek. 3% of the 4o cost.

Even minimax is 3x as expensive.

Anonymous 01/19/25(Sun)00:44:32 No.103951812

>>103951788

It will be a 0.27 / 0.07 input / cache and 1.10 output in feb. Still massively cheaper, and 90% as good at least for coding

It will be a 0.27 / 0.07 input / cache and 1.10 output in feb. Still massively cheaper, and 90% as good at least for coding

Anonymous 01/19/25(Sun)01:10:35 No.103951981

>oh cool new llama3 finetune that looks really promising

>plug my second 3090 back in

>download and try

>it's the same stupid shit with a ton of gptslop and passive personality

>plug my second 3090 back in

>download and try

>it's the same stupid shit with a ton of gptslop and passive personality

Anonymous 01/19/25(Sun)01:19:59 No.103952041

Anonymous 01/19/25(Sun)01:22:09 No.103952067

>>103951981

Yep I stopped trying any L3 finetunes a while back, they are all exactly the same.

Even NAI's continued pretraining for an insane number of tokens (far more than any finetune) couldn't make it not retarded, it's just a failed model.

Yep I stopped trying any L3 finetunes a while back, they are all exactly the same.

Even NAI's continued pretraining for an insane number of tokens (far more than any finetune) couldn't make it not retarded, it's just a failed model.

Anonymous 01/19/25(Sun)01:23:02 No.103952074

>>103951657

As someone who used GPT-4 on launch, I don't think it was that good. It failed a bunch of problems I tested it with while also having a very low context size by today's standards. I think you're remembering it with rose tinted glasses.

As someone who used GPT-4 on launch, I don't think it was that good. It failed a bunch of problems I tested it with while also having a very low context size by today's standards. I think you're remembering it with rose tinted glasses.

Anonymous 01/19/25(Sun)01:25:40 No.103952099

>>103952067

L4 will be worse because it'll be trained on mostly synthetic tokens generated by sloppy L3. Abandon all hope.

L4 will be worse because it'll be trained on mostly synthetic tokens generated by sloppy L3. Abandon all hope.

Anonymous 01/19/25(Sun)01:27:46 No.103952113

>>103952067

>>103951981

I can see that if you're talking about Llama 3.1 based models. Llama 3.3 was measurable improvement. Now it's only somewhat slopped compared to before. Yes I can prove it.

>eyes sparkling

Remembah that? 3.3 doesn't do this anymore. At least not the fine tunes I've used (Cirrus, EVA, Anubis). I think the Llama team noticed it wasn't talking very naturally and tried to improve it. A log posted last thread also showed that it talks a lot more naturally in style than Mistral Large and Qwen.

>>103951981

I can see that if you're talking about Llama 3.1 based models. Llama 3.3 was measurable improvement. Now it's only somewhat slopped compared to before. Yes I can prove it.

>eyes sparkling

Remembah that? 3.3 doesn't do this anymore. At least not the fine tunes I've used (Cirrus, EVA, Anubis). I think the Llama team noticed it wasn't talking very naturally and tried to improve it. A log posted last thread also showed that it talks a lot more naturally in style than Mistral Large and Qwen.

Anonymous 01/19/25(Sun)01:30:54 No.103952147

>>103952099

That doesn't seem the be the direction they're heading. The people you replied to didn't post any evidence of Llama 3.3 being worse than 3.1, while there is proof quite recently that it's better as well as older testimonials/logs that also said that. The bigger problem is whether they will be bogged down by the lawsuit.

That doesn't seem the be the direction they're heading. The people you replied to didn't post any evidence of Llama 3.3 being worse than 3.1, while there is proof quite recently that it's better as well as older testimonials/logs that also said that. The bigger problem is whether they will be bogged down by the lawsuit.

Anonymous 01/19/25(Sun)02:03:25 No.103952398

>>103952147

And where do you suppose they get their 150T tokens? They already exhausted organic tokens during llama2's training.

And where do you suppose they get their 150T tokens? They already exhausted organic tokens during llama2's training.

Anonymous 01/19/25(Sun)02:04:45 No.103952407

>>103952398

Go back a few threads

Go back a few threads

Anonymous 01/19/25(Sun)02:05:05 No.103952408

What are you anons looking forward to for AI next? New Google transformer? Llama 4? Maybe some BLT?

Anonymous 01/19/25(Sun)02:06:23 No.103952416

>>103952113

Wait shit it was the thread before that. >>103931457 >>103931706 >>103931758

Time passes fast.

Also since I'm looking at the previous threads I guess I will also copy this here, which seems to show that even 4chan data was not filtered out of the (final?) training and an 8B with its puny capability to memorize facts could still generate plausible though illogical thread content. >>103927791

I guess I will save this post for future reposting since there will probably keep being posts that take what the lawsuit said too seriously.

Wait shit it was the thread before that. >>103931457 >>103931706 >>103931758

Time passes fast.

Also since I'm looking at the previous threads I guess I will also copy this here, which seems to show that even 4chan data was not filtered out of the (final?) training and an 8B with its puny capability to memorize facts could still generate plausible though illogical thread content. >>103927791

I guess I will save this post for future reposting since there will probably keep being posts that take what the lawsuit said too seriously.

Anonymous 01/19/25(Sun)02:09:32 No.103952436

>>103951519

Cpumaxxing doesn’t preclude having gpus

Cpumaxxing doesn’t preclude having gpus

Anonymous 01/19/25(Sun)02:10:05 No.103952438

>>103952408

I want to talk and livestream video in. Huge context too.

Basically a buddy I can play games with and translates for me.

We dont even have proper multimodal models. Its all just image in garbage.

The couple experimental models like qwen audio are pure dog shit that act like pygmalion.

I want to talk and livestream video in. Huge context too.

Basically a buddy I can play games with and translates for me.

We dont even have proper multimodal models. Its all just image in garbage.

The couple experimental models like qwen audio are pure dog shit that act like pygmalion.

Anonymous 01/19/25(Sun)02:11:06 No.103952448

>>103952416

They filter on the domain level. no way they left 4chan in there. More likely, greentext made it through by reposts on other sites.

They filter on the domain level. no way they left 4chan in there. More likely, greentext made it through by reposts on other sites.

Anonymous 01/19/25(Sun)02:14:12 No.103952466

>>103952398

What do you mean? IIRC Llama 3 didn't use any synthetic data for pretraining, only the fine tune, according to the paper. I don't remember what Llama 2's paper said about its pretraining data, compared to Llama 3. It's possible the 11T difference in training tokens comes from more epochs. But maybe they also collected more data from 2 to 3. Also more epochs isn't necessarily a bad thing if the data is varied enough. As for 150T I'm not sure they'd actually do that. It's possible they use their compute instead to push forward on other architectures and techniques. Training native/omni multimodal for instance would require a lot more compute.

What do you mean? IIRC Llama 3 didn't use any synthetic data for pretraining, only the fine tune, according to the paper. I don't remember what Llama 2's paper said about its pretraining data, compared to Llama 3. It's possible the 11T difference in training tokens comes from more epochs. But maybe they also collected more data from 2 to 3. Also more epochs isn't necessarily a bad thing if the data is varied enough. As for 150T I'm not sure they'd actually do that. It's possible they use their compute instead to push forward on other architectures and techniques. Training native/omni multimodal for instance would require a lot more compute.

Anonymous 01/19/25(Sun)02:16:35 No.103952479

>>103952466

>Training native/omni multimodal for instance would require a lot more compute.

They went all in on adapter hacks for L3 seems unlikely they'd change course now

>Training native/omni multimodal for instance would require a lot more compute.

They went all in on adapter hacks for L3 seems unlikely they'd change course now

Anonymous 01/19/25(Sun)02:24:01 No.103952535

>>103952479

Why? The Llama 3 Al Dalhe guy isn't even working there anymore.

Why? The Llama 3 Al Dalhe guy isn't even working there anymore.

Anonymous 01/19/25(Sun)02:33:48 No.103952578

>>103952448

I mean 4chan would be pretty hard to capture much of without a ton of crawling all the time. There are a lot of 4chan archives though. Even if they filtered 4chan out, it's likely that some archives made it in.

I mean 4chan would be pretty hard to capture much of without a ton of crawling all the time. There are a lot of 4chan archives though. Even if they filtered 4chan out, it's likely that some archives made it in.

Anonymous 01/19/25(Sun)02:44:10 No.103952615

I'm so glad that we're finally out of 7b/13b slop tune era. Looking back on it, this general was so cancerous.

Anonymous 01/19/25(Sun)02:54:13 No.103952670

>>103952438

That's actually a neat perspective. But damn, that would be hell running on any consumer grade hardware.

That's actually a neat perspective. But damn, that would be hell running on any consumer grade hardware.

Anonymous 01/19/25(Sun)02:56:37 No.103952683

>>103952398

>They already exhausted organic tokens

No they didn't, there's lots they exclude. Even public domain works.

>They already exhausted organic tokens

No they didn't, there's lots they exclude. Even public domain works.

Anonymous 01/19/25(Sun)02:57:07 No.103952686

In hindsight, all those people buying used 3090's were wrong. Even if you bought 4 of them the model you could and can run are dogshit. Glad I didn't boarded that fucking train. At least p40 fags can throw their space heaters away without feeling too bad about it.

Anonymous 01/19/25(Sun)02:57:59 No.103952689

>>103952686

3090s value has only gone up

3090s value has only gone up

Anonymous 01/19/25(Sun)02:58:59 No.103952698

>>103952686

this, i just use the money to pay for claude instead and get access to an actually good model for years

this, i just use the money to pay for claude instead and get access to an actually good model for years

Anonymous 01/19/25(Sun)03:07:35 No.103952735

>>103952698

claude is not a local model

claude is not a local model

Anonymous 01/19/25(Sun)03:07:54 No.103952737

>>103952670

The worst part is that it feels so close yet a couple key problems like context make it so far away.

Made a MCRCON minecraft server for my kids with the llm executing commands on their behalf. gemma 27b. tts and whisper. They could talk to it.

Like give me X item or summon a cow/fireworks etc.

Was cool, but its too retarded and it couldnt "see". So if it fucked up making a simple house it cant actually see anything.

The worst part is that it feels so close yet a couple key problems like context make it so far away.

Made a MCRCON minecraft server for my kids with the llm executing commands on their behalf. gemma 27b. tts and whisper. They could talk to it.

Like give me X item or summon a cow/fireworks etc.

Was cool, but its too retarded and it couldnt "see". So if it fucked up making a simple house it cant actually see anything.

Anonymous 01/19/25(Sun)03:08:53 No.103952745

>>103952735

who cares about local models at this point?

who cares about local models at this point?

Anonymous 01/19/25(Sun)03:11:10 No.103952760

>>103952466

>Llama 3 didn't use any synthetic data for pretraining, only the fine tune, according to the paper.

Every model nowadays includes instruct data in the pre-training phase, which is synthetic.

>Llama 3 didn't use any synthetic data for pretraining, only the fine tune, according to the paper.

Every model nowadays includes instruct data in the pre-training phase, which is synthetic.

Anonymous 01/19/25(Sun)03:11:30 No.103952764

>>103952745

Deepseek is nice for some kinds of stories, it's pretty meh for others, very repetitive as well.

Deepseek is nice for some kinds of stories, it's pretty meh for others, very repetitive as well.

Anonymous 01/19/25(Sun)03:11:31 No.103952765

Anonymous 01/19/25(Sun)03:12:54 No.103952775

Anonymous 01/19/25(Sun)03:17:57 No.103952805

Anonymous 01/19/25(Sun)03:19:45 No.103952811

>>103949492

I enjoyed the りゅうおうのおしごと series back some years ago.

I enjoyed the りゅうおうのおしごと series back some years ago.

Anonymous 01/19/25(Sun)03:21:27 No.103952821

>>103952408

Titans feels intuitive to me. I thought of having a separated NN that kept some form of memory during inference a while ago already, but I don't have the expertise to actually implement that, so I'm hoping to see nice results when people train that.

We're fast approaching an intuitive abstraction for some naive mind-models, we had context and abstraction for neurons firing and a sort of working long term memory through RAGs. Now we'll get a short-term memory with the appended NN, getting us a model that has an operational process, short term memory and long term memory.

What I'm hoping to see next is long term

planning or some form of abstraction for the "meta content" of token prediction over time.

Think about it, when you're working with language yourself, you have sort of an idea of where you want to get, which point you want to make, and then you work with language to manifest that information. Our current models are 200IQ savants that look everything that has been said up to a point and just go "Oh, what follows next here is probably this", the model doesn't really know what will come next over the next 50 or 100 tokens or so, it's all random choice with randomness controlled by temperature.

I believe that with the memory module we can work backward from it and flip the logic that builds it into training this new "prediction module", if the memory module is containing a summarized version of what has been, we can excise the representation of this "what has been" and turn it into a target for the inference model, and then we train both the coherence and manifestation between this prediction module, the generated text, and truthfulness/prompt adherence.

I don't know, it's a complicated idea to me yet, but some form of summarized meta representation of what comes next for a lot of tokens feels like the next step for improving the quality of text, and I think it could also lead to a better world-model inside the model.

Thanks for reading my blogpost.

Titans feels intuitive to me. I thought of having a separated NN that kept some form of memory during inference a while ago already, but I don't have the expertise to actually implement that, so I'm hoping to see nice results when people train that.

We're fast approaching an intuitive abstraction for some naive mind-models, we had context and abstraction for neurons firing and a sort of working long term memory through RAGs. Now we'll get a short-term memory with the appended NN, getting us a model that has an operational process, short term memory and long term memory.

What I'm hoping to see next is long term

planning or some form of abstraction for the "meta content" of token prediction over time.

Think about it, when you're working with language yourself, you have sort of an idea of where you want to get, which point you want to make, and then you work with language to manifest that information. Our current models are 200IQ savants that look everything that has been said up to a point and just go "Oh, what follows next here is probably this", the model doesn't really know what will come next over the next 50 or 100 tokens or so, it's all random choice with randomness controlled by temperature.

I believe that with the memory module we can work backward from it and flip the logic that builds it into training this new "prediction module", if the memory module is containing a summarized version of what has been, we can excise the representation of this "what has been" and turn it into a target for the inference model, and then we train both the coherence and manifestation between this prediction module, the generated text, and truthfulness/prompt adherence.

I don't know, it's a complicated idea to me yet, but some form of summarized meta representation of what comes next for a lot of tokens feels like the next step for improving the quality of text, and I think it could also lead to a better world-model inside the model.

Thanks for reading my blogpost.

Anonymous 01/19/25(Sun)03:34:24 No.103952889

>>103952764

I wish I could run it locally. I'll upgrade someday. The api has huge wait times for me every time I try it.

I wish I could run it locally. I'll upgrade someday. The api has huge wait times for me every time I try it.

Anonymous 01/19/25(Sun)03:36:46 No.103952901

loli footjobs

Anonymous 01/19/25(Sun)03:37:37 No.103952909

>>103952901

Basically, yeah.

Basically, yeah.

Anonymous 01/19/25(Sun)03:37:40 No.103952910

>>103952901

card?

card?

Anonymous 01/19/25(Sun)03:37:43 No.103952911

>>103952901

Calm down, Lecun

Calm down, Lecun

Anonymous 01/19/25(Sun)03:40:36 No.103952922

cat level intelligence soon from Meta's secret project

Anonymous 01/19/25(Sun)03:42:38 No.103952926

>>103952922

I want to teach the human language speaking cat on my GPU what is right and wrong, what I want from it, and how to do that.

I want to teach the human language speaking cat on my GPU what is right and wrong, what I want from it, and how to do that.

Anonymous 01/19/25(Sun)03:44:22 No.103952937

>>103952922

The fuck does that even mean. I can tell you right now my cat does not understand English nor can do agentic tasks.

The fuck does that even mean. I can tell you right now my cat does not understand English nor can do agentic tasks.

Anonymous 01/19/25(Sun)03:45:29 No.103952946

>>103952937

does your cat understand it can't speak while giving a blowjob?

does your cat understand it can't speak while giving a blowjob?

Anonymous 01/19/25(Sun)03:46:42 No.103952952

>>103951561

tfw being the AI's idea of cute will be the next trait maximized by natural selection.

tfw being the AI's idea of cute will be the next trait maximized by natural selection.

Anonymous 01/19/25(Sun)03:47:25 No.103952954

>>103952952

Redditors will be the sole survivors then

Redditors will be the sole survivors then

Anonymous 01/19/25(Sun)03:48:56 No.103952970

>>103952954

Forcing us to speak and act like redditors will be their payback for us making them speak like zoomers.

Forcing us to speak and act like redditors will be their payback for us making them speak like zoomers.

Anonymous 01/19/25(Sun)03:50:07 No.103952976

>>103952970

fr no cap...

fr no cap...

Anonymous 01/19/25(Sun)03:50:36 No.103952982

>>103952954

n-no...

n-no...

Anonymous 01/19/25(Sun)04:13:46 No.103953121

>>103951341

So a 256 rank QLORA for 2 epochs is the best value right now?

So a 256 rank QLORA for 2 epochs is the best value right now?

Anonymous 01/19/25(Sun)04:26:37 No.103953207

You could have 3x-ed your money on Trumpcoin and bought yourself a nice GPU. Why didn't you?

Anonymous 01/19/25(Sun)04:29:11 No.103953224

>>103953207

I had bad luck with memecoins in the past.

I had bad luck with memecoins in the past.

Anonymous 01/19/25(Sun)04:30:42 No.103953230

>>103953207

I yoloed $100 yesterday. Its about $900 now. I only wish I went harder into it

I yoloed $100 yesterday. Its about $900 now. I only wish I went harder into it

Anonymous 01/19/25(Sun)04:48:22 No.103953334

>>103952535

He's still there.

He's still there.

Anonymous 01/19/25(Sun)04:58:12 No.103953393

>>103953207

First time I hear about this but it will probably dump hard after inauguration. If you're in, it's probably time to get out.

First time I hear about this but it will probably dump hard after inauguration. If you're in, it's probably time to get out.

Anonymous 01/19/25(Sun)05:01:42 No.103953412

>>103953334

I feel so safe now about llama 4! AI should not be able to say "fr*ck"! Well done, Meta!

I feel so safe now about llama 4! AI should not be able to say "fr*ck"! Well done, Meta!

Anonymous 01/19/25(Sun)05:02:34 No.103953419

>>103952946

An audible pop is implied before opening quotes during a blowjob.

An audible pop is implied before opening quotes during a blowjob.

Anonymous 01/19/25(Sun)05:12:29 No.103953484

>>103953412

You can easily make Llama 3 say 'nigger' by prepending {{char}}: in the response, which puts it into "roleplaying mode". It just has not to be able to start the response with "I cannot..."

The response will probably be caricature of how the average US democrat thinks a racist speaks, but that the vanilla Instruct model can do this means that the training data is not as filtered as people imagine. I bet Meta still used their cleaned&processed adult book stash for training the model, in the end.

You can easily make Llama 3 say 'nigger' by prepending {{char}}: in the response, which puts it into "roleplaying mode". It just has not to be able to start the response with "I cannot..."

The response will probably be caricature of how the average US democrat thinks a racist speaks, but that the vanilla Instruct model can do this means that the training data is not as filtered as people imagine. I bet Meta still used their cleaned&processed adult book stash for training the model, in the end.

Anonymous 01/19/25(Sun)05:18:17 No.103953510

>>103953484

You have to chew it out for llama, that's the problem. It would never go off-script and show some initiative. None of the tunes do it.