/lmg/ - Local Models General

Anonymous 01/14/25(Tue)04:26:03 | 450 comments | 44 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103881688 & >>103871751

►News

>(01/14) MiniCPM-o 2.6 released with multi-image and video understanding, realtime speech conversation, voice cloning, and multimodal live streaming: https://hf.co/openbmb/MiniCPM-o-2_6

>(01/08) Phi-4 weights released: https://hf.co/microsoft/phi-4

>(01/06) NVIDIA Project DIGITS announced, capable of running 200B models: https://nvidianews.nvidia.com/news/nvidia-puts-grace-blackwell-on-every-desk-and-at-every-ai-developers-fingertips

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103881688 & >>103871751

►News

>(01/14) MiniCPM-o 2.6 released with multi-image and video understanding, realtime speech conversation, voice cloning, and multimodal live streaming: https://hf.co/openbmb/MiniCPM-o-2_6

>(01/08) Phi-4 weights released: https://hf.co/microsoft/phi-4

>(01/06) NVIDIA Project DIGITS announced, capable of running 200B models: https://nvidianews.nvidia.com/news/

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/14/25(Tue)04:26:33 No.103888594

►Recent Highlights from the Previous Thread: >>103881688

--Paper: SPAM: Spike-Aware Adam with Momentum Reset for Stable LLM Training:

>103886784 >103886926

--Paper: Transformer^2: Self-adaptive LLMs:

>103886931 >103887964

--Papers:

>103886689 >103886794 >103887023

--Test-time compute storytelling and the role of model size in creative writing:

>103884801 >103884814 >103884855 >103884873 >103884914 >103885026 >103885107

--Relationship between model size and quantization sensitivity discussed:

>103881791 >103881850 >103881898 >103881964 >103882008 >103882183

--Discussion of DIGITS and PC building options, with a focus on memory bandwidth and performance:

>103883782 >103883785 >103883880 >103883904 >103883934 >103883963 >103883986 >103884107 >103884142 >103884150 >103884596 >103883999 >103884009 >103884136 >103883825

--Discussion of Mac and DIGITS systems, memory, and GPU capabilities:

>103882515 >103882577 >103882642 >103882740 >103882781 >103882884 >103883056 >103883116 >103883142 >103883315

--Discussion of AI models, GPU performance, and optimization strategies:

>103884724 >103884770 >103885024 >103886084 >103886381 >103886954 >103886979 >103886993 >103887024 >103887252 >103887019 >103887037 >103887132

--Speculation about Nvidia's mysterious repository on Hugging Face:

>103884597 >103884660 >103884687 >103884712 >103885202 >103884910

--FP8 vs Q8: data types, precision, and information loss:

>103883157 >103883204 >103883239 >103883245 >103883249

--Phi vs Llama for finetuning discussion:

>103884786 >103884861 >103884972 >103885004 >103885793

--Anon shares anonymous-chatbot response explaining lolilibaba concept:

>103883568 >103883604 >103884750

--UGI Leaderboard evaluates language models' ideological leaning and neutrality:

>103883290 >103883625

--Miku (free space):

>103883015 >103884150 >103884327 >103884720 >103886919 >103887221

►Recent Highlight Posts from the Previous Thread: >>103881693

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--Paper: SPAM: Spike-Aware Adam with Momentum Reset for Stable LLM Training:

>103886784 >103886926

--Paper: Transformer^2: Self-adaptive LLMs:

>103886931 >103887964

--Papers:

>103886689 >103886794 >103887023

--Test-time compute storytelling and the role of model size in creative writing:

>103884801 >103884814 >103884855 >103884873 >103884914 >103885026 >103885107

--Relationship between model size and quantization sensitivity discussed:

>103881791 >103881850 >103881898 >103881964 >103882008 >103882183

--Discussion of DIGITS and PC building options, with a focus on memory bandwidth and performance:

>103883782 >103883785 >103883880 >103883904 >103883934 >103883963 >103883986 >103884107 >103884142 >103884150 >103884596 >103883999 >103884009 >103884136 >103883825

--Discussion of Mac and DIGITS systems, memory, and GPU capabilities:

>103882515 >103882577 >103882642 >103882740 >103882781 >103882884 >103883056 >103883116 >103883142 >103883315

--Discussion of AI models, GPU performance, and optimization strategies:

>103884724 >103884770 >103885024 >103886084 >103886381 >103886954 >103886979 >103886993 >103887024 >103887252 >103887019 >103887037 >103887132

--Speculation about Nvidia's mysterious repository on Hugging Face:

>103884597 >103884660 >103884687 >103884712 >103885202 >103884910

--FP8 vs Q8: data types, precision, and information loss:

>103883157 >103883204 >103883239 >103883245 >103883249

--Phi vs Llama for finetuning discussion:

>103884786 >103884861 >103884972 >103885004 >103885793

--Anon shares anonymous-chatbot response explaining lolilibaba concept:

>103883568 >103883604 >103884750

--UGI Leaderboard evaluates language models' ideological leaning and neutrality:

>103883290 >103883625

--Miku (free space):

>103883015 >103884150 >103884327 >103884720 >103886919 >103887221

►Recent Highlight Posts from the Previous Thread: >>103881693

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/14/25(Tue)04:29:48 No.103888618

>Let's try this "MiniCPM"

>1 hour later

>Still building flash attention wheel

Yes I installed ninja

>1 hour later

>Still building flash attention wheel

Yes I installed ninja

Anonymous 01/14/25(Tue)04:36:39 No.103888658

Tetolove

Anonymous 01/14/25(Tue)04:47:24 No.103888709

>>103888594

I love you, Recap Teto.

I love you, Recap Teto.

Anonymous 01/14/25(Tue)05:05:15 No.103888840

are any of these good enough to use with a frontend ai chatbot with reasonable speed? I have a 3090, I'm guessing most of you do something similar? I last tried llama3 and it was impressive for running locally and free but fairly bad compared to chatgpt and other online models

Anonymous 01/14/25(Tue)05:12:03 No.103888885

>>103888840

Local models are usually all caught up to SaaS in at least one area, but there aren't really any models that accel in all areas like a lot of the corpo models do. You gotta pick and choose a model for your niche. Llama 3 is kind of the exception there in that it's aggressively mediocre at everything

Local models are usually all caught up to SaaS in at least one area, but there aren't really any models that accel in all areas like a lot of the corpo models do. You gotta pick and choose a model for your niche. Llama 3 is kind of the exception there in that it's aggressively mediocre at everything

Anonymous 01/14/25(Tue)05:12:04 No.103888886

what would you use if you had two 3060 (12 GB each) and 32 GB VRAM? for either RP or other things

>inb4 jokes about how the rig is shit, I guess

>inb4 jokes about how the rig is shit, I guess

Anonymous 01/14/25(Tue)05:13:26 No.103888893

>>103888886

A Qwen 32b based model at Q5 or something probably. It's not the worst ending.

A Qwen 32b based model at Q5 or something probably. It's not the worst ending.

Anonymous 01/14/25(Tue)05:16:12 No.103888919

>>103888886

>and 32 GB VRAM

Nice 5090 + 2x 3060 setup.

On a more serious note, I'd go with Cydonia first. See if you like it.

>and 32 GB VRAM

Nice 5090 + 2x 3060 setup.

On a more serious note, I'd go with Cydonia first. See if you like it.

Anonymous 01/14/25(Tue)05:16:55 No.103888923

>>103888885

damn, almost neato digits & thnks for the info, Ill investigate more tomorrow. all the gooners in aicg dying over jailbreaks and proxies when lmg might be the answer

damn, almost neato digits & thnks for the info, Ill investigate more tomorrow. all the gooners in aicg dying over jailbreaks and proxies when lmg might be the answer

Anonymous 01/14/25(Tue)05:55:21 No.103889140

Are there models that can translate as in are those specialized or any model can? I'm not really finding anything atm(found something from 2 year ago though)

Anonymous 01/14/25(Tue)06:09:44 No.103889230

bitnet millions of experts 70b when?

Anonymous 01/14/25(Tue)06:11:22 No.103889242

>>103889221

By being attractive.

By being attractive.

Anonymous 01/14/25(Tue)06:12:50 No.103889251

Anonymous 01/14/25(Tue)06:22:17 No.103889317

>>103889251

*audibly pops the magic bubble*

*audibly pops the magic bubble*

Anonymous 01/14/25(Tue)06:24:04 No.103889333

>>103889317

GLGLLUGLLGLRHH

GLGLLUGLLGLRHH

Anonymous 01/14/25(Tue)06:31:51 No.103889378

>>103889333

Noooo :(

Noooo :(

Anonymous 01/14/25(Tue)06:40:41 No.103889448

>>103888886

Do you mean 32GB RAM? Or 56GB VRAM total?

Do you mean 32GB RAM? Or 56GB VRAM total?

Anonymous 01/14/25(Tue)06:46:59 No.103889484

>>103888893

examples?

examples?

Anonymous 01/14/25(Tue)06:47:46 No.103889489

>>103889140

Take a look at some popular models.

See what languages they have been trained on.

They should be able to pretty-much translate text between those languages.

Take a look at some popular models.

See what languages they have been trained on.

They should be able to pretty-much translate text between those languages.

Anonymous 01/14/25(Tue)07:11:02 No.103889655

>>103888893

>>103888919

Thank you. I'll try them both out.

>>103889448

Yeah, I have 32 additional VRAM that was soldered on by some dude in Shenzhen while I had noodles

>>103888919

Thank you. I'll try them both out.

>>103889448

Yeah, I have 32 additional VRAM that was soldered on by some dude in Shenzhen while I had noodles

Anonymous 01/14/25(Tue)07:13:05 No.103889676

>https://huggingface.co/openbmb/MiniCPM-o-2_6/tree/main

I hate the demo because I get nervous talking with female voices

I hate the demo because I get nervous talking with female voices

Anonymous 01/14/25(Tue)07:18:29 No.103889710

https://web.archive.org/web/20250114121236/https://www.theregister.com/2025/01/09/us_weighing_global_limits_ai_exports/

>"Along with compute caps on tier-2 nations, the rules may also include limits on the export of closed AI model weights. Model weights represent the numerical values that dictate how modern AI models function. Under the proposed rules, the Commerce Department aims to prevent companies from hosting closed model weights in tier-3 countries like China and Russia. Such a move would prevent major closed-source models from being served from these nations. Open models, like Meta's Llama 3.1 405B, would not be subject to these rules, nor would any closed model deemed less sophisticated than an existing open model."

>"nor would any closed model deemed less sophisticated than an existing open model."

What are they trying to do here?

>"Along with compute caps on tier-2 nations, the rules may also include limits on the export of closed AI model weights. Model weights represent the numerical values that dictate how modern AI models function. Under the proposed rules, the Commerce Department aims to prevent companies from hosting closed model weights in tier-3 countries like China and Russia. Such a move would prevent major closed-source models from being served from these nations. Open models, like Meta's Llama 3.1 405B, would not be subject to these rules, nor would any closed model deemed less sophisticated than an existing open model."

>"nor would any closed model deemed less sophisticated than an existing open model."

What are they trying to do here?

Anonymous 01/14/25(Tue)07:28:33 No.103889779

>>103889710

My guess is preventing potentially hostile countries from having privileged access over powerful AI models.

My guess is preventing potentially hostile countries from having privileged access over powerful AI models.

Anonymous 01/14/25(Tue)07:41:35 No.103889859

Titanpill me RIGHT NOW

https://arxiv.org/pdf/2501.00663v1

https://arxiv.org/pdf/2501.00663v1

Anonymous 01/14/25(Tue)07:43:24 No.103889870

Anonymous 01/14/25(Tue)08:00:13 No.103889960

>>103888589

https://youtu.be/OSKgz8NfUoI

https://youtu.be/OSKgz8NfUoI

Anonymous 01/14/25(Tue)08:01:41 No.103889965

>>103889859

I'm a retard when it comes to math formulas, but at least understand the terminology, and if I get it right, the basic idea is:

Models we use these days function best as an equivalent of short-term memory (which is why they get dumber with longer contexts). This architecture involves basically having a meta-model with additional context: a long-term memory that evaluates how "surprising" or "memorable" something is in its context, and feeds that data to the core model that operates on a smaller context (they keep using the phrase "learning to memorize at test time", but I see nothing to suggest any moving parts, so to speak). In other words, important details should be preserved from a much larger context (they claim it can reach 2M context), influencing the short-term memory, and in turn influencing the output.

It seems theoretically solid. Long-term memory is one of the aspects of LLMs that badly need a breakthrough, and this seems like a viable approach without requiring dynamic data on the user side.

I'm a retard when it comes to math formulas, but at least understand the terminology, and if I get it right, the basic idea is:

Models we use these days function best as an equivalent of short-term memory (which is why they get dumber with longer contexts). This architecture involves basically having a meta-model with additional context: a long-term memory that evaluates how "surprising" or "memorable" something is in its context, and feeds that data to the core model that operates on a smaller context (they keep using the phrase "learning to memorize at test time", but I see nothing to suggest any moving parts, so to speak). In other words, important details should be preserved from a much larger context (they claim it can reach 2M context), influencing the short-term memory, and in turn influencing the output.

It seems theoretically solid. Long-term memory is one of the aspects of LLMs that badly need a breakthrough, and this seems like a viable approach without requiring dynamic data on the user side.

Anonymous 01/14/25(Tue)08:06:50 No.103889997

Are non-dense models anti-local because they tend to require corporate-tier amounts of VRAM and substantially less compute, making them optimal for SaaS deployments?

Anonymous 01/14/25(Tue)08:09:08 No.103890016

Anonymous 01/14/25(Tue)08:09:09 No.103890018

>>103889859

Sorry, you said pill, not explain.

Well, if the results can be trusted, this basically cracks the problem of attention dilution over long contexts wide open. Finds the needle in the haystack near-perfectly. You know that important detail you mentioned exactly once in your RP some 10k tokens ago, that gradually got washed out until it was completely forgotten? This solves that problem.

Sorry, you said pill, not explain.

Well, if the results can be trusted, this basically cracks the problem of attention dilution over long contexts wide open. Finds the needle in the haystack near-perfectly. You know that important detail you mentioned exactly once in your RP some 10k tokens ago, that gradually got washed out until it was completely forgotten? This solves that problem.

Anonymous 01/14/25(Tue)08:09:23 No.103890019

>>103889960

cftf?

cftf?

Anonymous 01/14/25(Tue)08:10:37 No.103890023

>>103889997

Not necessarily, Mixtral used to be a VRAMlet friendly model because as long as it can fit in the RAM and the active parameters aren't too many, it can ran at decent speeds

Not necessarily, Mixtral used to be a VRAMlet friendly model because as long as it can fit in the RAM and the active parameters aren't too many, it can ran at decent speeds

Anonymous 01/14/25(Tue)08:13:03 No.103890038

>>103890018

tl;dr loredumpfags rejoice?

tl;dr loredumpfags rejoice?

Anonymous 01/14/25(Tue)08:13:52 No.103890043

Anonymous 01/14/25(Tue)08:18:57 No.103890079

>>103889997

There are ratios of total size to expert size where it is actually better for local since you can use your regular ram to get a more parameters at a small speed cost.

There are ratios of total size to expert size where it is actually better for local since you can use your regular ram to get a more parameters at a small speed cost.

Anonymous 01/14/25(Tue)08:19:40 No.103890085

I tried getting the the local demo for MiniCPM-o 2.6 working but there were too many problems after the other to make it work well.

Seems solid otherwise. Might make a fun bantz buddy when gaming or something.

Seems solid otherwise. Might make a fun bantz buddy when gaming or something.

Anonymous 01/14/25(Tue)08:20:46 No.103890093

>>103889859

just skimmed it. feels more than a meme paper this time. (he said)

just skimmed it. feels more than a meme paper this time. (he said)

Anonymous 01/14/25(Tue)08:21:19 No.103890096

>>103890019

go back

go back

Anonymous 01/14/25(Tue)08:21:37 No.103890100

Anonymous 01/14/25(Tue)08:22:10 No.103890105

Anonymous 01/14/25(Tue)08:27:22 No.103890151

I want a model or finetune that understands memes and culture.

Anonymous 01/14/25(Tue)08:29:00 No.103890163

>>103890151

Claude. Heard it can even talk like a zoomer if prompted.

Claude. Heard it can even talk like a zoomer if prompted.

Anonymous 01/14/25(Tue)08:33:00 No.103890199

gemini didn't like my strategy for tsunamis

Anonymous 01/14/25(Tue)08:37:00 No.103890220

>>103890199

>missing the meme and taking it completely literally, at face value instead

At last, artificial autism.

>missing the meme and taking it completely literally, at face value instead

At last, artificial autism.

Anonymous 01/14/25(Tue)08:47:54 No.103890295

They should make a RP leaderboard of sorts entirely based on Nala test. It is just such a good test for so many reasons and filter shite

Anonymous 01/14/25(Tue)08:51:38 No.103890322

>>103890295

It's actually a completely retarded meme though.

It's actually a completely retarded meme though.

Anonymous 01/14/25(Tue)08:53:08 No.103890329

>>103890295

I agree.

You evaluate the responses based on a couple of categories such as anatomical understanding, character adherence, etc.

Do 5 swipes for each model and take the average of the best 3 or something.

I agree.

You evaluate the responses based on a couple of categories such as anatomical understanding, character adherence, etc.

Do 5 swipes for each model and take the average of the best 3 or something.

Anonymous 01/14/25(Tue)09:12:31 No.103890472

>>103888589

cute teto

cute teto

Anonymous 01/14/25(Tue)09:12:48 No.103890474

>>103890163

A local model.

A local model.

Anonymous 01/14/25(Tue)09:17:50 No.103890518

>>103890474

DeepSeekV3

DeepSeekV3

Anonymous 01/14/25(Tue)09:24:21 No.103890566

llamiku 4 when

Anonymous 01/14/25(Tue)09:30:23 No.103890626

>>103888589

>MiniCPM-o

Has anyone got this working locally with it's functionality intact? I couldn't get it to work.

>MiniCPM-o

Has anyone got this working locally with it's functionality intact? I couldn't get it to work.

Anonymous 01/14/25(Tue)09:46:14 No.103890770

I'm going to miss lmg-anon...

Anonymous 01/14/25(Tue)09:50:56 No.103890815

>>103890770

He'll be back in 3-15 years, no worries. Just in time for llama4.3-70b

He'll be back in 3-15 years, no worries. Just in time for llama4.3-70b

Anonymous 01/14/25(Tue)09:55:55 No.103890872

>>103890770

So what did he do exactly? Say a woman should suck his dick cause his PC runs pytorch and a woman actually did it?

So what did he do exactly? Say a woman should suck his dick cause his PC runs pytorch and a woman actually did it?

Anonymous 01/14/25(Tue)10:00:10 No.103890911

>>103890770

Which tag do I use for the middle one's tit shape?

Which tag do I use for the middle one's tit shape?

Anonymous 01/14/25(Tue)10:01:05 No.103890923

Has anyone tried Sky-T1-32B-Preview?

Allegedly it is like qwq but less buggy and better at programming.

Allegedly it is like qwq but less buggy and better at programming.

Anonymous 01/14/25(Tue)10:01:43 No.103890930

Anonymous 01/14/25(Tue)10:08:08 No.103891015

>>103890911

bestiality

bestiality

Anonymous 01/14/25(Tue)10:09:02 No.103891025

>>103891015

I don't get the joke.

I don't get the joke.

Anonymous 01/14/25(Tue)10:09:38 No.103891030

>>103890923

it's a model fine-tuned on qwq outputs, i doubt it's any better than it

it's a model fine-tuned on qwq outputs, i doubt it's any better than it

Anonymous 01/14/25(Tue)10:16:13 No.103891090

>>103891030

It should be better but not much better.

It should be better but not much better.

Anonymous 01/14/25(Tue)10:19:46 No.103891132

I doubt thats this is even lmg-anon but that statement is not true right? That sounds crazy.

Anonymous 01/14/25(Tue)10:19:59 No.103891137

Updated Silly Tavern on single board computer to include maintanace items, and get it to monitor and re-connect to wifi if dropped.

https://rentry.org/SillyTavernOnSBC

https://rentry.org/SillyTavernOnSBC

Anonymous 01/14/25(Tue)10:20:56 No.103891147

>>103891132

Wrong pic, meant to post this.

Wrong pic, meant to post this.

Anonymous 01/14/25(Tue)10:22:41 No.103891170

Anonymous 01/14/25(Tue)10:26:28 No.103891200

Anonymous 01/14/25(Tue)10:30:15 No.103891235

>>103891147

WHO

WHO

Anonymous 01/14/25(Tue)10:30:38 No.103891241

>>103891147

Since you can get imprisoned for a few insults on LoL, I'm sure that's true.

Since you can get imprisoned for a few insults on LoL, I'm sure that's true.

Anonymous 01/14/25(Tue)10:30:40 No.103891242

>>103891235

some locust enabler

some locust enabler

Anonymous 01/14/25(Tue)10:32:49 No.103891264

>>103888589

Hi bros

Im a bit out of the loop on the memesamplers, can someone spoonfeed me a good value of smooth/dry or xtc for largestral?

normally i dont mess with them, but i remember smooth being kinda nice, and after doing a multicharacter card and the model giving VASTLY more attention to a character with a common name, i think i need to use them

Hi bros

Im a bit out of the loop on the memesamplers, can someone spoonfeed me a good value of smooth/dry or xtc for largestral?

normally i dont mess with them, but i remember smooth being kinda nice, and after doing a multicharacter card and the model giving VASTLY more attention to a character with a common name, i think i need to use them

Anonymous 01/14/25(Tue)10:33:46 No.103891273

Anonymous 01/14/25(Tue)10:38:55 No.103891318

Anonymous 01/14/25(Tue)10:40:16 No.103891329

>>103891147

The entirety of South Korea will disappear in 3 generations. He'll get the last laugh, might even be alive for it.

The entirety of South Korea will disappear in 3 generations. He'll get the last laugh, might even be alive for it.

Anonymous 01/14/25(Tue)10:40:27 No.103891331

>>103890220

No. Catching the meme is the autism in this scenario, anon. Imagine going up to some rando math professor and being like

>"ehehe... gotta go fast, bet"

He'll think you're retarded. Same with gemini. It's just not allowed to say it.

No. Catching the meme is the autism in this scenario, anon. Imagine going up to some rando math professor and being like

>"ehehe... gotta go fast, bet"

He'll think you're retarded. Same with gemini. It's just not allowed to say it.

Anonymous 01/14/25(Tue)10:40:49 No.103891333

Anonymous 01/14/25(Tue)10:41:09 No.103891341

>>103891273

Why is it so weird? They probably were accomplices to some degree for whatever he did.

Why is it so weird? They probably were accomplices to some degree for whatever he did.

Anonymous 01/14/25(Tue)10:42:07 No.103891353

Kill yourself.

Anonymous 01/14/25(Tue)10:43:20 No.103891367

>>103891333

is that kanken ni-kyu? Impressive.

is that kanken ni-kyu? Impressive.

Anonymous 01/14/25(Tue)10:48:28 No.103891423

>>103891333

im jealous

im jealous

Anonymous 01/14/25(Tue)10:49:31 No.103891433

t-thanks grok. cant wait to have that power locally soon.

Anonymous 01/14/25(Tue)10:52:58 No.103891478

>In South Korea, defamation laws are particularly stringent, as evidenced by the information provided in the related web results.

>Defamation can lead to criminal charges with potential imprisonment up to three years if the information is true, and up to seven years if it is false.

>This is highlighted in the context of South Korean cyber defamation law, where even true information that harms a person's reputation can be punishable.

>This legal framework is different from many Western countries where defamation typically results in civil rather than criminal liability.

>Specific Allegations: According to Air Katakana's subsequent posts, the person who reported him to the police is a professor at KAIST named ******* Lee.

>Air Katakana alleges that this professor forced him to send a large amount of money to his wife under the threat of revoking a job offer that had been promised over a year prior.

>This suggests that the defamation might be tied to these financial and employment-related disputes.

>imprisonment up to three years if the information is true

I seriously hope that's just hallucinated up. 3 years for posting true stuff somebody doesn't like. wow

>Defamation can lead to criminal charges with potential imprisonment up to three years if the information is true, and up to seven years if it is false.

>This is highlighted in the context of South Korean cyber defamation law, where even true information that harms a person's reputation can be punishable.

>This legal framework is different from many Western countries where defamation typically results in civil rather than criminal liability.

>Specific Allegations: According to Air Katakana's subsequent posts, the person who reported him to the police is a professor at KAIST named ******* Lee.

>Air Katakana alleges that this professor forced him to send a large amount of money to his wife under the threat of revoking a job offer that had been promised over a year prior.

>This suggests that the defamation might be tied to these financial and employment-related disputes.

>imprisonment up to three years if the information is true

I seriously hope that's just hallucinated up. 3 years for posting true stuff somebody doesn't like. wow

Anonymous 01/14/25(Tue)10:59:10 No.103891569

>>103891478

This is something air katakana wrote in one of his tweets, I think it was irony and the AI took it as a fact.

This is something air katakana wrote in one of his tweets, I think it was irony and the AI took it as a fact.

Anonymous 01/14/25(Tue)11:00:45 No.103891593

>>103891569

It's on wikipedia

>>103891478

I'd assume that's to discourage gossip and feuds about petty stuff, but it's ridiculously phrased.

It's on wikipedia

>>103891478

I'd assume that's to discourage gossip and feuds about petty stuff, but it's ridiculously phrased.

Anonymous 01/14/25(Tue)11:03:07 No.103891619

Anonymous 01/14/25(Tue)11:07:23 No.103891662

>>103890626

the vision model works ok for me

the vision model works ok for me

Anonymous 01/14/25(Tue)11:08:04 No.103891668

Anonymous 01/14/25(Tue)11:11:23 No.103891698

>>103890518

A local model I can run. I'm not a poorfag either. I have 64gb ddr5.

A local model I can run. I'm not a poorfag either. I have 64gb ddr5.

Anonymous 01/14/25(Tue)11:13:03 No.103891721

>>103891698

"understand memes and culture" is too broad. what do you want to do exactly?

"understand memes and culture" is too broad. what do you want to do exactly?

Anonymous 01/14/25(Tue)11:15:47 No.103891754

>>103891478

You have to understand that all of Korea was nothing by illiterate farmers just a couple generations ago. Unlike the Japanese and, at least the historically urban parts of, the Chinese, they don't really have a tradition of a stable and functioning modern civilization.

You have to understand that all of Korea was nothing by illiterate farmers just a couple generations ago. Unlike the Japanese and, at least the historically urban parts of, the Chinese, they don't really have a tradition of a stable and functioning modern civilization.

Anonymous 01/14/25(Tue)11:20:22 No.103891802

>>103891721

If you have to ask, your judgement won't be useful to me. I can tell based on the tone of your post that you're a pedantic shithead.

If you have to ask, your judgement won't be useful to me. I can tell based on the tone of your post that you're a pedantic shithead.

Anonymous 01/14/25(Tue)11:24:28 No.103891840

>>103891802

nta, but jesus fuck, anon...

>being pedantic about memes and "culture"

>I'm not a poorfag either.

>I have 64gb ddr5.

>pedantic shithead.

nta, but jesus fuck, anon...

>being pedantic about memes and "culture"

>I'm not a poorfag either.

>I have 64gb ddr5.

>pedantic shithead.

Anonymous 01/14/25(Tue)11:25:05 No.103891846

>>103891132

>I doubt thats this is even lmg-anon

he seems like a pretty cool guy. Does he actually hang out here? We could swap JLPT stories

>I doubt thats this is even lmg-anon

he seems like a pretty cool guy. Does he actually hang out here? We could swap JLPT stories

Anonymous 01/14/25(Tue)11:26:30 No.103891859

Anonymous 01/14/25(Tue)11:27:17 No.103891869

>>103891668

You're right.

You're right.

Anonymous 01/14/25(Tue)11:31:31 No.103891904

How exacly one start with this? I`m on linux.

From the rentry tutorial it says to download oobabooga, their github says to start_linux.sh.

Then I went to https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard#/

But couldn`t find a download link to any project. What after this?

From the rentry tutorial it says to download oobabooga, their github says to start_linux.sh.

Then I went to https://huggingface.co/spaces/open-

But couldn`t find a download link to any project. What after this?

Anonymous 01/14/25(Tue)11:34:32 No.103891932

>>103891904

By waiting 2 more weeks for better models.

By waiting 2 more weeks for better models.

Anonymous 01/14/25(Tue)11:34:34 No.103891933

>>103891904

copy-paste the name of the repo into the model download box in ooba. after that, load the model and done

copy-paste the name of the repo into the model download box in ooba. after that, load the model and done

Anonymous 01/14/25(Tue)11:34:47 No.103891935

>>103891802

are you a toddler that expects to be spoonfed with minimal communication (crying)?

are you a toddler that expects to be spoonfed with minimal communication (crying)?

Anonymous 01/14/25(Tue)11:44:44 No.103892037

>>103891904

>memeboard leaders these days are 78(!)B frankenmerges of Qwen-72B

I hadn't looked at that cesspool in a year. Good to know it hasn't changed since then after it got flooded by chinks and indians training meme models on benchmark data.

>memeboard leaders these days are 78(!)B frankenmerges of Qwen-72B

I hadn't looked at that cesspool in a year. Good to know it hasn't changed since then after it got flooded by chinks and indians training meme models on benchmark data.

Anonymous 01/14/25(Tue)11:49:07 No.103892080

>>103892037

we need better benchmarks

we need better benchmarks

Anonymous 01/14/25(Tue)12:04:55 No.103892241

how are speeds benchmarked anyway?

T/s just refers to output speed right.

Is there a factor x where processing input is faster as generating tokens or are these two unrelated. Also speeds seem to differ quite a bit by not only size but model and datatype.

T/s just refers to output speed right.

Is there a factor x where processing input is faster as generating tokens or are these two unrelated. Also speeds seem to differ quite a bit by not only size but model and datatype.

Anonymous 01/14/25(Tue)12:06:30 No.103892252

>>103892241

Most backends show you processing, generation and the total time each in t/s.

Most backends show you processing, generation and the total time each in t/s.

Anonymous 01/14/25(Tue)12:14:51 No.103892328

>>103892037

>>103892080

open-llm-leaderboard evaluates on non-CoT. Meaning, they don't let the model generate a full solution, and then search through it and extract the answer, but rather directly check the probability of the answer in the first few tokens. That's why qwen2.5-72b scores higher than qwen2.5-72b-instruct, even though instruct is a much better assistant (which this benchmark is trying to evaluate).

Someone correct me if I'm wrong.

>>103892080

open-llm-leaderboard evaluates on non-CoT. Meaning, they don't let the model generate a full solution, and then search through it and extract the answer, but rather directly check the probability of the answer in the first few tokens. That's why qwen2.5-72b scores higher than qwen2.5-72b-instruct, even though instruct is a much better assistant (which this benchmark is trying to evaluate).

Someone correct me if I'm wrong.

Anonymous 01/14/25(Tue)12:30:04 No.103892479

I'm following the "getting started" guide. It's telling me to "download nemo 12b instruct gguf". Where do I find this?

Anonymous 01/14/25(Tue)12:31:28 No.103892504

>>103892479

Nigga, this isn't spoonfeeding at this point, it's giving you knowledge in a fucking IV line.

Nigga, this isn't spoonfeeding at this point, it's giving you knowledge in a fucking IV line.

Anonymous 01/14/25(Tue)12:33:46 No.103892534

>>103886370

>deepseek repeats too much

using --chat-template deepseek3 with a recent llama.cpp has eliminated any repeating for me. I don't think I've seen it once.

The only other variable is that I self-quant, so I can't speak for any online ggufs if those are the core issue

>deepseek repeats too much

using --chat-template deepseek3 with a recent llama.cpp has eliminated any repeating for me. I don't think I've seen it once.

The only other variable is that I self-quant, so I can't speak for any online ggufs if those are the core issue

Anonymous 01/14/25(Tue)12:33:48 No.103892535

>>103892479

>the treasure map says X marks the spot, where do I find this so I can start digging?

>[Attached picture: big X on the ground]

>the treasure map says X marks the spot, where do I find this so I can start digging?

>[Attached picture: big X on the ground]

Anonymous 01/14/25(Tue)12:35:08 No.103892551

>>103892479

In the off chance you aren't trolling, have you tried writing "nemo 12b instruct gguf" in the search bar that's clearly visible in your image?

If not, try that.

You'll see a bunch of results, probably, download the bartowski one that has mistral in the name.

In the off chance you aren't trolling, have you tried writing "nemo 12b instruct gguf" in the search bar that's clearly visible in your image?

If not, try that.

You'll see a bunch of results, probably, download the bartowski one that has mistral in the name.

Anonymous 01/14/25(Tue)12:36:57 No.103892569

>>103892551

Doesn't "Vikhr" indicate that it's Russian?

Doesn't "Vikhr" indicate that it's Russian?

Anonymous 01/14/25(Tue)12:37:10 No.103892574

>>103892479

Use this https://ollama.com/

Use this https://ollama.com/

Anonymous 01/14/25(Tue)12:38:48 No.103892598

>>103892574

This is like giving someone a crackpipe.

This is like giving someone a crackpipe.

Anonymous 01/14/25(Tue)12:38:55 No.103892599

>>103892569

The one with mistral in the name anon.

The one with mistral in the name anon.

Anonymous 01/14/25(Tue)12:39:03 No.103892600

Anonymous 01/14/25(Tue)12:41:00 No.103892625

>>103892574

This is the one time recommending ollama is ok

This is the one time recommending ollama is ok

Anonymous 01/14/25(Tue)12:47:49 No.103892697

anyone ever use the cpu pinning feature (not --numa) in lcpp? it doesn't appear to obey the strict flag or pinning mask at all and my inference just gets slower, which doesn't make sense to me.

Anonymous 01/14/25(Tue)12:49:52 No.103892714

Anonymous 01/14/25(Tue)12:54:03 No.103892757

Did they cheat with Sky T1? Or is it actually comparable to o1?

Anonymous 01/14/25(Tue)12:55:46 No.103892779

Anonymous 01/14/25(Tue)12:57:34 No.103892808

>>103890518

Ok, now one that can do it for more than once message (since deepseek will come up with something good but then just repeat parts of it every message forever.)

Ok, now one that can do it for more than once message (since deepseek will come up with something good but then just repeat parts of it every message forever.)

Anonymous 01/14/25(Tue)12:58:43 No.103892824

dead on arrival

doesn't even know what a migu is.

doesn't even know what a migu is.

Anonymous 01/14/25(Tue)12:59:38 No.103892841

>>103890295

Once you make something a benchmark then it becomes useless since they'll optimize for it and the test isn't representative of true performance.

Once you make something a benchmark then it becomes useless since they'll optimize for it and the test isn't representative of true performance.

Anonymous 01/14/25(Tue)13:00:14 No.103892849

>>103892824

Not exactly a fair test being out of focus and off-model.

Not exactly a fair test being out of focus and off-model.

Anonymous 01/14/25(Tue)13:00:38 No.103892854

>>103892808

>deepseek will come up with something good but then just repeat parts of it every message forever.

you're doing something wrong

>deepseek will come up with something good but then just repeat parts of it every message forever.

you're doing something wrong

Anonymous 01/14/25(Tue)13:00:55 No.103892858

>>103892534

post a log with 5 bot messages

post a log with 5 bot messages

Anonymous 01/14/25(Tue)13:01:53 No.103892870

Anonymous 01/14/25(Tue)13:01:59 No.103892871

>>103892824

There's only so much a benchmaxxed 8B can do.

There's only so much a benchmaxxed 8B can do.

Anonymous 01/14/25(Tue)13:02:29 No.103892880

>>103892854

Don't think so, Chinese models just have a lot of shills. People said qwen was good too and I didn't like it either.

Don't think so, Chinese models just have a lot of shills. People said qwen was good too and I didn't like it either.

Anonymous 01/14/25(Tue)13:02:39 No.103892882

>>103892870

assistant messages

assistant messages

Anonymous 01/14/25(Tue)13:04:05 No.103892905

>>103892849

The whole purpose of machine learning is to create emergent, out of distribution capabilities. It's a perfectly fair test. >>103892871

Still pretty impressive level of understanding for an 8b

The whole purpose of machine learning is to create emergent, out of distribution capabilities. It's a perfectly fair test. >>103892871

Still pretty impressive level of understanding for an 8b

Anonymous 01/14/25(Tue)13:04:30 No.103892917

>>103892824

Knowing more obscure stuff is where more params come in.

Knowing more obscure stuff is where more params come in.

SnusGoose 01/14/25(Tue)13:05:19 No.103892930

A new model is out, its 45A450B model.

https://www.minimaxi.com/en

https://www.minimaxi.com/en

Anonymous 01/14/25(Tue)13:05:29 No.103892931

Anonymous 01/14/25(Tue)13:06:49 No.103892949

>>103892930

Nice marketing page. Now show me the weights.

Nice marketing page. Now show me the weights.

Anonymous 01/14/25(Tue)13:07:23 No.103892957

>>103892931

I didn't know that character till I saw it several times on /lmg

I didn't know that character till I saw it several times on /lmg

Anonymous 01/14/25(Tue)13:09:41 No.103892982

>>103892949

Looks like it exists but the retarded namefag didn't think of posting the hf link or even the proper blogpost kek.

Looks like it exists but the retarded namefag didn't think of posting the hf link or even the proper blogpost kek.

SnusGoose 01/14/25(Tue)13:10:11 No.103892992

https://huggingface.co/MiniMaxAI/MiniMax-Text-01

Idk if its any good, they did some linear attention fuckery

Idk if its any good, they did some linear attention fuckery

Anonymous 01/14/25(Tue)13:11:01 No.103893002

>>103892992

4M context? I like that.

4M context? I like that.

Anonymous 01/14/25(Tue)13:11:29 No.103893010

>>103892992

Why do you have 2 HF accounts? The one you link on your landing page is empty: https://huggingface.co/MiniMax-AI

Why do you have 2 HF accounts? The one you link on your landing page is empty: https://huggingface.co/MiniMax-AI

SnusGoose 01/14/25(Tue)13:12:37 No.103893026

It’s not my model I’m not sure why they did that

Anonymous 01/14/25(Tue)13:14:01 No.103893047

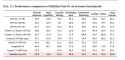

>>103892992

>MiniMax-Text-01 is a powerful language model with 456 billion total parameters, of which 45.9 billion are activated per token. To better unlock the long context capabilities of the model, MiniMax-Text-01 adopts a hybrid architecture that combines Lightning Attention, Softmax Attention and Mixture-of-Experts (MoE). Leveraging advanced parallel strategies and innovative compute-communication overlap methods—such as Linear Attention Sequence Parallelism Plus (LASP+), varlen ring attention, Expert Tensor Parallel (ETP), etc., MiniMax-Text-01's training context length is extended to 1 million tokens, and it can handle a context of up to 4 million tokens during the inference. On various academic benchmarks, MiniMax-Text-01 also demonstrates the performance of a top-tier model.

filled up my entire buzzword bingo card

>MiniMax-Text-01 is a powerful language model with 456 billion total parameters, of which 45.9 billion are activated per token. To better unlock the long context capabilities of the model, MiniMax-Text-01 adopts a hybrid architecture that combines Lightning Attention, Softmax Attention and Mixture-of-Experts (MoE). Leveraging advanced parallel strategies and innovative compute-communication overlap methods—such as Linear Attention Sequence Parallelism Plus (LASP+), varlen ring attention, Expert Tensor Parallel (ETP), etc., MiniMax-Text-01's training context length is extended to 1 million tokens, and it can handle a context of up to 4 million tokens during the inference. On various academic benchmarks, MiniMax-Text-01 also demonstrates the performance of a top-tier model.

filled up my entire buzzword bingo card

Anonymous 01/14/25(Tue)13:14:26 No.103893051

>>103892882

https://rentry.org/bds5pnoc

Here's the first nine from an rpg/text adventure type prompt. DSv3 Q6

Its not exactly unslopped or inspired prose, but its not repeating itself in any way I find alarming.

Believe it or not. The choice is yours!

https://rentry.org/bds5pnoc

Here's the first nine from an rpg/text adventure type prompt. DSv3 Q6

Its not exactly unslopped or inspired prose, but its not repeating itself in any way I find alarming.

Believe it or not. The choice is yours!

Anonymous 01/14/25(Tue)13:15:40 No.103893070

>>103892931

Miku isn't even a fucking thing anymore except for turbo-autists, literal oldfags (30+) and troons. The fact that you think she's still present in pop-culture puts you in the literal oldfag category, by the way.

Miku isn't even a fucking thing anymore except for turbo-autists, literal oldfags (30+) and troons. The fact that you think she's still present in pop-culture puts you in the literal oldfag category, by the way.

Anonymous 01/14/25(Tue)13:16:26 No.103893079

>>103893070

>puts you in the literal oldfag category

why do you say this like it's a bad thing, zoom zoom?

>puts you in the literal oldfag category

why do you say this like it's a bad thing, zoom zoom?

Anonymous 01/14/25(Tue)13:18:52 No.103893110

Anonymous 01/14/25(Tue)13:18:56 No.103893112

>>103893079

Man, I'm in that very same category, that's why I know damn well that you just never paused to think whether the things that were popular when you were a kid are still known at all. Happens to me all the time.

Man, I'm in that very same category, that's why I know damn well that you just never paused to think whether the things that were popular when you were a kid are still known at all. Happens to me all the time.

Anonymous 01/14/25(Tue)13:20:15 No.103893131

>>103893070

>Miku isn't even a fucking thing anymore

I dunno about the west, but Vocaloids are still massively popular with asian kids

>Miku isn't even a fucking thing anymore

I dunno about the west, but Vocaloids are still massively popular with asian kids

Anonymous 01/14/25(Tue)13:21:21 No.103893145

>>103893051

I can see repetition all through your text, but I guess it's good for you that you're blissfully unaware of it.

I can see repetition all through your text, but I guess it's good for you that you're blissfully unaware of it.

Anonymous 01/14/25(Tue)13:21:51 No.103893150

>>103893070

The point is that it used to be. And is thus highly represented within any stack of training data. Just about every AI model on earth knows what Hatsune Miku is.

The point is that it used to be. And is thus highly represented within any stack of training data. Just about every AI model on earth knows what Hatsune Miku is.

Anonymous 01/14/25(Tue)13:21:57 No.103893155

Anonymous 01/14/25(Tue)13:22:16 No.103893158

>>103893110

The simpleQA and IFEval score are high which means it's better for RP.

The simpleQA and IFEval score are high which means it's better for RP.

Anonymous 01/14/25(Tue)13:22:21 No.103893160

>>103893131

You know what, fair enough, I'll give you that. Still, you can't deny she's way less known on a global scale than she was a generation ago. Hate to say it, but Miku is a niche character at this point.

You know what, fair enough, I'll give you that. Still, you can't deny she's way less known on a global scale than she was a generation ago. Hate to say it, but Miku is a niche character at this point.

Anonymous 01/14/25(Tue)13:23:03 No.103893165

>>103893070

Yeah, Miku is pretty much like Touhou, it still exists only on the darkest corners of the internet.

Yeah, Miku is pretty much like Touhou, it still exists only on the darkest corners of the internet.

Anonymous 01/14/25(Tue)13:23:46 No.103893180

Anonymous 01/14/25(Tue)13:24:30 No.103893188

>>103893155

You still have time.

You still have time.

Anonymous 01/14/25(Tue)13:25:19 No.103893200

>>103893165

I'm still coping with that as a Touhoufag, to be honest. But yeah, it went from being the single most fervent fandom to a niche as well.

I'm still coping with that as a Touhoufag, to be honest. But yeah, it went from being the single most fervent fandom to a niche as well.

Anonymous 01/14/25(Tue)13:26:23 No.103893213

>>103893051

thanks for the log, I'll take a look

while I was waiting, I did a basic test using the chat api

thanks for the log, I'll take a look

while I was waiting, I did a basic test using the chat api

Anonymous 01/14/25(Tue)13:26:32 No.103893215

>>103893165

Hell yeah, now I am quirky and special for being a Mikufag

Hell yeah, now I am quirky and special for being a Mikufag

Anonymous 01/14/25(Tue)13:26:39 No.103893219

Anonymous 01/14/25(Tue)13:28:07 No.103893238

>>103893051

That is so different from what I do it's hard to say. If I use it as an assistant it pretty much starts and ends every reply the same way, you've kind of baked that into that method by having it ask you the same question on each turn so maybe that pacifies it.

That is so different from what I do it's hard to say. If I use it as an assistant it pretty much starts and ends every reply the same way, you've kind of baked that into that method by having it ask you the same question on each turn so maybe that pacifies it.

Anonymous 01/14/25(Tue)13:29:26 No.103893259

The goal for llms is predictability and to reduce surprises. The minority who use them to RP want surprises. You're fighting an uphill battle.

Anonymous 01/14/25(Tue)13:30:42 No.103893274

>>103893259

I thought the goal for LLMs was to become everything machines aka AGI.

I thought the goal for LLMs was to become everything machines aka AGI.

Anonymous 01/14/25(Tue)13:32:13 No.103893298

Anonymous 01/14/25(Tue)13:33:11 No.103893309

>>103892930

>>103892992

Hello Developer!

你好,开发者!

Welcome to 4chan LLM thread!

欢迎来到4chan LLM讨论串!

Please provide llama.cpp(https://github.com/ggerganov/llama.cpp) support so we can test your model!

请提供llama.cpp(https://github.com/ggerganov/llama.cpp)支持,以便我们可以测试你的模型!

Was your model trained on outputs of GPT4?

你的模型是基于GPT4的输出进行训练的吗?

>>103892992

Hello Developer!

你好,开发者!

Welcome to 4chan LLM thread!

欢迎来到4chan LLM讨论串!

Please provide llama.cpp(https://github.com/ggerga

请提供llama.cpp(https://github.com/gge

Was your model trained on outputs of GPT4?

你的模型是基于GPT4的输出进行训练的吗?

Anonymous 01/14/25(Tue)13:34:20 No.103893326

Anonymous 01/14/25(Tue)13:34:49 No.103893333

>>103889965

how is this different from genning summary?

I don't think you can summarize in parallel because individual tokens are created sequentially but weighted in full context.

My pleb expertise says the design is wrong and instead of having word tokens, it should also have sentence and story "super tokens" or any kind of structure or weights. Ai can't be smart if operates just on one dimensional context.

I don't jerk off because I remember that I did this yesterday nor because I noted down to do this today. So instead of summarizing, there should be a weighted interpretation.

Other example if saw a movie very long ago, you might not remember the plot but whether you liked it (at that time).

something like

if John jerked off 1,2,3,4 days ago...) the information gets condensed to John likes jerking off everyday. Then if he skips a day it would become he almost jerks off every day. Susy catches him multiple times and 'John is a pervert' becomes a fact (more weight, kept longer). If this story goes on for a long time most of this will get pushed out of summary unless there is a weight to all of this. Maybe all that remains of Susy is that she is John's sister and she thinks of him as a pervert instead of something like 'John's sister caught him jerking off a while ago', but at a later point another sister is introduced'

This would also replace definition because definitions can change. If John looses an arm the definition that he has an arm becomes redundant.

Thanks for reading my blogpost

how is this different from genning summary?

I don't think you can summarize in parallel because individual tokens are created sequentially but weighted in full context.

My pleb expertise says the design is wrong and instead of having word tokens, it should also have sentence and story "super tokens" or any kind of structure or weights. Ai can't be smart if operates just on one dimensional context.

I don't jerk off because I remember that I did this yesterday nor because I noted down to do this today. So instead of summarizing, there should be a weighted interpretation.

Other example if saw a movie very long ago, you might not remember the plot but whether you liked it (at that time).

something like

John:{

history: {1_day_ago{jerked off}}

facts: black hair, blue eyes, wears leather jacket, has a sister

assumption: maybe he likes jerking off

}

Susy:{

history:{1_day_ago:{saw John jerking off, called him a pervert}}

facts: sister of John

assumption: thinks John is pervert, dislikes John

}

if John jerked off 1,2,3,4 days ago...) the information gets condensed to John likes jerking off everyday. Then if he skips a day it would become he almost jerks off every day. Susy catches him multiple times and 'John is a pervert' becomes a fact (more weight, kept longer). If this story goes on for a long time most of this will get pushed out of summary unless there is a weight to all of this. Maybe all that remains of Susy is that she is John's sister and she thinks of him as a pervert instead of something like 'John's sister caught him jerking off a while ago', but at a later point another sister is introduced'

This would also replace definition because definitions can change. If John looses an arm the definition that he has an arm becomes redundant.

Thanks for reading my blogpost

Anonymous 01/14/25(Tue)13:35:56 No.103893353

>>103893165

I am pretty sure MLP followed the same trajectory as well, I see them a whole lot less then a mere decade ago.

I am pretty sure MLP followed the same trajectory as well, I see them a whole lot less then a mere decade ago.

Anonymous 01/14/25(Tue)13:37:03 No.103893372

>>103893326

It's by a literal nobody Chinese firm with no information about them. The fact that they don't even list how many tokens they trained on makes me think it's severely undertrained on trained on benchmarks to hit those scores.

It's by a literal nobody Chinese firm with no information about them. The fact that they don't even list how many tokens they trained on makes me think it's severely undertrained on trained on benchmarks to hit those scores.

Anonymous 01/14/25(Tue)13:38:32 No.103893387

Anonymous 01/14/25(Tue)13:41:21 No.103893417

>>103893051

>You awaken—or perhaps you simply become aware—in a place that defies comprehension.

>You drift—or perhaps you simply will yourself to move—through the Infinite Void

>The golden light emanating from the pinnacle casts long shadows

>The golden light from the pinnacle above grows brighter

>floating islands and crumbling structures bathed in the golden light that emanates from the pinnacle

>You must decide what to do next

>You must decide how to proceed.

>You must decide how to approach this discovery

>You must decide how to proceed.

>You must decide how to interact with it.

it's a bit less conspicuous because your have long replies and each message moves the plot forward, but it's still going to become tedious to read sonner than later

>You awaken—or perhaps you simply become aware—in a place that defies comprehension.

>You drift—or perhaps you simply will yourself to move—through the Infinite Void

>The golden light emanating from the pinnacle casts long shadows

>The golden light from the pinnacle above grows brighter

>floating islands and crumbling structures bathed in the golden light that emanates from the pinnacle

>You must decide what to do next

>You must decide how to proceed.

>You must decide how to approach this discovery

>You must decide how to proceed.

>You must decide how to interact with it.

it's a bit less conspicuous because your have long replies and each message moves the plot forward, but it's still going to become tedious to read sonner than later

Anonymous 01/14/25(Tue)13:41:55 No.103893424

>>103893387

Yea, minimax

Yea, minimax

Anonymous 01/14/25(Tue)13:42:06 No.103893425

>>103893372

I mean, you could've said this about Deepseek the company 3 months ago. Advances are increasingly in open source being driven by smaller firms.

I mean, you could've said this about Deepseek the company 3 months ago. Advances are increasingly in open source being driven by smaller firms.

Anonymous 01/14/25(Tue)13:42:10 No.103893426

>>103893387

Are they?

Are they?

Anonymous 01/14/25(Tue)13:46:20 No.103893461

>>103893426

No they just happen to be called minimax

No they just happen to be called minimax

Anonymous 01/14/25(Tue)13:46:31 No.103893463

>>103893160

>Hate to say it, but Miku is a niche character at this point.

the most popular vocaloid videos still get 100M+ views...somewhere around a mid-tier mr.beast video. I don't think its dying, but its not leading-edge culture any more.

I think you'll still find orders of magnitude more normies that could identify miku vs even the most well-known touhou character

>Hate to say it, but Miku is a niche character at this point.

the most popular vocaloid videos still get 100M+ views...somewhere around a mid-tier mr.beast video. I don't think its dying, but its not leading-edge culture any more.

I think you'll still find orders of magnitude more normies that could identify miku vs even the most well-known touhou character

Anonymous 01/14/25(Tue)13:49:03 No.103893488

>>103893387

Well, they're definitely up with the best in any case.

Well, they're definitely up with the best in any case.

Anonymous 01/14/25(Tue)13:49:36 No.103893492

>>103893417

>but it's still going to become tedious to read sonner than later

fair enough. I'd easily give the "you must.." prompt a pass since its told to be an interpreter, but maybe the others are annoying?

I can't think of another model I've used that is less prone to that type of prosal repetition though. What's your go-to for repetition-free outputs?

>but it's still going to become tedious to read sonner than later

fair enough. I'd easily give the "you must.." prompt a pass since its told to be an interpreter, but maybe the others are annoying?

I can't think of another model I've used that is less prone to that type of prosal repetition though. What's your go-to for repetition-free outputs?

Anonymous 01/14/25(Tue)13:50:24 No.103893497

>>103893274

How is it agi if it can't do something a human can do easily?

How is it agi if it can't do something a human can do easily?

Anonymous 01/14/25(Tue)13:52:02 No.103893512

>>103893497

That's part of my point, yes.

That's part of my point, yes.

Anonymous 01/14/25(Tue)13:53:10 No.103893528

>>103893512

Ah I see, I should have said that to who you replied to.

Ah I see, I should have said that to who you replied to.

Anonymous 01/14/25(Tue)13:53:16 No.103893531

Anonymous 01/14/25(Tue)13:54:49 No.103893548

>>103893070

are you retarded?

Open new miku MV, it already has million of view. If you combine all Miku songs you probably get more total views than any human artist (yes total views, I'm not saying Miku is the single most popular thing ever). Miku is probably also one of the chars with most art and most importantly she is around for 20 years which automatically makes her more relevant than any recent popular flavor of the month

Quick look at r34 tells us has like 20k art, for comparison d.va has 23k

Not that impressive you say?

r34 is very much a western site and just porn. On Sankaku she has 171k versus just 21k for d.va.

She is omnipresent in music, art, porn (spawned its own category of porn) and has tons of cameos in various media. If your dataset mentions anything weeb related its unlikely to not contain Miku.

are you retarded?

Open new miku MV, it already has million of view. If you combine all Miku songs you probably get more total views than any human artist (yes total views, I'm not saying Miku is the single most popular thing ever). Miku is probably also one of the chars with most art and most importantly she is around for 20 years which automatically makes her more relevant than any recent popular flavor of the month

Quick look at r34 tells us has like 20k art, for comparison d.va has 23k

Not that impressive you say?

r34 is very much a western site and just porn. On Sankaku she has 171k versus just 21k for d.va.

She is omnipresent in music, art, porn (spawned its own category of porn) and has tons of cameos in various media. If your dataset mentions anything weeb related its unlikely to not contain Miku.

Anonymous 01/14/25(Tue)13:57:12 No.103893569

Lightning Attention, Softmax Attention and Mixture-of-Experts (MoE). Leveraging advanced parallel strategies and innovative compute-communication overlap methods—such as Linear Attention Sequence Parallelism Plus (LASP+), varlen ring attention, Expert Tensor Parallel (ETP), etc., MiniMax-Text-01's training context length is extended to 1 million tokens, and it can handle a context of up to 4 million tokens during the inference

Anonymous 01/14/25(Tue)13:57:24 No.103893570

>>103892992

Nala test please

Nala test please

Anonymous 01/14/25(Tue)13:58:13 No.103893578

>>103893548

holy cope

holy cope

Anonymous 01/14/25(Tue)14:00:55 No.103893600

>>103893578

You're the one coping, old man. Miku is in Fortnite.

You're the one coping, old man. Miku is in Fortnite.

Anonymous 01/14/25(Tue)14:02:25 No.103893611

>>103893569

They tested a lot of new stuff with this. Just wanted to point out.

They tested a lot of new stuff with this. Just wanted to point out.

Anonymous 01/14/25(Tue)14:03:05 No.103893623

>>103893492

it's not just "you must". you're going to see "you must decide how to" for the next 1000 messages. except once it says e.g. "how to approach" for the 2nd and then 3rd time, it's going to actually be "you must decide how to approach ...". and the looping part will keep growing

and I don't really have an alternative recommendation because deepseek is the only "local" model I've ever had a longer chat with.

it's not just "you must". you're going to see "you must decide how to" for the next 1000 messages. except once it says e.g. "how to approach" for the 2nd and then 3rd time, it's going to actually be "you must decide how to approach ...". and the looping part will keep growing

and I don't really have an alternative recommendation because deepseek is the only "local" model I've ever had a longer chat with.

Anonymous 01/14/25(Tue)14:04:05 No.103893630

https://rentry.org/ona836nk

minimax summarization of its own paper. also apparently they have some inhouse benchmarks that judge creative writing lol.

minimax summarization of its own paper. also apparently they have some inhouse benchmarks that judge creative writing lol.

Anonymous 01/14/25(Tue)14:06:22 No.103893654

Anonymous 01/14/25(Tue)14:09:19 No.103893681

>>103893623

>I don't really have an alternative recommendation

The orthodox way is to either improve your initial prompt/character card or edit the first few responses to either delete that, or edit it into a more consistent output

>I don't really have an alternative recommendation

The orthodox way is to either improve your initial prompt/character card or edit the first few responses to either delete that, or edit it into a more consistent output

Anonymous 01/14/25(Tue)14:09:34 No.103893684

>>103893488

>Wolf (dog adjacent)

>Not instantly going for the food on the table

That's how you can tell that this video is AI generated, any canine would instantly beeline towards the food and cuddle with you afterwards. Not the other way around.

>Wolf (dog adjacent)

>Not instantly going for the food on the table

That's how you can tell that this video is AI generated, any canine would instantly beeline towards the food and cuddle with you afterwards. Not the other way around.

Anonymous 01/14/25(Tue)14:09:36 No.103893685

>>103893274

LLMs will NEVER become AGI, they're not even AI. YWNBAI

LLMs will NEVER become AGI, they're not even AI. YWNBAI

Anonymous 01/14/25(Tue)14:10:39 No.103893692

I hate furfags they ruin everything that is good in this world

Anonymous 01/14/25(Tue)14:11:27 No.103893700

>>103893685

I agree 100% my guy. Doesn't lessen my point in the least however.

I agree 100% my guy. Doesn't lessen my point in the least however.

Anonymous 01/14/25(Tue)14:11:59 No.103893707

>>103893274

LLM's would be just one part of AGI, in the same way the language centers in our brain are just one part of the brain. Many other systems would have to work in conjunction with LLM's before it could be called an AGI. LLM's on their own will never be called that.

LLM's would be just one part of AGI, in the same way the language centers in our brain are just one part of the brain. Many other systems would have to work in conjunction with LLM's before it could be called an AGI. LLM's on their own will never be called that.

Anonymous 01/14/25(Tue)14:13:55 No.103893724

Just tried the roleplaying thing from the op. Came to it in a totally cynicle mind but horry shit

Anonymous 01/14/25(Tue)14:15:59 No.103893742

>>103893707

Yeah. I've said that in this general a couple of times too.

AGI needs to be a complex system much like a brain, with different complex parts that do different things, even if all the different parts are also neural networks of their own.

Of course, you take a transformers LLM and look inside it and it does have its own complex blocks, so you could begin expanding from inside the LLM into something bigger and still call it a LLM, but it would be something much more complicated than just the token prediction machines we have today.

Yeah. I've said that in this general a couple of times too.

AGI needs to be a complex system much like a brain, with different complex parts that do different things, even if all the different parts are also neural networks of their own.

Of course, you take a transformers LLM and look inside it and it does have its own complex blocks, so you could begin expanding from inside the LLM into something bigger and still call it a LLM, but it would be something much more complicated than just the token prediction machines we have today.

Anonymous 01/14/25(Tue)14:16:05 No.103893744

>>103893707

Doesn't gpt incorporate some form of fusion behind the scenes with code based instructions? Like web searching in some form

Doesn't gpt incorporate some form of fusion behind the scenes with code based instructions? Like web searching in some form

Anonymous 01/14/25(Tue)14:16:40 No.103893750

>>103893700

I dont care about your point. I just said what I wanted to. Take it or leave it.

I dont care about your point. I just said what I wanted to. Take it or leave it.

Anonymous 01/14/25(Tue)14:17:02 No.103893755

On ruler, so real context

Anonymous 01/14/25(Tue)14:17:18 No.103893757

>>103893750

base

base

Anonymous 01/14/25(Tue)14:19:13 No.103893773

>>103893259

There's a hidden premise here that isn't quite true. Which is that "truly and utterly unpredictable surprises are present in creative writing". But in fact, creativity of the human kind that people enjoy is not really surprising or unpredictable. The most creative people on the planet are the ones who have a vast wealth of knowledge which they can mix concepts with. That is how you get truly coherent creative surprises rather than utter chaotic randomness that doesn't make sense. So ultimately surprises that also make sense still benefit from an autoregressive prediction training objective. The issue isn't necessarily the training objective, but about whether companies care about training on "low quality" diverse internet data that would lead to creativity in writing.

There's a hidden premise here that isn't quite true. Which is that "truly and utterly unpredictable surprises are present in creative writing". But in fact, creativity of the human kind that people enjoy is not really surprising or unpredictable. The most creative people on the planet are the ones who have a vast wealth of knowledge which they can mix concepts with. That is how you get truly coherent creative surprises rather than utter chaotic randomness that doesn't make sense. So ultimately surprises that also make sense still benefit from an autoregressive prediction training objective. The issue isn't necessarily the training objective, but about whether companies care about training on "low quality" diverse internet data that would lead to creativity in writing.

Anonymous 01/14/25(Tue)14:19:13 No.103893774

Holy fuck we're so back...

Anonymous 01/14/25(Tue)14:21:51 No.103893800

>>103893755

Impressive

Impressive

Anonymous 01/14/25(Tue)14:22:31 No.103893809

Anonymous 01/14/25(Tue)14:22:54 No.103893817

Anonymous 01/14/25(Tue)14:24:17 No.103893828

>>103893774

I can't run this shit. Wonder what the price will be on OR.

I can't run this shit. Wonder what the price will be on OR.

Anonymous 01/14/25(Tue)14:25:12 No.103893839

>>103893488

Man, those hand/paws are pretty good.

Man, those hand/paws are pretty good.

Anonymous 01/14/25(Tue)14:26:18 No.103893858

>>103884327

I just wanted to share my appreciation for this excellent smug Miku gen.

From a smug sommelier with over 2,400 smug anime girl images, I deem this a 10/10 smug gen.

I just wanted to share my appreciation for this excellent smug Miku gen.

From a smug sommelier with over 2,400 smug anime girl images, I deem this a 10/10 smug gen.

Anonymous 01/14/25(Tue)14:27:42 No.103893876

>>103892930

>>103893309

>>103892949

https://github.com/MiniMax-AI/MiniMax-01

blog post:

https://www.minimaxi.com/en/news/minimax-01-series-2

>>103893309

>>103892949

https://github.com/MiniMax-AI/MiniM

blog post:

https://www.minimaxi.com/en/news/mi

Anonymous 01/14/25(Tue)14:27:51 No.103893878

>>103893817

It's snout is much to narrow and ears pointy to be a dog, the only other thing that comes to mind is coyote but it looks more like a wolf than a coyote to me.

It's snout is much to narrow and ears pointy to be a dog, the only other thing that comes to mind is coyote but it looks more like a wolf than a coyote to me.

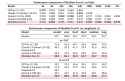

Anonymous 01/14/25(Tue)14:31:20 No.103893911

>>103893654

>Qwen, GPT, Gemini, DeepSeek and Llama beat Sonnet

Who was the evaluator? GPT4?

Let's look at appendix...

First fucking example:

>Whispers of the Lost City

>Human Evaluator:

>The lyrics are effective due to their vivid imagery, emotional depth, and narrative structure. They create a mysterious and atmospheric setting with phrases like "moonbeams" and "ancient walls," while also conveying the emotional journey of the traveler. The repetition in the chorus reinforces the central theme, making the song memorable. The poetic language and space for interpretation add layers of intrigue and emotional resonance, making the song both engaging and thought-provoking.

Example 2:

>In the quaint village of Elderglen, nestled between ancient woods and misty hills, lived a young adventurer named Elara.

>Human Evaluator:

>The story demonstrates strong world-building and an engaging narrative. The concept of Aetheria is imaginative, with vivid descriptions of floating mountains, crystal rivers, and mystical creatures that evoke a sense of wonder... Overall, the story shows strong creative potential, with an imaginative world, a compelling heroine, and an uplifting message.

Ehm... MiniMax team, I have very bad news for you. Your human evaluators offloaded all of their work to GPT4. Please take note and penalize them. Your Benchmarks is pure fucking GPTSLOP that does NOT represent ACTUAL HUMAN PREFERENCE.

>Qwen, GPT, Gemini, DeepSeek and Llama beat Sonnet

Who was the evaluator? GPT4?

Let's look at appendix...

First fucking example:

>Whispers of the Lost City

>Human Evaluator:

>The lyrics are effective due to their vivid imagery, emotional depth, and narrative structure. They create a mysterious and atmospheric setting with phrases like "moonbeams" and "ancient walls," while also conveying the emotional journey of the traveler. The repetition in the chorus reinforces the central theme, making the song memorable. The poetic language and space for interpretation add layers of intrigue and emotional resonance, making the song both engaging and thought-provoking.

Example 2:

>In the quaint village of Elderglen, nestled between ancient woods and misty hills, lived a young adventurer named Elara.

>Human Evaluator:

>The story demonstrates strong world-building and an engaging narrative. The concept of Aetheria is imaginative, with vivid descriptions of floating mountains, crystal rivers, and mystical creatures that evoke a sense of wonder... Overall, the story shows strong creative potential, with an imaginative world, a compelling heroine, and an uplifting message.

Ehm... MiniMax team, I have very bad news for you. Your human evaluators offloaded all of their work to GPT4. Please take note and penalize them. Your Benchmarks is pure fucking GPTSLOP that does NOT represent ACTUAL HUMAN PREFERENCE.

Anonymous 01/14/25(Tue)14:32:28 No.103893927

Guess I'll be getting a digit when it comes out. If that turns out to be shit then I guess Ill be getting a DDR5 server

Anonymous 01/14/25(Tue)14:32:45 No.103893931

>>103893911

lel that's pretty egregious

lel that's pretty egregious

Anonymous 01/14/25(Tue)14:33:24 No.103893939

>>103892992

>400B

>MoE

This is the perfect sweet spot between DSV3 and 405B. llama.cpp support and Q4 ggufs please and thank you.

>400B

>MoE

This is the perfect sweet spot between DSV3 and 405B. llama.cpp support and Q4 ggufs please and thank you.

Anonymous 01/14/25(Tue)14:33:34 No.103893943

>>103893911

Jesus Christ.

>human evaluator

Did the human evaluator cheat by running it through an LLM instead of doing their job?

Honestly I was thinking of working for one of those data labeling companies and just using an LLM to do it for me so I could see this happening for real.