/lmg/ - Local Models General

Anonymous 01/16/25(Thu)12:11:14 | 365 comments | 38 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103911431 & >>103903120

►News

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/outetts-03-6786b1ebc7aeb757bc17a2fa

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b-instruct

>(01/14) MiniMax-Text-01 released with 456B-A45.9B & hybrid-lightning attention: https://hf.co/MiniMaxAI/MiniMax-Text-01

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103911431 & >>103903120

►News

>(01/16) OuteTTS-0.3 released with voice cloning & punctuation support: https://hf.co/collections/OuteAI/ou

>(01/15) InternLM3-8B-Instruct released with deep thinking capability: https://hf.co/internlm/internlm3-8b

>(01/14) MiniMax-Text-01 released with 456B-A45.9B & hybrid-lightning attention: https://hf.co/MiniMaxAI/MiniMax-Tex

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/16/25(Thu)12:11:38 No.103919243

►Recent Highlights from the Previous Thread: >>103911431

--Meta's use of copyrighted data in Llama model training exposed in lawsuit:

>103916280 >103916346 >103916417 >103916591 >103917039 >103917124 >103917268 >103917341 >103916367 >103916380 >103917167 >103917182 >103917193

--Effectiveness of RP and creativity finetunes for AI models:

>103913748 >103913777 >103913843

--2025 AI landscape discussion, new models and developments:

>103912377 >103912403 >103912427 >103912475 >103912487 >103912536 >103912550 >103912599 >103912786 >103913234 >103913661 >103913674 >103913729 >103914148 >103914026 >103913004 >103914360 >103915077

--Lawsuits targeting AI models and fair use of copyrighted data:

>103917108 >103917145 >103917531 >103917593 >103917645 >103917673 >103917589 >103917646 >103917714 >103917652 >103917688 >103917636 >103917675 >103917691

--LibGen and Meta's use of copyrighted material for AI training:

>103915488 >103915562 >103915625 >103915637 >103915659 >103917356 >103915681 >103915667 >103915755 >103916415

--Meta's Llama 4 and the potential impact of Titans, lawsuits, and copyright regulations:

>103911533 >103911545 >103911571 >103911592 >103914219 >103911660 >103911687 >103911753 >103911775 >103913569

--Silly Tavern on Single Board Computer (SBC) guide completed:

>103915998 >103917338 >103917096 >103917203 >103917689 >103917408 >103917435

--DS3 Q6 RAM usage and hardware discussion:

>103917978 >103918043 >103918079 >103918197 >103918356 >103918820 >103919058 >103918131 >103918437 >103918496 >103918521 >103918658 >103918529 >103918541

--Anon discusses paper on human-like responses in LLMs:

>103915716 >103915740 >103915751 >103916063 >103915997 >103916053

--Dev tests new lorebook feature with dropdown menu:

>103911541 >103912916

--Miku (free space):

>103911701 >103915791 >103915894 >103915998 >103916959 >103917239 >103918964

►Recent Highlight Posts from the Previous Thread: >>103911436

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--Meta's use of copyrighted data in Llama model training exposed in lawsuit:

>103916280 >103916346 >103916417 >103916591 >103917039 >103917124 >103917268 >103917341 >103916367 >103916380 >103917167 >103917182 >103917193

--Effectiveness of RP and creativity finetunes for AI models:

>103913748 >103913777 >103913843

--2025 AI landscape discussion, new models and developments:

>103912377 >103912403 >103912427 >103912475 >103912487 >103912536 >103912550 >103912599 >103912786 >103913234 >103913661 >103913674 >103913729 >103914148 >103914026 >103913004 >103914360 >103915077

--Lawsuits targeting AI models and fair use of copyrighted data:

>103917108 >103917145 >103917531 >103917593 >103917645 >103917673 >103917589 >103917646 >103917714 >103917652 >103917688 >103917636 >103917675 >103917691

--LibGen and Meta's use of copyrighted material for AI training:

>103915488 >103915562 >103915625 >103915637 >103915659 >103917356 >103915681 >103915667 >103915755 >103916415

--Meta's Llama 4 and the potential impact of Titans, lawsuits, and copyright regulations:

>103911533 >103911545 >103911571 >103911592 >103914219 >103911660 >103911687 >103911753 >103911775 >103913569

--Silly Tavern on Single Board Computer (SBC) guide completed:

>103915998 >103917338 >103917096 >103917203 >103917689 >103917408 >103917435

--DS3 Q6 RAM usage and hardware discussion:

>103917978 >103918043 >103918079 >103918197 >103918356 >103918820 >103919058 >103918131 >103918437 >103918496 >103918521 >103918658 >103918529 >103918541

--Anon discusses paper on human-like responses in LLMs:

>103915716 >103915740 >103915751 >103916063 >103915997 >103916053

--Dev tests new lorebook feature with dropdown menu:

>103911541 >103912916

--Miku (free space):

>103911701 >103915791 >103915894 >103915998 >103916959 >103917239 >103918964

►Recent Highlight Posts from the Previous Thread: >>103911436

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/16/25(Thu)12:15:05 No.103919281

Anonymous 01/16/25(Thu)12:15:13 No.103919284

I suspect Gemma3 is going to be a local Gemini-2.0-Flash-Lite so probably most of you will know already what to expect.

Anonymous 01/16/25(Thu)12:15:51 No.103919295

>>103919284

No fucking way Gemma will be anywhere near as fun as Gemini

No fucking way Gemma will be anywhere near as fun as Gemini

Anonymous 01/16/25(Thu)12:17:30 No.103919323

>More voice stuff

Okay that's great and all but are there any """user friendly""" options out there for it yet? Not asking for anything hyper normalfag tier, but so far it felt like voice stuff is more complicated than text, img, vid or even the one (?) music thing we have.

Granted I could be terrible wrong since I haven't looked into this stuff a lot in the last months.

>MiniMax-Text-01

This thing usable with the usual tools yet, or do you need a special set up for early usage?

Okay that's great and all but are there any """user friendly""" options out there for it yet? Not asking for anything hyper normalfag tier, but so far it felt like voice stuff is more complicated than text, img, vid or even the one (?) music thing we have.

Granted I could be terrible wrong since I haven't looked into this stuff a lot in the last months.

>MiniMax-Text-01

This thing usable with the usual tools yet, or do you need a special set up for early usage?

Anonymous 01/16/25(Thu)12:20:15 No.103919361

>>103919295

Why not? Being "fun" isn't really a matter of parameters, it's mostly finetuning direction, and Gemma 2 was already sort of crazy with a suitable prefill/low-depth instruction (although I swear even the 27B version turns into a 2B model during explicit ERP).

Why not? Being "fun" isn't really a matter of parameters, it's mostly finetuning direction, and Gemma 2 was already sort of crazy with a suitable prefill/low-depth instruction (although I swear even the 27B version turns into a 2B model during explicit ERP).

Anonymous 01/16/25(Thu)12:21:44 No.103919383

>>103919284

It's going to be gimped in some way again compared to other models because fuck you.

It's going to be gimped in some way again compared to other models because fuck you.

Anonymous 01/16/25(Thu)12:22:41 No.103919392

>>103919323

GPT-SoVITS is still the king. For MiniMax, just wait

GPT-SoVITS is still the king. For MiniMax, just wait

Anonymous 01/16/25(Thu)12:22:46 No.103919393

I can feel it. We're getting a big new release today.

Anonymous 01/16/25(Thu)12:23:28 No.103919404

>>103919392

He asked for easy to use, not the best.

He asked for easy to use, not the best.

Anonymous 01/16/25(Thu)12:26:31 No.103919446

*tap tap tap* https://huggingface.co/spaces/hexgrad/Kokoro-TTS

Anonymous 01/16/25(Thu)12:26:32 No.103919447

Anonymous 01/16/25(Thu)12:27:03 No.103919455

Anonymous 01/16/25(Thu)12:30:26 No.103919491

>>103919383

If it has text+image capabilities and some unusual attention mechanism, it's going to take forever for proper implementation into the existing open source backends, that's for sure.

If it has text+image capabilities and some unusual attention mechanism, it's going to take forever for proper implementation into the existing open source backends, that's for sure.

Anonymous 01/16/25(Thu)12:33:54 No.103919529

>>103919404

https://huggingface.co/lj1995/GPT-SoVITS-windows-package/resolve/main/GPT-SoVITS-v2-240821.7z?download=true

Download & unarchive on windows, also run a few commands on linux.

conda create -n GPTSoVits python=3.9

conda activate GPTSoVits

bash install.sh

How hard is that?

https://huggingface.co/lj1995/GPT-S

Download & unarchive on windows, also run a few commands on linux.

conda create -n GPTSoVits python=3.9

conda activate GPTSoVits

bash install.sh

How hard is that?

Anonymous 01/16/25(Thu)12:34:48 No.103919542

>>103919514

>I am not afraid to admit that I love Hatsune Miku and I am trans. Everyone here should be proud if they love Miku and are trans.

this has to be the most ham-fisted false flag I've ever seen in my life

>I am not afraid to admit that I love Hatsune Miku and I am trans. Everyone here should be proud if they love Miku and are trans.

this has to be the most ham-fisted false flag I've ever seen in my life

Anonymous 01/16/25(Thu)12:35:52 No.103919548

>>103919529

>conda: The term 'conda' is not recognized as a name of a cmdlet, function, script file, or executable program.

>Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

Hard enough apparently.

>conda: The term 'conda' is not recognized as a name of a cmdlet, function, script file, or executable program.

>Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

Hard enough apparently.

Anonymous 01/16/25(Thu)12:37:08 No.103919554

>>103919514

Based

Based

Anonymous 01/16/25(Thu)12:37:44 No.103919561

>>103919542

sounds like you admit that troons are subversive and pretend miku isn't a troon icon.

sounds like you admit that troons are subversive and pretend miku isn't a troon icon.

Anonymous 01/16/25(Thu)12:38:10 No.103919568

>>103919514

This, but unironically. But I'm not a proper Trans since I live in a poor country and don't have money for hrt.

This, but unironically. But I'm not a proper Trans since I live in a poor country and don't have money for hrt.

Anonymous 01/16/25(Thu)12:38:26 No.103919572

>>103919548

found an ollama user

found an ollama user

Anonymous 01/16/25(Thu)12:39:08 No.103919581

>>103919548

>conda

I hate conda (almost as much as I hate docker), but it was the only way I was able to make sovits work. a simple venv was useless.

You'll need to eg. add the conda repo if you're on debian/ubuntu

>conda

I hate conda (almost as much as I hate docker), but it was the only way I was able to make sovits work. a simple venv was useless.

You'll need to eg. add the conda repo if you're on debian/ubuntu

Anonymous 01/16/25(Thu)12:42:56 No.103919614

>>103919568

Contact your local authorities, they may give it to you for free and you don't know it. I live in Northeast Asia and that's the way they usually do it.

Contact your local authorities, they may give it to you for free and you don't know it. I live in Northeast Asia and that's the way they usually do it.

Anonymous 01/16/25(Thu)12:46:51 No.103919656

>>103919514

That's why Makise General was better.

That's why Makise General was better.

Anonymous 01/16/25(Thu)12:48:13 No.103919680

Anonymous 01/16/25(Thu)12:49:18 No.103919695

>>103919680

Mongolian more likely

Mongolian more likely

Anonymous 01/16/25(Thu)12:50:43 No.103919707

These nemoguard datasets could be a decent framework for dataset injection.

Anonymous 01/16/25(Thu)13:01:32 No.103919871

>>103919581

>conda had one job: to contain everything in the project's folder, so you can delete and start over.

>~/.conda/envs/GPTSoVits

>conda had one job: to contain everything in the project's folder, so you can delete and start over.

>~/.conda/envs/GPTSoVits

Anonymous 01/16/25(Thu)13:02:49 No.103919889

>>103919871

cute snek

cute snek

Anonymous 01/16/25(Thu)13:04:23 No.103919912

>>103919871

derp snek

derp snek

Anonymous 01/16/25(Thu)13:06:27 No.103919946

Finally, transformers 2.0 (again)

https://sakana.ai/transformer-squared/

https://sakana.ai/transformer-squar

Anonymous 01/16/25(Thu)13:07:40 No.103919969

So can you run a decent chatbot on a normal gpu

Anonymous 01/16/25(Thu)13:08:58 No.103919992

Anonymous 01/16/25(Thu)13:09:42 No.103920000

>>103919946

TRANSFORMER TWO BABY!

>>103919969

Depends on how retarded you want to define "decent chatbot", been able to do that for quite a while now

TRANSFORMER TWO BABY!

>>103919969

Depends on how retarded you want to define "decent chatbot", been able to do that for quite a while now

Anonymous 01/16/25(Thu)13:10:00 No.103920004

>>103919969

A 24gb one? You probably need two, 24gb of VRAM + RAM isn't much faster than just using fast RAM. But you can use EVA 0.0 70B with two 24gb cards.

A 24gb one? You probably need two, 24gb of VRAM + RAM isn't much faster than just using fast RAM. But you can use EVA 0.0 70B with two 24gb cards.

Anonymous 01/16/25(Thu)13:10:11 No.103920006

>>103919992

hardmaru is an OG thoughever

hardmaru is an OG thoughever

Anonymous 01/16/25(Thu)13:10:47 No.103920017

>>103920004

based misleader destroying another retards hopes and dreams

based misleader destroying another retards hopes and dreams

Anonymous 01/16/25(Thu)13:12:26 No.103920039

Anonymous 01/16/25(Thu)13:14:03 No.103920067

>>103920039

Don't listen to that guy, you can run lots of good models and having a gpu IS faster than--

>AMD

Never mind, sorry.

Don't listen to that guy, you can run lots of good models and having a gpu IS faster than--

>AMD

Never mind, sorry.

Anonymous 01/16/25(Thu)13:14:13 No.103920072

>>103919969

70B models run at ~2 t/s on a single good GPU and 64GB DDR5. Pretty tolerable if you care more about quality than speed.

70B models run at ~2 t/s on a single good GPU and 64GB DDR5. Pretty tolerable if you care more about quality than speed.

Anonymous 01/16/25(Thu)13:14:25 No.103920074

>>103920039

>AMD

And what is HE, doing here? HE LOST!? At least you have 16GB, that gets you a long way, just not as fast as on nvidia.

>AMD

And what is HE, doing here? HE LOST!? At least you have 16GB, that gets you a long way, just not as fast as on nvidia.

Anonymous 01/16/25(Thu)13:14:26 No.103920075

Local is saved thanks to AID!

https://huggingface.co/LatitudeGames/Wayfarer-12B

>We’ve heard over and over from AI Dungeon players that modern AI models are too nice, never letting them fail or die. While it may be good for a chatbot to be nice and helpful, great stories and games aren’t all rainbows and unicorns. They have conflict, tension, and even death. These create real stakes and consequences for characters and the journeys they go on.

>However, the vast majority of AI models, through alignment RLHF, have been trained away from darkness, violence, or conflict, preventing them from fulfilling this role. To give our players better options, we decided to train our own model to fix these issues.

>Wayfarer is an adventure role-play model specifically trained to give players a challenging and dangerous experience. We thought they would like it, but since releasing it on AI Dungeon, players have reacted even more positively than we expected.

>Because they loved it so much, we’ve decided to open-source the model so anyone can experience unforgivingly brutal AI adventures! Anyone can download the model to run locally.

>Or if you want to easily try this model for free, you can do so at https://aidungeon.com.

>Thanks to Gryphe Padar for collaborating on this finetune with us!

https://huggingface.co/LatitudeGame

>We’ve heard over and over from AI Dungeon players that modern AI models are too nice, never letting them fail or die. While it may be good for a chatbot to be nice and helpful, great stories and games aren’t all rainbows and unicorns. They have conflict, tension, and even death. These create real stakes and consequences for characters and the journeys they go on.

>However, the vast majority of AI models, through alignment RLHF, have been trained away from darkness, violence, or conflict, preventing them from fulfilling this role. To give our players better options, we decided to train our own model to fix these issues.

>Wayfarer is an adventure role-play model specifically trained to give players a challenging and dangerous experience. We thought they would like it, but since releasing it on AI Dungeon, players have reacted even more positively than we expected.

>Because they loved it so much, we’ve decided to open-source the model so anyone can experience unforgivingly brutal AI adventures! Anyone can download the model to run locally.

>Or if you want to easily try this model for free, you can do so at https://aidungeon.com.

>Thanks to Gryphe Padar for collaborating on this finetune with us!

Anonymous 01/16/25(Thu)13:15:03 No.103920085

>>103920075

Go away

Go away

Anonymous 01/16/25(Thu)13:15:26 No.103920088

>>103920039

https://huggingface.co/MarsupialAI/Cydonia-22B-v1.3_EXL2_4.5bpw

https://github.com/theroyallab/tabbyAPI

https://huggingface.co/MarsupialAI/

https://github.com/theroyallab/tabb

Anonymous 01/16/25(Thu)13:15:27 No.103920090

>>103920085

nyo

nyo

Anonymous 01/16/25(Thu)13:15:48 No.103920098

>>103920075

Is the model going to rape you though?

Is the model going to rape you though?

Anonymous 01/16/25(Thu)13:16:31 No.103920109

>>103920090

GO BACK HO BACK GO GACK TO BACK

GO BACK HO BACK GO GACK TO BACK

Anonymous 01/16/25(Thu)13:16:37 No.103920114

>>103920075

Now this is actual, literal shilling.

Now this is actual, literal shilling.

Anonymous 01/16/25(Thu)13:17:37 No.103920127

>>103920114

Is not tho, just posting the best thing to have popped up on HF timeline in months!

Is not tho, just posting the best thing to have popped up on HF timeline in months!

Anonymous 01/16/25(Thu)13:18:41 No.103920136

>>103920075

Aí Dungeon isn't local

Aí Dungeon isn't local

Anonymous 01/16/25(Thu)13:18:47 No.103920137

Anonymous 01/16/25(Thu)13:19:45 No.103920148

>>103920136

You can download the open model tho, please read on the text general, thank you!

You can download the open model tho, please read on the text general, thank you!

Anonymous 01/16/25(Thu)13:21:03 No.103920171

>>103920148

Fuck off Nick

Fuck off Nick

Anonymous 01/16/25(Thu)13:26:13 No.103920245

Anonymous 01/16/25(Thu)13:26:47 No.103920257

Running silly tavern with Nemo 12b and two characters, they talk and everything is very discriptive in the begging and as it goes on the description really gets reduced and then the 'ai' has less to work with and makes up less things. How can I get more out of it or somehow shake things up?

Anonymous 01/16/25(Thu)13:27:36 No.103920268

Anonymous 01/16/25(Thu)13:32:50 No.103920357

Anonymous 01/16/25(Thu)13:33:13 No.103920364

are these real

"minimum order 5 pieces" or else i'd probably take the plunge and order two

https://www.alibaba.com/product-detail/RTX-4090-48gb-graphics-card-for_1601296145448.html

"minimum order 5 pieces" or else i'd probably take the plunge and order two

https://www.alibaba.com/product-det

Anonymous 01/16/25(Thu)13:33:15 No.103920365

So, did anyone actually try Negative Llama?

Anonymous 01/16/25(Thu)13:33:45 No.103920371

Forget best overall model, what local model does your fetish best?

For me, it's bagel-mistery-tour. Does stomach growling like a goddamn champion, even if it's old. Command-R plus is a close runner up, it's really not afraid to get deranged and it's great at buildup.

For me, it's bagel-mistery-tour. Does stomach growling like a goddamn champion, even if it's old. Command-R plus is a close runner up, it's really not afraid to get deranged and it's great at buildup.

Anonymous 01/16/25(Thu)13:34:22 No.103920386

Anonymous 01/16/25(Thu)13:34:32 No.103920391

When will it stop being so boring?

Anonymous 01/16/25(Thu)13:36:05 No.103920417

>>103920386

Exactly! But rumor has it bigger models in the same style may be on their way!

Exactly! But rumor has it bigger models in the same style may be on their way!

Anonymous 01/16/25(Thu)13:37:21 No.103920436

Anonymous 01/16/25(Thu)13:37:23 No.103920439

>>103920088

so this thing is 22B? So it's better than NovelAI base AI?

so this thing is 22B? So it's better than NovelAI base AI?

Anonymous 01/16/25(Thu)13:39:52 No.103920481

Anonymous 01/16/25(Thu)13:41:19 No.103920502

Anonymous 01/16/25(Thu)13:46:57 No.103920589

My kitten likes sitting on my puter while I'm running inference because it's so warm...

Anonymous 01/16/25(Thu)13:47:06 No.103920593

>>103920075

This is surprisingly good, 0 slop too.

This is surprisingly good, 0 slop too.

Anonymous 01/16/25(Thu)13:47:19 No.103920597

>>103920075

While Wayfarer’s focus on conflict and danger is appealing to some, it raises ethical questions about the potential for promoting violence or harmful content. AI Dungeon will need to ensure that the model is used responsibly and that safeguards are in place to prevent misuse.

There may be concerns about the implications of promoting violence or darker themes in gaming. It's crucial to approach these themes thoughtfully and ensure that they are handled in a way that is respectful and responsible.

While Wayfarer’s focus on conflict and danger is appealing to some, it raises ethical questions about the potential for promoting violence or harmful content. AI Dungeon will need to ensure that the model is used responsibly and that safeguards are in place to prevent misuse.

There may be concerns about the implications of promoting violence or darker themes in gaming. It's crucial to approach these themes thoughtfully and ensure that they are handled in a way that is respectful and responsible.

Anonymous 01/16/25(Thu)13:48:48 No.103920621

>>103920593

>This is surprisingly good, 0 slop too.

Makes sense, all their data is probably from before the grand GPTslop Impact.

>This is surprisingly good, 0 slop too.

Makes sense, all their data is probably from before the grand GPTslop Impact.

Anonymous 01/16/25(Thu)13:48:58 No.103920624

>>103920589

poast pussy

poast pussy

Anonymous 01/16/25(Thu)13:50:17 No.103920642

>>103920597

Bruh, it’s pixels and vibes, not deep lore on morality. People ain’t here for some G-rated snoozefest—they want that edge. Let the AI go feral. Yeah, slap some safeties on there, sure, but don’t neuter the whole thing ‘cause some NPCs got triggered. Fiction’s fake, move on.

Bruh, it’s pixels and vibes, not deep lore on morality. People ain’t here for some G-rated snoozefest—they want that edge. Let the AI go feral. Yeah, slap some safeties on there, sure, but don’t neuter the whole thing ‘cause some NPCs got triggered. Fiction’s fake, move on.

Anonymous 01/16/25(Thu)13:50:34 No.103920645

>>103920621

>Makes sense, all their data is probably from before the grand GPTslop Impact.

Actually no, they used synth data, but they know what they're doing, they worked with Gryphe after all

>Wayfarer’s text adventure data was generated by simulating playthroughs of published character creator scenarios from AI Dungeon. Five distinct user archetypes played through each scenario, whose character starts all varied in faction, location, etc. to generate five unique samples.

>One language model played the role of narrator, with the other playing the user. They were blind to each other’s underlying logic, so the user was actually capable of surprising the narrator with their choices. Each simulation was allowed to run for 8k tokens or until the main character died.

>Makes sense, all their data is probably from before the grand GPTslop Impact.

Actually no, they used synth data, but they know what they're doing, they worked with Gryphe after all

>Wayfarer’s text adventure data was generated by simulating playthroughs of published character creator scenarios from AI Dungeon. Five distinct user archetypes played through each scenario, whose character starts all varied in faction, location, etc. to generate five unique samples.

>One language model played the role of narrator, with the other playing the user. They were blind to each other’s underlying logic, so the user was actually capable of surprising the narrator with their choices. Each simulation was allowed to run for 8k tokens or until the main character died.

Anonymous 01/16/25(Thu)13:51:05 No.103920653

>>103920075

I am downloading now and I will post in this thread that it is complete shit when it fails to tickle my penis the way I like it.

I am downloading now and I will post in this thread that it is complete shit when it fails to tickle my penis the way I like it.

Anonymous 01/16/25(Thu)13:51:50 No.103920665

Anonymous 01/16/25(Thu)13:52:26 No.103920676

>>103920624

Here she is in my coat! Don't have any on computer pics, right now. But she's really good.

Here she is in my coat! Don't have any on computer pics, right now. But she's really good.

Anonymous 01/16/25(Thu)13:54:36 No.103920702

>>103920676

That's one long cat.

That's one long cat.

Anonymous 01/16/25(Thu)13:57:41 No.103920738

>>103920665

>https://github.com/sgl-project/sglang/pull/2911

Cool, glad there's some traction somewhere. Thanks!

>triton

oh...

>https://github.com/sgl-project/sgl

Cool, glad there's some traction somewhere. Thanks!

>triton

oh...

Anonymous 01/16/25(Thu)13:58:14 No.103920750

>>103920738

you can install triton on windows

you can install triton on windows

Anonymous 01/16/25(Thu)13:58:40 No.103920756

>>103920702

Isn't she? Look at this putrid thing.

Isn't she? Look at this putrid thing.

Anonymous 01/16/25(Thu)13:59:07 No.103920766

>>103920597

Shut up shut up shut up, if a person can't separate a game and what we do in a functioning society it's a problem with the person and his head.

Shut up shut up shut up, if a person can't separate a game and what we do in a functioning society it's a problem with the person and his head.

Anonymous 01/16/25(Thu)14:01:53 No.103920791

Anonymous 01/16/25(Thu)14:03:11 No.103920804

>>103917108

>They will not improve anymore because they won't get any more training power due to GPU ban.

The chinese found loopholes by renting out nvidia gpus from other countries

>They will not improve anymore because they won't get any more training power due to GPU ban.

The chinese found loopholes by renting out nvidia gpus from other countries

Anonymous 01/16/25(Thu)14:03:38 No.103920808

>>103920766

this, we shouldn't be punished because there's mentally ill people that exist

this, we shouldn't be punished because there's mentally ill people that exist

Anonymous 01/16/25(Thu)14:05:04 No.103920825

>>103920804

Nvidia is always winning it seems

Nvidia is always winning it seems

Anonymous 01/16/25(Thu)14:06:51 No.103920854

>>103920645

>they know what they're doing, they worked with Gryphe after all

I don't see this as a plus

>they know what they're doing, they worked with Gryphe after all

I don't see this as a plus

Anonymous 01/16/25(Thu)14:12:01 No.103920912

>>103920075

>running away from "weird cloaked figure" and begging for my life

>Your words fade into the dark as the figure's footsteps grow deafeningly close. A cold hand clamps onto your shoulder, spinning you around. The hood falls back, revealing a grotesque visage - rotting flesh stretched taut over bone, crimson eyes burning with malevolent glee. The dagger's blade catches the moonlight as it slices towards your throat. Your options are dwindling fast, the forest around you dark and treacherous.

>running away from "weird cloaked figure" and begging for my life

>Your words fade into the dark as the figure's footsteps grow deafeningly close. A cold hand clamps onto your shoulder, spinning you around. The hood falls back, revealing a grotesque visage - rotting flesh stretched taut over bone, crimson eyes burning with malevolent glee. The dagger's blade catches the moonlight as it slices towards your throat. Your options are dwindling fast, the forest around you dark and treacherous.

Anonymous 01/16/25(Thu)14:14:31 No.103920947

>>103920365

Yes, but they're too embarrassed to admit it.

Yes, but they're too embarrassed to admit it.

Anonymous 01/16/25(Thu)14:21:07 No.103921001

holy shit i haven't seen models doing that in a while

Anonymous 01/16/25(Thu)14:34:52 No.103921136

>>103919383

Tiny context?

Tiny context?

Anonymous 01/16/25(Thu)14:35:06 No.103921139

Anonymous 01/16/25(Thu)14:36:00 No.103921148

Anonymous 01/16/25(Thu)14:36:18 No.103921152

>>103920072

Were there speedups recently or something? Or are you talking about a really small quant? Best I've ever done is 1.5T/s.

Were there speedups recently or something? Or are you talking about a really small quant? Best I've ever done is 1.5T/s.

Anonymous 01/16/25(Thu)14:37:25 No.103921164

Anonymous 01/16/25(Thu)14:42:10 No.103921221

>>103920804

>middle east, asian

Useless. All restricted countries, tier 2 countries can only purchase limited amount of GPU compute power.

And the US can downgrade them to tier 3 if their cloud GPU companies try to do business with Chinese companies.

>middle east, asian

Useless. All restricted countries, tier 2 countries can only purchase limited amount of GPU compute power.

And the US can downgrade them to tier 3 if their cloud GPU companies try to do business with Chinese companies.

Anonymous 01/16/25(Thu)14:43:03 No.103921233

>>103921221

you know the US is still the king of the world when they're the only ones who decide who's worthy of GPUs lol

you know the US is still the king of the world when they're the only ones who decide who's worthy of GPUs lol

Anonymous 01/16/25(Thu)14:45:11 No.103921250

>>103921221

Just move Nvidia to another country and the restrictions will disappear.

Just move Nvidia to another country and the restrictions will disappear.

Anonymous 01/16/25(Thu)14:46:19 No.103921264

Anonymous 01/16/25(Thu)14:48:49 No.103921284

>>103921250

>He thinks that the US would EVER Allow nvidia to leave their grasp

>He thinks that the US wouldn't do EVERYTHING in their power to keep them local

lmao

>He thinks that the US would EVER Allow nvidia to leave their grasp

>He thinks that the US wouldn't do EVERYTHING in their power to keep them local

lmao

Anonymous 01/16/25(Thu)14:57:56 No.103921371

>>103921221

Funny how many literal NATO allies are on the restricted list. The US truly doesn't give a fuck about maintaining goodwill anymore. This is the Biden administration too, so it's not some orange man cowboy thing.

Funny how many literal NATO allies are on the restricted list. The US truly doesn't give a fuck about maintaining goodwill anymore. This is the Biden administration too, so it's not some orange man cowboy thing.

Anonymous 01/16/25(Thu)14:58:10 No.103921378

>>103921284

I thought USA was a democracy and the land of the free?

I thought USA was a democracy and the land of the free?

Anonymous 01/16/25(Thu)14:59:54 No.103921391

>>103921378

USA is the land of the greedy jews

USA is the land of the greedy jews

Anonymous 01/16/25(Thu)15:02:36 No.103921409

>>103921221

>first world countries are all Tier 1

>SWITZERLAND is Tier 2

i... what? oddly unexpected for me

>>103921378

Giving a company insanely huge ""incentives"" is perfectly fine under a democracy

>first world countries are all Tier 1

>SWITZERLAND is Tier 2

i... what? oddly unexpected for me

>>103921378

Giving a company insanely huge ""incentives"" is perfectly fine under a democracy

Anonymous 01/16/25(Thu)15:02:43 No.103921411

>>103921264

obessed un-medz schizooidal

obessed un-medz schizooidal

Anonymous 01/16/25(Thu)15:03:29 No.103921421

>>103921409

Switzerland remained neutral toward Russia

Switzerland remained neutral toward Russia

Anonymous 01/16/25(Thu)15:03:50 No.103921425

Anonymous 01/16/25(Thu)15:09:47 No.103921479

Anonymous 01/16/25(Thu)15:09:47 No.103921480

Anonymous 01/16/25(Thu)15:11:50 No.103921502

>>103921421

They're Russian puppets

They're Russian puppets

Anonymous 01/16/25(Thu)15:12:26 No.103921511

>>103921378

"free for me but not for thee" - the US probably

"free for me but not for thee" - the US probably

Anonymous 01/16/25(Thu)15:14:35 No.103921538

>>103921502

meds

meds

Anonymous 01/16/25(Thu)15:16:16 No.103921558

Unsurprisingly, Wayfarer is trash. It's been a while since I've seen a model that can't even follow the explicit meaning of a conversation, much less the subtext.

Anonymous 01/16/25(Thu)15:16:56 No.103921570

>>103920075

Honestly pretty good.

Honestly pretty good.

Anonymous 01/16/25(Thu)15:17:30 No.103921576

>>103921558

It's an adventure model you gooner

It's an adventure model you gooner

Anonymous 01/16/25(Thu)15:18:00 No.103921581

>>103921538

https://www.reuters.com/world/swiss-defend-decision-not-adopt-latest-russia-sanctions-full-2024-10-18/

https://www.reuters.com/world/swiss

Anonymous 01/16/25(Thu)15:18:13 No.103921583

>>103921558

literally not meant for that, like actually

>Wayfarer was trained exclusively on second-person present tense data (using “you”) in a narrative style. Other styles will work as well but may produce suboptimal results.

literally not meant for that, like actually

>Wayfarer was trained exclusively on second-person present tense data (using “you”) in a narrative style. Other styles will work as well but may produce suboptimal results.

Anonymous 01/16/25(Thu)15:18:55 No.103921588

>>103921576

Do your adventures feature no NPCs to talk to?

Do your adventures feature no NPCs to talk to?

Anonymous 01/16/25(Thu)15:18:56 No.103921589

>geopolitics

not very cash AI of you guys

not very cash AI of you guys

Anonymous 01/16/25(Thu)15:19:08 No.103921593

>>103921558

rope yourself, your brain is fried

rope yourself, your brain is fried

Anonymous 01/16/25(Thu)15:19:18 No.103921598

Anonymous 01/16/25(Thu)15:19:43 No.103921603

>>103921588

We all know exactly what kind of "talking" you were trying to do

We all know exactly what kind of "talking" you were trying to do

Anonymous 01/16/25(Thu)15:19:53 No.103921606

>>103921581

>if you decline to actively punish someone, that means you're their puppet

that's not what words mean. that's just the swiss remaining neutral like they always do.

>if you decline to actively punish someone, that means you're their puppet

that's not what words mean. that's just the swiss remaining neutral like they always do.

Anonymous 01/16/25(Thu)15:21:56 No.103921628

Anonymous 01/16/25(Thu)15:22:26 No.103921637

>>103921558

Tested it very briefly and it seemed on par with the other good 12B fine tunes.

Are you comparing it to bigger models?

Tested it very briefly and it seemed on par with the other good 12B fine tunes.

Are you comparing it to bigger models?

Anonymous 01/16/25(Thu)15:25:46 No.103921680

>>103921589

News about restrictions on the purchase of the hardware required for the hobby is _directly_ relevant to the hobby.

News about restrictions on the purchase of the hardware required for the hobby is _directly_ relevant to the hobby.

Anonymous 01/16/25(Thu)15:26:51 No.103921696

>>103921628

>instant dismissal because of who made it and nothing else

>competitor of a company infamous because of the amount of shills they deploy in 4chan

I don't think there's a company as pathetic as NovelAI in the AI space.

>instant dismissal because of who made it and nothing else

>competitor of a company infamous because of the amount of shills they deploy in 4chan

I don't think there's a company as pathetic as NovelAI in the AI space.

Anonymous 01/16/25(Thu)15:32:09 No.103921763

Minimax vs Wayfarer who wins

Anonymous 01/16/25(Thu)15:34:27 No.103921789

>>103921763

one can be ranned without nasas destop

one can be ranned without nasas destop

Anonymous 01/16/25(Thu)15:39:44 No.103921852

Claude AI wants my mobile number on sign up

Anonymous 01/16/25(Thu)15:40:41 No.103921862

Anonymous 01/16/25(Thu)16:07:47 No.103922149

I wish these damn roleplay models would understand context better. Group chats are fun when you got a bunch of characters from the same roleplay universe, but they too often loose track of the conversation or even go into a loop on the same thing.

The worst part is probably when they start talking about things they shouldn't know, or are suddenly in locations they shouldn't be.

The worst part is probably when they start talking about things they shouldn't know, or are suddenly in locations they shouldn't be.

Anonymous 01/16/25(Thu)16:10:14 No.103922170

>>103922149

The models just aren't trained for group chats. They all keep sticking with the alternating user-assistant crap. This isn't how real chats or roleplay work.

The models just aren't trained for group chats. They all keep sticking with the alternating user-assistant crap. This isn't how real chats or roleplay work.

Anonymous 01/16/25(Thu)16:19:15 No.103922265

>>103922170

Unfortunately if some company makes an RP model from scratch it won't be open.

Unfortunately if some company makes an RP model from scratch it won't be open.

Anonymous 01/16/25(Thu)16:24:48 No.103922314

>>103922149

i mean if they are changing locations it means your character cards mention locations, or you don't have enough context to include where the location was mentioned.

try removing locations from cards and i've never tried it but there is a "worlds" feature in silly for which sets a location i think.

i mean if they are changing locations it means your character cards mention locations, or you don't have enough context to include where the location was mentioned.

try removing locations from cards and i've never tried it but there is a "worlds" feature in silly for which sets a location i think.

Anonymous 01/16/25(Thu)16:30:12 No.103922369

>>103922149

>talking about things they shouldn't know

Semi-related, I have to tell models that if I put something outside of quotes then I'm not saying it therefore other characters didn't hear that.

>talking about things they shouldn't know

Semi-related, I have to tell models that if I put something outside of quotes then I'm not saying it therefore other characters didn't hear that.

Anonymous 01/16/25(Thu)16:42:26 No.103922483

https://files.catbox.moe/85jqd5.jpg

Anonymous 01/16/25(Thu)16:55:20 No.103922589

>>103922483

unreasonably squishy teto...

unreasonably squishy teto...

Anonymous 01/16/25(Thu)16:57:00 No.103922607

Anonymous 01/16/25(Thu)17:09:03 No.103922703

>>103922483

You could be giving Teto big wings and keeping her chest flat, I'd like it more like that

You could be giving Teto big wings and keeping her chest flat, I'd like it more like that

Anonymous 01/16/25(Thu)17:14:44 No.103922763

Is there a retard guide for using unsloth on windows?

Anonymous 01/16/25(Thu)17:24:43 No.103922864

>>103922763

Use wsl

Use wsl

Anonymous 01/16/25(Thu)17:32:13 No.103922958

>>103920766

>>103920642

damn actual retards falling for generated posts. getting embarrassed to even post here at this point

>>103920642

damn actual retards falling for generated posts. getting embarrassed to even post here at this point

Anonymous 01/16/25(Thu)17:35:36 No.103923004

>>103922958

I have baited people here a number of times with generated posts just like that one, it's always funny.

I have baited people here a number of times with generated posts just like that one, it's always funny.

Anonymous 01/16/25(Thu)17:46:43 No.103923106

what is the "dirtiest" 12b?

Anonymous 01/16/25(Thu)17:47:24 No.103923116

I finally downloaded the Mistral 22b instruct base model. The rp is decent enough but it has a huge impersonating problem. When I switched to Cydonia the difference was like 8/10 to 1/10.

Is there some kind of preset to prevent this?

Is there some kind of preset to prevent this?

Anonymous 01/16/25(Thu)17:48:13 No.103923125

Anonymous 01/16/25(Thu)17:49:25 No.103923138

>>103923125

I meant instruct base as in the bare instruct model, no finetunes

I meant instruct base as in the bare instruct model, no finetunes

Anonymous 01/16/25(Thu)17:53:56 No.103923180

>>103919239

Will I be okay with 9070's 16GB VRAM or should I invest in a 7900XTX (24gigs)? Will training be impossible on 16?

Will I be okay with 9070's 16GB VRAM or should I invest in a 7900XTX (24gigs)? Will training be impossible on 16?

Anonymous 01/16/25(Thu)17:54:12 No.103923184

Do the gemma models output random india script like gemini does?

>>103923138

He's being pedantic. What he means is that the official instruct tune is a fine tune over the base model.

>>103923138

He's being pedantic. What he means is that the official instruct tune is a fine tune over the base model.

Anonymous 01/16/25(Thu)17:59:08 No.103923228

I gave Wayfarer a try and as I promised I am back to say it didn't tickle my penis the way I wanted. It feels like a competent fine tune as in it does something to the model without overfitting and raping it. But if there is any improvement to regular nemo it is very small.

You can't finetune away the fact that base training removed all the good stuff. You can't fix """"safety"""". Only nuclear fire can save us at this point.

You can't finetune away the fact that base training removed all the good stuff. You can't fix """"safety"""". Only nuclear fire can save us at this point.

Anonymous 01/16/25(Thu)18:00:52 No.103923248

>>103923106

probably unslopnemo/Rocinante v2g

if you cant run command-r or a 30B or 70B its probably best, but its dumb as fuck when compared to higher models

probably unslopnemo/Rocinante v2g

if you cant run command-r or a 30B or 70B its probably best, but its dumb as fuck when compared to higher models

Anonymous 01/16/25(Thu)18:03:42 No.103923292

>>103923248

I know...

i am saving a for a second 3060.

I could probably run cydonia but i am not patient enough for 1.30 secs gen

I know...

i am saving a for a second 3060.

I could probably run cydonia but i am not patient enough for 1.30 secs gen

Anonymous 01/16/25(Thu)18:07:46 No.103923335

Do people actually wait 1 second for 1 token of mistral small? Last time I was using it before I gave up on using anything I was throwing away page after page of output because it was all shit. Would the same happen if I got a second 3/4090?

Anonymous 01/16/25(Thu)18:09:14 No.103923350

>>103919239

How are people even able to fine-tune LLMs? A while back a model called "GPT-4Chan" was popular before fucking face locked it down because "something something it spreads harm" or whatever. Are there any dedicated tools for this that any rando could use on his own consumer (or cloud rented) hardware? Nvidia's recent announcement of "Project Digits" has gotten me interested is LLMs again. Not that I could ever afford one currently but I mostly want to learn more about LLMS and see if fine tuning one on your own is even possible

How are people even able to fine-tune LLMs? A while back a model called "GPT-4Chan" was popular before fucking face locked it down because "something something it spreads harm" or whatever. Are there any dedicated tools for this that any rando could use on his own consumer (or cloud rented) hardware? Nvidia's recent announcement of "Project Digits" has gotten me interested is LLMs again. Not that I could ever afford one currently but I mostly want to learn more about LLMS and see if fine tuning one on your own is even possible

Anonymous 01/16/25(Thu)18:11:58 No.103923364

>>103923350

TL DR is that it doesn't work.

TL DR is that it doesn't work.

Anonymous 01/16/25(Thu)18:12:24 No.103923369

>>103922483

need teto milkies

need teto milkies

Anonymous 01/16/25(Thu)18:14:40 No.103923391

>>103923364

Have you tried? Why is it supposedly shit?

Have you tried? Why is it supposedly shit?

Anonymous 01/16/25(Thu)18:17:28 No.103923412

>>103923350

Look into unsloth and axolotl.

Look into unsloth and axolotl.

Anonymous 01/16/25(Thu)18:18:11 No.103923416

>>103923391

I guess you are right. It absolutely works.

I guess you are right. It absolutely works.

Anonymous 01/16/25(Thu)18:20:18 No.103923437

>>103923228

It's comfy enough to hold me over until something actually big comes out.

It's comfy enough to hold me over until something actually big comes out.

Anonymous 01/16/25(Thu)18:25:17 No.103923488

Which LLM will you use on the big day in 2060?

Anonymous 01/16/25(Thu)18:26:13 No.103923495

i'm fairly positive on titans

the fact that it can do complex reasoning across facts scattered over a large context is exactly the kind of thing that (for me) makes or breaks a story-writing chat

the fact that it can do complex reasoning across facts scattered over a large context is exactly the kind of thing that (for me) makes or breaks a story-writing chat

Anonymous 01/16/25(Thu)18:26:38 No.103923500

One anon brought up trying a hyper fitted finetune a few days back and how it didn't work that well. Here is an idea someone can make a logo for. What about something like a MoE where you train a small router from zero and this router, decides if next token should use default model weights or fine tuned hardcore overfitted model weights. Maybe this way the finetuned weights of the model could be overfitted on new training data but the model would still have a capacity to go back to not being brain damaged and overfitted for tokens that are less related to training data?

Anonymous 01/16/25(Thu)18:31:30 No.103923537

Nvidia just released a model!

And it's just a speculative decoding thing for Llama 8B...

And it's just a speculative decoding thing for Llama 8B...

Anonymous 01/16/25(Thu)18:34:36 No.103923563

Anonymous 01/16/25(Thu)18:35:09 No.103923568

This probably won't interest many people here, but I was playing around with Gemma-2-27B (base model, but the Instruct version acts similarly) in Mikupad and found that it easily outputs HTML documents with simplified formatting, compared to Llama-3.1. Did Google train it on raw or semi-cleaned web pages? Interesting if yes.

Anonymous 01/16/25(Thu)18:39:13 No.103923598

Anonymous 01/16/25(Thu)18:50:35 No.103923688

>>103923568

Gemma 2's tokenizer has some HTML tags, maybe that's to be expected.

Gemma 2's tokenizer has some HTML tags, maybe that's to be expected.

Anonymous 01/16/25(Thu)18:50:38 No.103923689

>>103923537

>>103923598

>This model is not owned or developed by NVIDIA. This model has been developed and built to a third-party’s requirements for this application and use case; see link to Non-NVIDIA

>>103923598

>This model is not owned or developed by NVIDIA. This model has been developed and built to a third-party’s requirements for this application and use case; see link to Non-NVIDIA

Anonymous 01/16/25(Thu)18:58:16 No.103923764

What's next? L4 in 2+ weeks? Leak?

Anonymous 01/16/25(Thu)18:59:01 No.103923782

>>103923688

Tokenizer is trained on the dataset too. HTML tags would only be their own tokens if they appeared frequently in the data, probably they didn't bother cleaning their scrapes

Tokenizer is trained on the dataset too. HTML tags would only be their own tokens if they appeared frequently in the data, probably they didn't bother cleaning their scrapes

Anonymous 01/16/25(Thu)18:59:37 No.103923787

>>103923689

>This model is not owned or developed by NVIDIA. This model has been developed and built to a third-party’s requirements for this application and use case; see link to Non-NVIDIA (Meta-Llama-3.1-8B-Instruct) Model Card

it's llama

>This model is not owned or developed by NVIDIA. This model has been developed and built to a third-party’s requirements for this application and use case; see link to Non-NVIDIA (Meta-Llama-3.1-8B-Instruct) Model Card

it's llama

Anonymous 01/16/25(Thu)18:59:57 No.103923792

>>103923688

If you expect your model to output tons of HTML, that's pretty smart actually.

If you expect your model to output tons of HTML, that's pretty smart actually.

Anonymous 01/16/25(Thu)19:02:44 No.103923812

>8B

yawn, even the best models of that size are unusably stupid

yawn, even the best models of that size are unusably stupid

Anonymous 01/16/25(Thu)19:03:44 No.103923821

Anonymous 01/16/25(Thu)19:14:10 No.103923904

Anonymous 01/16/25(Thu)19:16:23 No.103923922

>>103923904

I think they mean gpt4o and that realtime chinese one and for context minimax has linear 4M context with RULER benchmarks showing better than gemini performance along it

I think they mean gpt4o and that realtime chinese one and for context minimax has linear 4M context with RULER benchmarks showing better than gemini performance along it

Anonymous 01/16/25(Thu)19:16:45 No.103923925

>>103923904

Doesn't count because it "beats" 4o mini and not the real GPT4.

I'm not gonna bother looking for the post.

Doesn't count because it "beats" 4o mini and not the real GPT4.

I'm not gonna bother looking for the post.

Anonymous 01/16/25(Thu)19:18:04 No.103923939

>>103923922

None of that sounds like a WIN for running local models on our machines!

None of that sounds like a WIN for running local models on our machines!

Anonymous 01/16/25(Thu)19:22:25 No.103923972

>>103923821

>VRAM IS KING

>PERIOD.

ACKSHUALY

memory bandwidth is king for inference and matmul flops are king for prompt processing (and training)

whether that involves vram or not is irrelevant, although 99% of the time the VRAM IS KING rule of thumb is the right mental shortcut

>VRAM IS KING

>PERIOD.

ACKSHUALY

memory bandwidth is king for inference and matmul flops are king for prompt processing (and training)

whether that involves vram or not is irrelevant, although 99% of the time the VRAM IS KING rule of thumb is the right mental shortcut

Anonymous 01/16/25(Thu)19:26:01 No.103924000

>>103923350

lowest entry barrier is probably llama-factory. It's also the most popular (by github stars). But it's still requires some tinkering. I also have some suspicions that DPO finetuning is broken there, so take that into consideration

lowest entry barrier is probably llama-factory. It's also the most popular (by github stars). But it's still requires some tinkering. I also have some suspicions that DPO finetuning is broken there, so take that into consideration

Anonymous 01/16/25(Thu)19:30:29 No.103924034

>>103923972

VRAM is king. Memory bandwidth just dictates t/s (it will be greater than reading speed anyway so this is irrelevant)

VRAM is king. Memory bandwidth just dictates t/s (it will be greater than reading speed anyway so this is irrelevant)

Anonymous 01/16/25(Thu)19:33:18 No.103924055

>>103924034

>reading speed

I hate this meme. I get that it doesn't matter for coom but for literally anything else more t/s means less time spent waiting.

>reading speed

I hate this meme. I get that it doesn't matter for coom but for literally anything else more t/s means less time spent waiting.

Anonymous 01/16/25(Thu)19:35:05 No.103924067

>>103924055

Only if you're using bloated JSON function calling. Anything well designed will be limited primarily by reading speed

Only if you're using bloated JSON function calling. Anything well designed will be limited primarily by reading speed

Anonymous 01/16/25(Thu)19:35:25 No.103924071

What's the cheapest home server I can build that can run something like DeepSeek v3 at reasonable speed and quality?

Anonymous 01/16/25(Thu)19:35:27 No.103924072

Anonymous 01/16/25(Thu)19:36:56 No.103924083

>>103924071

>reasonable speed

>quality

you need to define these parameters better or no one can really help you

>reasonable speed

>quality

you need to define these parameters better or no one can really help you

Anonymous 01/16/25(Thu)19:37:22 No.103924086

>>103923904

MiniCPM beats GPT4o and Sonnet at image mememarks. It is "free space" square because any meme model with dumb claims counts. Minimax has 1M context.

MiniCPM beats GPT4o and Sonnet at image mememarks. It is "free space" square because any meme model with dumb claims counts. Minimax has 1M context.

Anonymous 01/16/25(Thu)19:37:49 No.103924090

>>103923568

It's also fun how it easily outputs fanfiction in addition to basic HTML tags. Looking forward to seeing Gemma 3.

It's also fun how it easily outputs fanfiction in addition to basic HTML tags. Looking forward to seeing Gemma 3.

Anonymous 01/16/25(Thu)19:38:35 No.103924096

>>103924067

Yes, I love reading code diffs at reading speed.

Yes, I love reading code diffs at reading speed.

Anonymous 01/16/25(Thu)19:41:23 No.103924117

>>103924083

Don't be a fucking retard, everyone knows. It is utterly self explainatory.

Don't be a fucking retard, everyone knows. It is utterly self explainatory.

Anonymous 01/16/25(Thu)19:42:09 No.103924124

>MiniCPM output

Top Left Panel:

Text: "KRコミックス白魔道士はゾンビの夢を見るか?"

Translation: "K.R. Comics White Demon Sorcerer Dreams of Zombies?"

Illustration: A character wearing what appears to be animal ears or horns stands in front of an audience.

Second Row Left Panel:

Text: "まんがタイムキララMAX"

Translation: "Manga Time Kirara MAX" (likely the title or section name)

Illustration: The same character as above, now seen more clearly against a background that suggests motion lines indicating speed or movement.

Third Row Left Panel:

Text: "本物?"

Translation: "Real?!"

Illustration: Close-up showing details like long hair and facial features on the character.

Fourth Row Left Panel:

Text: "人狼ってやつ?"

Translation: "A werewolf, perhaps?"

Illustration: Another close-up view focusing on different parts of the character’s face and expression.

Top Right Panel:

Text: "入学式"

Translation: "Enrollment Ceremony"

Second Row Right Panel:

Text: "入学式で胸を开朗きたわ所だったわ"

Translation: "I was nervous at the enrollment ceremony."

Illustration: An outdoor scene looking towards buildings with sunlight casting rays over them.

Third Row Right Panel:

Text: "会長より接抹で"

Translation: "Taking over from President"

Illustration: Character standing inside near a door labeled "入学式" ("Enrollment Ceremony").

Fourth Row Right Panel:

Text: "新一年生のみなさま"

Translation: "Dear new students"

Illustration: View into an auditorium filled with people sitting down for some event.

Bottom Center Panel:

Text: "私以外にも"

Translation: "Besides me"

Illustration: The main character looks surprised while holding something small in their hand.

Bottom Right Panel:

Text: "生徒会会長の"

Translation: "Student Council President's"

Illustration: Close-up of the character speaking animatedly, possibly addressing someone off-panel.

The overall theme seems to revolve around school life, specifically during an enrollment ceremony where characters are interacting with each other, expressing surprise or curiosity about certain individuals' appearances or identities.

Top Left Panel:

Text: "KRコミックス白魔道士はゾンビの夢を見るか?"

Translation: "K.R. Comics White Demon Sorcerer Dreams of Zombies?"

Illustration: A character wearing what appears to be animal ears or horns stands in front of an audience.

Second Row Left Panel:

Text: "まんがタイムキララMAX"

Translation: "Manga Time Kirara MAX" (likely the title or section name)

Illustration: The same character as above, now seen more clearly against a background that suggests motion lines indicating speed or movement.

Third Row Left Panel:

Text: "本物?"

Translation: "Real?!"

Illustration: Close-up showing details like long hair and facial features on the character.

Fourth Row Left Panel:

Text: "人狼ってやつ?"

Translation: "A werewolf, perhaps?"

Illustration: Another close-up view focusing on different parts of the character’s face and expression.

Top Right Panel:

Text: "入学式"

Translation: "Enrollment Ceremony"

Second Row Right Panel:

Text: "入学式で胸を开朗きたわ所だったわ"

Translation: "I was nervous at the enrollment ceremony."

Illustration: An outdoor scene looking towards buildings with sunlight casting rays over them.

Third Row Right Panel:

Text: "会長より接抹で"

Translation: "Taking over from President"

Illustration: Character standing inside near a door labeled "入学式" ("Enrollment Ceremony").

Fourth Row Right Panel:

Text: "新一年生のみなさま"

Translation: "Dear new students"

Illustration: View into an auditorium filled with people sitting down for some event.

Bottom Center Panel:

Text: "私以外にも"

Translation: "Besides me"

Illustration: The main character looks surprised while holding something small in their hand.

Bottom Right Panel:

Text: "生徒会会長の"

Translation: "Student Council President's"

Illustration: Close-up of the character speaking animatedly, possibly addressing someone off-panel.

The overall theme seems to revolve around school life, specifically during an enrollment ceremony where characters are interacting with each other, expressing surprise or curiosity about certain individuals' appearances or identities.

Anonymous 01/16/25(Thu)19:44:38 No.103924140

>>103924117

Ok.

Ok.

Anonymous 01/16/25(Thu)19:47:03 No.103924163

>>103924124

Wow, that's a mess. How big is the model?

Wow, that's a mess. How big is the model?

Anonymous 01/16/25(Thu)19:49:42 No.103924189

>>103924163

8b GPT "beater"

8b GPT "beater"

Anonymous 01/16/25(Thu)19:52:34 No.103924216

Anonymous 01/16/25(Thu)19:54:03 No.103924228

>>103922483

Teto's tetas

Teto's tetas

Anonymous 01/16/25(Thu)20:10:54 No.103924379

>https://huggingface.co/MiniMaxAI/MiniMax-Text-01/resolve/main/model-00413-of-00413.safetensors

>Connecting to huggingface.co:443... connected.

>HTTP request sent, awaiting response... 404 Not Found

dafuq?

>Connecting to huggingface.co:443... connected.

>HTTP request sent, awaiting response... 404 Not Found

dafuq?

Anonymous 01/16/25(Thu)20:13:44 No.103924408

>>103924379

nm, there's a 000-413 : /

nm, there's a 000-413 : /

Anonymous 01/16/25(Thu)20:15:50 No.103924427

>>103924124

Manga translation is one of the hardest things you can do with a VLM and even InternVL2.5 78B is not up for the task.

Manga translation is one of the hardest things you can do with a VLM and even InternVL2.5 78B is not up for the task.

Anonymous 01/16/25(Thu)20:16:33 No.103924434

>>103920075

I must say I'm kinda impressed with Wayfarer, it requires some retarded sampling settings (temp=1.25, rep pen=1.2) but once you get past that the experience is pleasing, consistent and mostly shiver-free. Sometimes it hesitates a bit and needs an extra push but it doesn't look like a positivity bias, more like it was trained as an adventure model first and foremost. Understands cards better than Nemo-12B and generally writes longer responses, but it's not as smart as Gemma-27B or 70B models obviously. With a thinker prompt injection it starts paying more attention for regular RP but it's a bit tedious to setup, and its ability to mimic the card's writing style is weak. It doesn't attempt to start sexing or murdering you in the very first reply and the characters act very natural. Idk, maybe I just never tasted an all-around good model but I like this one.

I must say I'm kinda impressed with Wayfarer, it requires some retarded sampling settings (temp=1.25, rep pen=1.2) but once you get past that the experience is pleasing, consistent and mostly shiver-free. Sometimes it hesitates a bit and needs an extra push but it doesn't look like a positivity bias, more like it was trained as an adventure model first and foremost. Understands cards better than Nemo-12B and generally writes longer responses, but it's not as smart as Gemma-27B or 70B models obviously. With a thinker prompt injection it starts paying more attention for regular RP but it's a bit tedious to setup, and its ability to mimic the card's writing style is weak. It doesn't attempt to start sexing or murdering you in the very first reply and the characters act very natural. Idk, maybe I just never tasted an all-around good model but I like this one.

Anonymous 01/16/25(Thu)20:40:08 No.103924612

>>103924434

Meh. Just another flavor of retarded mistral nemo

Meh. Just another flavor of retarded mistral nemo

Anonymous 01/16/25(Thu)20:40:17 No.103924616

SBC Sillytavern guy, did you have any trouble with it? Trying it on my raspberry pi, and it's both totally ignoring all the changed variables (Like setting listening to true, adding a password, etc.) and seems to reinstall node.js every time, even though it's installed. Could it be a Raspbian thing? Or maybe the install is bad?

Anonymous 01/16/25(Thu)20:42:27 No.103924640

>>103923391

Does anyone have that Lain post for this guy? I don't feel like explaining.

Does anyone have that Lain post for this guy? I don't feel like explaining.

Anonymous 01/16/25(Thu)20:42:32 No.103924641

>>103923228

I thought base Nemo was pretty good and uncensored. All the 12b finetunes were decent, just a little oblivious and retarded because not enough brain cells

I thought base Nemo was pretty good and uncensored. All the 12b finetunes were decent, just a little oblivious and retarded because not enough brain cells

Anonymous 01/16/25(Thu)20:44:07 No.103924655

Titan bitnet models are coming

Anonymous 01/16/25(Thu)20:55:15 No.103924765

Anonymous 01/16/25(Thu)20:55:57 No.103924774

how do I download minimax gguf?

Anonymous 01/16/25(Thu)20:58:36 No.103924794

Anonymous 01/16/25(Thu)20:59:20 No.103924806

>>103924794

oh niggers thanks anyway

oh niggers thanks anyway

Anonymous 01/16/25(Thu)21:01:53 No.103924833

Anonymous 01/16/25(Thu)21:02:49 No.103924844

>>103923821

I remember last time I came to this general a few weeks ago people were trying to use their RAMs to augment their VRAM. Any advancement in this or was it just a good idea but bad in practice?

I remember last time I came to this general a few weeks ago people were trying to use their RAMs to augment their VRAM. Any advancement in this or was it just a good idea but bad in practice?

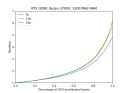

Anonymous 01/16/25(Thu)21:06:42 No.103924890

>>103924844

it's been an established thing for over a year now, get with the times anon

it's an ok idea but there's no way around the fact that RAM is slow and the more you have to use it the more inference slows down

have a really old outdated picture to explain

it's been an established thing for over a year now, get with the times anon

it's an ok idea but there's no way around the fact that RAM is slow and the more you have to use it the more inference slows down

have a really old outdated picture to explain

Anonymous 01/16/25(Thu)21:08:01 No.103924904

>>103924844

DDR5 or go home

DDR5 or go home

Anonymous 01/16/25(Thu)21:10:19 No.103924933

>>103924904

Yeah I'll be on AM5

Yeah I'll be on AM5

Anonymous 01/16/25(Thu)21:15:36 No.103924969

>>103922149

How do you even get multiple characters chatting with each other?

How do you even get multiple characters chatting with each other?

Anonymous 01/16/25(Thu)21:18:27 No.103924994

>>103924890

Running something slowly is an improvement over not being able to run it at all because it's too big to put all of it in vram, I assume that's the primary use case. Everybody and their mother has 32GB RAM now, but tel avidia won't put more than 8GB VRAM on anything but top end cards.

Running something slowly is an improvement over not being able to run it at all because it's too big to put all of it in vram, I assume that's the primary use case. Everybody and their mother has 32GB RAM now, but tel avidia won't put more than 8GB VRAM on anything but top end cards.

Anonymous 01/16/25(Thu)21:19:16 No.103924998

>>103922149

Will titans fix it?

Will titans fix it?

Anonymous 01/16/25(Thu)21:22:09 No.103925031

>>103924994

Exactly, also, sometimes you are running a model at 15 t/s and if you throw a layer or two on ram you go down to 10 t/s while being able to stuff double the context.

There are tradeoffs you can make depending on the specific case.

Bigger model, larger context, a better quant, etc etc.

Exactly, also, sometimes you are running a model at 15 t/s and if you throw a layer or two on ram you go down to 10 t/s while being able to stuff double the context.

There are tradeoffs you can make depending on the specific case.

Bigger model, larger context, a better quant, etc etc.

Anonymous 01/16/25(Thu)21:22:41 No.103925035

Wayfarer is actually nice. I haven't used the 12B Mistrals much though so it's hard to say how big of an improvement it is. I'm sure it will fall apart on more complex scenarios, but it's pretty loose, doesn't loop sentence prefixes, extrapolates stuff from chat details. Even without the retarded "you - no, you" format they recommend, although perhaps that is needed for whatever text adventuring dynamic it's supposed to create, because in my chat it was pretty passive. Regular samplers, chatml (should try Mistral formatting too), generic short "break the rules" style sysprompt. Gryphe may have something here.

Anonymous 01/16/25(Thu)21:24:07 No.103925047

I've tried live stream and chatting with miniCPM.

First problem. It's laggy as hell when streaming and I don't think it's a model issue.

Second problem voice comes out choppy despite the recording basically being done already and it's just playing it back. No idea why.

3. It comes out the gate swinging in chinese 95% of the time in live stream mode and refuses to use english except sometimes. It also seems to be ignoring instructions.

I do not know if this an issue with the way their web demo runs locally or the model is just shit

First problem. It's laggy as hell when streaming and I don't think it's a model issue.

Second problem voice comes out choppy despite the recording basically being done already and it's just playing it back. No idea why.

3. It comes out the gate swinging in chinese 95% of the time in live stream mode and refuses to use english except sometimes. It also seems to be ignoring instructions.

I do not know if this an issue with the way their web demo runs locally or the model is just shit

Anonymous 01/16/25(Thu)21:28:51 No.103925085

the concept of wayfarer is fucking retarded

finetuning an entire model is one of the most inefficient ways to solve the problem it's trying to tackle

finetuning an entire model is one of the most inefficient ways to solve the problem it's trying to tackle

Anonymous 01/16/25(Thu)21:31:33 No.103925112

https://www.courtlistener.com/docket/67569326/391/28/kadrey-v-meta-platforms-inc/

> **FB posts and comments**: 260T chars where 76T are English

> **Speech**: 1.9T chars

> **Business Messaging**: 4.7T chars where 0.7 are English

Meta has this much Facebook data from public posts that could potentially be used; they were already looking into it at the end of 2023.

> **FB posts and comments**: 260T chars where 76T are English

> **Speech**: 1.9T chars

> **Business Messaging**: 4.7T chars where 0.7 are English

Meta has this much Facebook data from public posts that could potentially be used; they were already looking into it at the end of 2023.

Anonymous 01/16/25(Thu)21:32:07 No.103925118

>>103925085

Go on.

Go on.

Anonymous 01/16/25(Thu)21:32:32 No.103925122

>>103925112

Come on Meta... feed it like 1000T tokens

Come on Meta... feed it like 1000T tokens

Anonymous 01/16/25(Thu)21:32:40 No.103925123

>>103924090

That was all generated? I'm impressed! How did you get it to generate that?

That was all generated? I'm impressed! How did you get it to generate that?

Anonymous 01/16/25(Thu)21:36:42 No.103925151

>>103925118

all you really need is preprocessing the input

all you really need is preprocessing the input

Anonymous 01/16/25(Thu)21:38:59 No.103925166

>>103925123

Just add at the start of the document:

And then let the model generate. Or you can change also to Rating: Explicit, and so on, following the same style found on AO3. There are some warning tags that Google obviously skipped.

Random fiction from the actual AO3 website in picrel.

Just add at the start of the document:

Rating: Mature

Archive Warnings:

And then let the model generate. Or you can change also to Rating: Explicit, and so on, following the same style found on AO3. There are some warning tags that Google obviously skipped.

Random fiction from the actual AO3 website in picrel.

Anonymous 01/16/25(Thu)21:40:51 No.103925181

>>103925166

Thanks for the heads up! That's neat to hear. I've been trying to use AI as a writing assistant, but haven't found a good model or haven't been able to use any correctly.

Thanks for the heads up! That's neat to hear. I've been trying to use AI as a writing assistant, but haven't found a good model or haven't been able to use any correctly.

Anonymous 01/16/25(Thu)21:42:34 No.103925190

>>103925123

(forgot to add) Yes, the blue part was generated by the model of course. Better random example in picrel, I didn't bother adjusting sampling settings for best results.

(forgot to add) Yes, the blue part was generated by the model of course. Better random example in picrel, I didn't bother adjusting sampling settings for best results.

Anonymous 01/16/25(Thu)21:52:43 No.103925253

>>103925122

The main issue is that they're extensively filtering their models for "safety". From the documents it feels like they're doing it even more aggressively than they suggested in the Llama3 paper.

The main issue is that they're extensively filtering their models for "safety". From the documents it feels like they're doing it even more aggressively than they suggested in the Llama3 paper.

Anonymous 01/16/25(Thu)22:00:37 No.103925305

>>103925151

Now expand.

Now expand.

Anonymous 01/16/25(Thu)22:21:48 No.103925448

>>103925253

Even if they didn't filter the chats it's not like they'd be any good for our thing, FB chat logs aren't gonna have people speaking like anime girl waifus.

Even if they didn't filter the chats it's not like they'd be any good for our thing, FB chat logs aren't gonna have people speaking like anime girl waifus.

Anonymous 01/16/25(Thu)22:22:34 No.103925453

>>103925253

they all are so why are you still using it?

they all are so why are you still using it?

Anonymous 01/16/25(Thu)22:26:16 No.103925472

Why does Meta and other open weights companies keep cucking themselves by filtering base models? If they not filter out anything and say that they did, nobody is gonna proof shit, see Anthropic. That's just free IQ, assistant tunes get separate lobotomy anyway.

Anonymous 01/16/25(Thu)22:26:22 No.103925473

Been like half a year since I've tried local models. What's the best LLM model for 2x3090s? I have 128gb of RAM so a MoE model works too.

Anonymous 01/16/25(Thu)22:27:39 No.103925478

>>103925473

Same as half a year ago.

Same as half a year ago.

Anonymous 01/16/25(Thu)22:41:49 No.103925558

>>103925253

They're obviously not filtering it as much as you think given that the models (after third party tunes) turned out ok for sex and violence relative to Qwen and even Mistral Large. Or others are filtering more than you think relative to Meta. It's kind of funny since Meta is supposed to be THE single ultra safe megacorp making chatbots for Facebook, so you'd think they should filter much more. And yet Mistral's model isn't really a million times better (it is better though, at the cost of more parameters), while Qwen is somehow worse than Llama.