/lmg/ - Local Models General

Anonymous 01/11/25(Sat)16:03:20 | 379 comments | 31 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103848716 & >>103838544

►News

>(01/08) Phi-4 weights released: https://hf.co/microsoft/phi-4

>(01/06) NVIDIA Project DIGITS announced, capable of running 200B models: https://nvidianews.nvidia.com/news/nvidia-puts-grace-blackwell-on-every-desk-and-at-every-ai-developers-fingertips

>(01/06) Nvidia releases Cosmos world foundation models: https://github.com/NVIDIA/Cosmos

>(01/04) DeepSeek V3 support merged: https://github.com/ggerganov/llama.cpp/pull/11049

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Previous threads: >>103848716 & >>103838544

►News

>(01/08) Phi-4 weights released: https://hf.co/microsoft/phi-4

>(01/06) NVIDIA Project DIGITS announced, capable of running 200B models: https://nvidianews.nvidia.com/news/

>(01/06) Nvidia releases Cosmos world foundation models: https://github.com/NVIDIA/Cosmos

>(01/04) DeepSeek V3 support merged: https://github.com/ggerganov/llama.

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWeb

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning

https://rentry.org/llm-training

https://rentry.org/LocalModelsPaper

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/lea

Code Editing: https://aider.chat/docs/leaderboard

Context Length: https://github.com/hsiehjackson/RUL

Japanese: https://hf.co/datasets/lmg-anon/vnt

Censorbench: https://codeberg.org/jts2323/censor

GPUs: https://github.com/XiongjieDai/GPU-

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngl

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-M

Sampler Visualizer: https://artefact2.github.io/llm-sam

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-g

https://github.com/LostRuins/kobold

https://github.com/ggerganov/llama.

https://github.com/theroyallab/tabb

https://github.com/vllm-project/vll

Anonymous 01/11/25(Sat)16:03:40 No.103856605

►Recent Highlights from the Previous Thread: >>103848716

--Paper (old): Phi-4 Technical Report:

>103854586 >103855228 >103855816 >103854691 >103854739 >103854958 >103855083 >103854698 >103854710 >103854794 >103854884 >103855161 >103855184 >103855268

--Anon questions the value of fine-tuning LLMs for knowledge retrieval:

>103851689 >103851720 >103851734 >103851771 >103851733 >103851791 >103851898 >103851938 >103851956 >103851971 >103852744

--Anons discuss the usability of a model calculator for selecting AI models based on VRAM:

>103850716 >103850731 >103850779 >103850903 >103851107 >103851496 >103851958 >103850838

--Comparing coding models, including 3.3 instruct 70b and 3.1 nemotron 70b, for coding tasks:

>103851055 >103851079 >103851087 >103851089 >103851161 >103851220 >103851660

--EU regulations pose challenges for AI model development and deployment:

>103850426

--Anons discuss and evaluate the NovaSky-AI/Sky-T1-32B-Preview model:

>103850933 >103850957 >103850974 >103851011 >103851033 >103851632

--Anon suggests using DeepSeek for context analysis and Nemo for prose generation:

>103853305 >103853358 >103853433 >103853558 >103853597

--Anon discusses using local models to flag off-topic posts:

>103851594 >103851634 >103853264 >103851668

--Limitations and unpredictability of merging LLMs:

>103850797 >103850834 >103850904 >103851202

--Quantization and optimization issues with llama.cpp and tensor parallel:

>103849397 >103849502 >103849516

--Anons discuss the impact of EU regulations on AI model accessibility:

>103849886 >103849915 >103849924 >103849941 >103850137 >103850084 >103850230 >103850272 >103850291 >103850418 >103850116 >103850154 >103853175

--Skepticism over Qwen2.5 7B's reported performance:

>103850605 >103850812 >103850860

--Doubts about DIGITS' cost-effectiveness and usability:

>103850984

--Miku (free space):

>103849507

►Recent Highlight Posts from the Previous Thread: >>103848719

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

--Paper (old): Phi-4 Technical Report:

>103854586 >103855228 >103855816 >103854691 >103854739 >103854958 >103855083 >103854698 >103854710 >103854794 >103854884 >103855161 >103855184 >103855268

--Anon questions the value of fine-tuning LLMs for knowledge retrieval:

>103851689 >103851720 >103851734 >103851771 >103851733 >103851791 >103851898 >103851938 >103851956 >103851971 >103852744

--Anons discuss the usability of a model calculator for selecting AI models based on VRAM:

>103850716 >103850731 >103850779 >103850903 >103851107 >103851496 >103851958 >103850838

--Comparing coding models, including 3.3 instruct 70b and 3.1 nemotron 70b, for coding tasks:

>103851055 >103851079 >103851087 >103851089 >103851161 >103851220 >103851660

--EU regulations pose challenges for AI model development and deployment:

>103850426

--Anons discuss and evaluate the NovaSky-AI/Sky-T1-32B-Preview model:

>103850933 >103850957 >103850974 >103851011 >103851033 >103851632

--Anon suggests using DeepSeek for context analysis and Nemo for prose generation:

>103853305 >103853358 >103853433 >103853558 >103853597

--Anon discusses using local models to flag off-topic posts:

>103851594 >103851634 >103853264 >103851668

--Limitations and unpredictability of merging LLMs:

>103850797 >103850834 >103850904 >103851202

--Quantization and optimization issues with llama.cpp and tensor parallel:

>103849397 >103849502 >103849516

--Anons discuss the impact of EU regulations on AI model accessibility:

>103849886 >103849915 >103849924 >103849941 >103850137 >103850084 >103850230 >103850272 >103850291 >103850418 >103850116 >103850154 >103853175

--Skepticism over Qwen2.5 7B's reported performance:

>103850605 >103850812 >103850860

--Doubts about DIGITS' cost-effectiveness and usability:

>103850984

--Miku (free space):

>103849507

►Recent Highlight Posts from the Previous Thread: >>103848719

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/11/25(Sat)16:06:57 No.103856645

Reposting because new thread >>103856509

Am I insane?

My motherboard has two pcie x16 slots. They run at x8 if both are used. But it also has an m2 ssd slot that can steal 4 lanes from the second slot so the resulting configuration is x8/x4/x4.

Can I use 3 gpus with pic related?

Am I insane?

My motherboard has two pcie x16 slots. They run at x8 if both are used. But it also has an m2 ssd slot that can steal 4 lanes from the second slot so the resulting configuration is x8/x4/x4.

Can I use 3 gpus with pic related?

Anonymous 01/11/25(Sat)16:09:54 No.103856678

Hi anons, I just woke up from a 2 years coma, is Pygmalion still collecting logs? Are they still matrixfags or did they transition to discord?

https://rentry.org/chatlog-dumping

https://rentry.org/chatlog-dumping

Anonymous 01/11/25(Sat)16:11:11 No.103856696

>>103856645

You can. Models will load slower but run fine. The only downside is you won't be able to use the tensor split mode row option for speed boost.

You can. Models will load slower but run fine. The only downside is you won't be able to use the tensor split mode row option for speed boost.

Anonymous 01/11/25(Sat)16:15:18 No.103856753

Serious question

I heard people saying that MoE models can be run on CPU at decent speed.

Now, I got 256 Gb of DDR4 (1866) and 2x Intel Xeon.

What (unquantized) model I could try for fun? I'm eyeing DS3 in the future when I'll get 1 Tb complete

I heard people saying that MoE models can be run on CPU at decent speed.

Now, I got 256 Gb of DDR4 (1866) and 2x Intel Xeon.

What (unquantized) model I could try for fun? I'm eyeing DS3 in the future when I'll get 1 Tb complete

Anonymous 01/11/25(Sat)16:17:27 No.103856780

>>103856696

>you won't be able to use the tensor split mode row option

Why is that? I'm struggling to find proper documentation for that option.

>you won't be able to use the tensor split mode row option

Why is that? I'm struggling to find proper documentation for that option.

Anonymous 01/11/25(Sat)16:19:46 No.103856811

So hows everyone evaluating models? I was just doing some gpu layer offload tests, changing no other settings, and was shocked at how different the outputs were which I think is just random variance, which made me question the significance of all the other settings I had messed with like temp in the past.

Anonymous 01/11/25(Sat)16:21:13 No.103856829

I just had a pretty good RP session and in the middle of it I realized that the system prompt was blank from some tinkering from before.

No instructions at all and it was strangely... unique and fresh writing. Anyone else tried this?

No instructions at all and it was strangely... unique and fresh writing. Anyone else tried this?

Anonymous 01/11/25(Sat)16:21:13 No.103856830

I’m having a rough time getting llama3.2 to use tools with ChatOllama on langchain. Have any of you gotten it to work? I don’t want to use openAI since I do want to have my agents run locally

Anonymous 01/11/25(Sat)16:27:10 No.103856914

>>103856780

By default, llama.cpp will process the data on each GPU independently, so once the data is loaded, there is not much communication between devices. With split mode row, they get processed in parallel and need to communicate frequently.

By default, llama.cpp will process the data on each GPU independently, so once the data is loaded, there is not much communication between devices. With split mode row, they get processed in parallel and need to communicate frequently.

Anonymous 01/11/25(Sat)16:29:49 No.103856936

>>103856753

Active params are what matters then. Try sorcerer 8x 22B

Active params are what matters then. Try sorcerer 8x 22B

Anonymous 01/11/25(Sat)16:33:13 No.103856968

damn, QRWKV6 is laughably bad. Why did they even bother to release it and even go as far as say it's as good as Qwen2.5 32B?

Anonymous 01/11/25(Sat)16:35:41 No.103856988

>>103856678

Anon, why would you ask this here instead of aicg

Anon, why would you ask this here instead of aicg

Anonymous 01/11/25(Sat)16:37:43 No.103857006

>>103856753

I think Deepseek V2.5 is what you're looking for.

I think Deepseek V2.5 is what you're looking for.

Anonymous 01/11/25(Sat)16:38:29 No.103857013

>>103856753

DS3 will run with 512GB (Q4_K_M). Embrace the quant.

DS3 will run with 512GB (Q4_K_M). Embrace the quant.

Anonymous 01/11/25(Sat)16:46:32 No.103857106

>>103856968

RWKV has always been the most meme useless shit imaginable.

RWKV has always been the most meme useless shit imaginable.

Anonymous 01/11/25(Sat)16:51:47 No.103857156

>>103857013

>DS3 will run with 512GB (Q4_K_M)

Will the unquantized model ever fit into 1Tb??

DS3 itself said that it would

>DS3 will run with 512GB (Q4_K_M)

Will the unquantized model ever fit into 1Tb??

DS3 itself said that it would

Anonymous 01/11/25(Sat)16:59:37 No.103857247

my most schizo belief is that the weights go bad if you leave them loaded for too long and you have to freshen them up regularly or your outputs won't be as good

Anonymous 01/11/25(Sat)17:01:57 No.103857272

>>103857247

not really that schizo, cache could corrupt for a variety of reasons, kcpp recently had a thing like that

>Fixed a bug that caused context corruption when aborting a generation while halfway processing a prompt

not really that schizo, cache could corrupt for a variety of reasons, kcpp recently had a thing like that

>Fixed a bug that caused context corruption when aborting a generation while halfway processing a prompt

Anonymous 01/11/25(Sat)17:03:26 No.103857285

huggingface slow af

Anonymous 01/11/25(Sat)17:08:55 No.103857341

>>103857272

LM Studio keeps fucking up where if I stop it then edit the output, if I continue then it keeps spitting out shit from before.

LM Studio keeps fucking up where if I stop it then edit the output, if I continue then it keeps spitting out shit from before.

Anonymous 01/11/25(Sat)17:16:31 No.103857402

>>103857247

That's because of rotational velocidensity, did you try connecting your gpu using SCSI instead of PCIe?

That's because of rotational velocidensity, did you try connecting your gpu using SCSI instead of PCIe?

Anonymous 01/11/25(Sat)17:22:41 No.103857457

>Grok can't write 4chan style replies while GPT4 can

Elon had really bought someone's work(likely cohere's) and had no say in what to finetune it on, just like that game account, didn't he?

Elon had really bought someone's work(likely cohere's) and had no say in what to finetune it on, just like that game account, didn't he?

Anonymous 01/11/25(Sat)17:23:57 No.103857472

>>103857272

How old is that bug? KCCP's frontend has been giving me garbled outputs for a long time and that sounds like the cause

How old is that bug? KCCP's frontend has been giving me garbled outputs for a long time and that sounds like the cause

Anonymous 01/11/25(Sat)17:33:01 No.103857544

>>103857457

didn't they train that shit on their dojo supercomputer?

didn't they train that shit on their dojo supercomputer?

Anonymous 01/11/25(Sat)17:35:22 No.103857569

I thought my finetune was shit because the outputs were too long but it turns out you can just shorten the wordcount limit on outputs, and that fixed it.

Anonymous 01/11/25(Sat)17:41:58 No.103857641

>>103857457

it's pretty funny considering the image elon is going for with X and grok that his model sounds more like an HR rep than anything else on the market

it's pretty funny considering the image elon is going for with X and grok that his model sounds more like an HR rep than anything else on the market

Anonymous 01/11/25(Sat)17:44:02 No.103857654

>>103857544

They may have given compute, but they didn't make the dataset.

They may have given compute, but they didn't make the dataset.

Anonymous 01/11/25(Sat)17:44:48 No.103857659

chub rangebanning china fucking WHEN I'm tired of all this untagged chinkslop

Anonymous 01/11/25(Sat)17:48:21 No.103857684

DS3 does not like wumen

Anonymous 01/11/25(Sat)18:00:26 No.103857775

24gb vramlet bros, what models are you running for RP? I'm still on Mistral Small Instruct and dying for a new model.

Anonymous 01/11/25(Sat)18:07:24 No.103857854

Changed a few things based on feedback from previous thread.

Anonymous 01/11/25(Sat)18:14:07 No.103857920

>>103857684

soul-free AI model

soul-free AI model

Anonymous 01/11/25(Sat)18:15:11 No.103857938

>>103857854

my bold prediction? bingo by the end of the month, diagonal from the bottom left to top right

my bold prediction? bingo by the end of the month, diagonal from the bottom left to top right

Anonymous 01/11/25(Sat)18:17:41 No.103857958

Anonymous 01/11/25(Sat)18:19:06 No.103857975

>>103857958

all the facebook posts they trained it on this time will make it completely different, trust the plan

all the facebook posts they trained it on this time will make it completely different, trust the plan

Anonymous 01/11/25(Sat)18:19:47 No.103857981

>>103857684

His girlfriend is just lucky that he isn't French. The French have a much different way of treating their princesses.

His girlfriend is just lucky that he isn't French. The French have a much different way of treating their princesses.

Anonymous 01/11/25(Sat)18:26:44 No.103858055

>>103855161

Can you repeat the run by starting with human data / OK data? I feel like your run was kind of unfair since you locked the model into getting penalized for not outputting slop first

Can you repeat the run by starting with human data / OK data? I feel like your run was kind of unfair since you locked the model into getting penalized for not outputting slop first

Anonymous 01/11/25(Sat)18:39:07 No.103858189

>>103858055

He is a retard who doesn't understand that OOD would get penalized even if it was the best human slop that his GPU had ever seen.

He is a retard who doesn't understand that OOD would get penalized even if it was the best human slop that his GPU had ever seen.

Anonymous 01/11/25(Sat)19:39:37 No.103858873

>>103858055

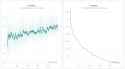

If you start with human data, train loss will be between around 1.8 to 2.3~2.5 depending on its "difficulty", similar to what you saw there. Picrel is a run with human data sorted by increasing difficulty (shuffling disabled again).

If you start with human data, train loss will be between around 1.8 to 2.3~2.5 depending on its "difficulty", similar to what you saw there. Picrel is a run with human data sorted by increasing difficulty (shuffling disabled again).

Anonymous 01/11/25(Sat)19:47:50 No.103858956

>>103858873

Here's another run with different data just with human samples sorted by sample length (random difficulty).

Here's another run with different data just with human samples sorted by sample length (random difficulty).

Anonymous 01/11/25(Sat)19:56:43 No.103859009

>>103856829

My syspromps are exclusively [Continue the story] but I've often thought about using totally blank ones. I find that the whole (You are an expert author/roleplayer...) etc generally do more harm than good. Back in the day models were much less overfit and things like the Agent 47 prompt actually made a difference. Now models are generally skewed towards overfitting because that gives good benchmark scores, but I think it also means that long sysprompts push it towards a pattern rather than breaking it out of one. I also think this is why community finetunes are usually a sidegrade at best these days, and when you put them against the original model there's no direct improvement, just imparting a different flavor and sometimes making it less predictable at the cost of some coherency. I don't know if the overfitting is inherently a bad thing, some overfit models work wonders with the temperature pushed a little, like mistral large.

My syspromps are exclusively [Continue the story] but I've often thought about using totally blank ones. I find that the whole (You are an expert author/roleplayer...) etc generally do more harm than good. Back in the day models were much less overfit and things like the Agent 47 prompt actually made a difference. Now models are generally skewed towards overfitting because that gives good benchmark scores, but I think it also means that long sysprompts push it towards a pattern rather than breaking it out of one. I also think this is why community finetunes are usually a sidegrade at best these days, and when you put them against the original model there's no direct improvement, just imparting a different flavor and sometimes making it less predictable at the cost of some coherency. I don't know if the overfitting is inherently a bad thing, some overfit models work wonders with the temperature pushed a little, like mistral large.

Anonymous 01/11/25(Sat)20:00:33 No.103859032

https://arxiv.org/abs/2501.05032

>This paper explores the advancements in making large language models (LLMs) more human-like. We focus on techniques that enhance natural language understanding, conversational coherence, and emotional intelligence in AI systems. The study evaluates various approaches, including fine-tuning with diverse datasets, incorporating psychological principles, and designing models that better mimic human reasoning patterns. Our findings demonstrate that these enhancements not only improve user interactions but also open new possibilities for AI applications across different domains. Future work will address the ethical implications and potential biases introduced by these human-like attributes.

Looks like partial unslopping is possible without huge loss(but not fully lossless). They even uploaded the dataset and weights:

https://huggingface.co/datasets/HumanLLMs/Human-Like-DPO-Dataset

https://huggingface.co/HumanLLMs/Human-Like-LLama3-8B-Instruct

Would be interesting to see what would happen if someone trained a bigger one and got it on lmsys.

>This paper explores the advancements in making large language models (LLMs) more human-like. We focus on techniques that enhance natural language understanding, conversational coherence, and emotional intelligence in AI systems. The study evaluates various approaches, including fine-tuning with diverse datasets, incorporating psychological principles, and designing models that better mimic human reasoning patterns. Our findings demonstrate that these enhancements not only improve user interactions but also open new possibilities for AI applications across different domains. Future work will address the ethical implications and potential biases introduced by these human-like attributes.

Looks like partial unslopping is possible without huge loss(but not fully lossless). They even uploaded the dataset and weights:

https://huggingface.co/datasets/Hum

https://huggingface.co/HumanLLMs/Hu

Would be interesting to see what would happen if someone trained a bigger one and got it on lmsys.

Anonymous 01/11/25(Sat)20:02:43 No.103859051

Instead of doing new models, Mistral is wasting their time with hackathons...

Anonymous 01/11/25(Sat)20:03:28 No.103859057

>>103859032

>https://huggingface.co/datasets/HumanLLMs/Human-Like-DPO-Dataset

... not promising

I mean at least it's not pure assistant slop, but this is emphatically NOT human data

>https://huggingface.co/datasets/Hu

... not promising

I mean at least it's not pure assistant slop, but this is emphatically NOT human data

Anonymous 01/11/25(Sat)20:05:14 No.103859068

Anonymous 01/11/25(Sat)20:05:25 No.103859069

>>103858956

Interesting, so the increasingly difficult approach yields better eval loss.

Interesting, so the increasingly difficult approach yields better eval loss.

Anonymous 01/11/25(Sat)20:07:55 No.103859083

>>103859032

>Man, that's a tough one! [Emoji] I'm all over the place when it comes to movies. I think I have a soft spot for sci-fi and adventure flicks, though. Give me some intergalactic battles, time travel, or a good ol' fashioned quest, and I'm hooked! [Emoji] But, you know, I'm also a sucker for a good rom-com. [...]

Wow that's so human, really captures the human feelings, amirite human bros?

>Man, that's a tough one! [Emoji] I'm all over the place when it comes to movies. I think I have a soft spot for sci-fi and adventure flicks, though. Give me some intergalactic battles, time travel, or a good ol' fashioned quest, and I'm hooked! [Emoji] But, you know, I'm also a sucker for a good rom-com. [...]

Wow that's so human, really captures the human feelings, amirite human bros?

Anonymous 01/11/25(Sat)20:08:36 No.103859087

>>103859069

Different data formatted in a different way, different eval samples; can't be really compared with each other.

Different data formatted in a different way, different eval samples; can't be really compared with each other.

Anonymous 01/11/25(Sat)20:10:36 No.103859097

>>103859068

You know, humans tend to repeat themselves a lot too.

You know, humans tend to repeat themselves a lot too.

Anonymous 01/11/25(Sat)20:12:19 No.103859110

>>103859032

You know, one third of the dataset starts with "You know"

You know, one third of the dataset starts with "You know"

Anonymous 01/11/25(Sat)20:18:07 No.103859166

>>103859083

Still more human than

>As a large language model, I don't have personal preferences like favorite movies. I don't have the ability to watch films or experience emotions like humans do. It's important to...

Still more human than

>As a large language model, I don't have personal preferences like favorite movies. I don't have the ability to watch films or experience emotions like humans do. It's important to...

Anonymous 01/11/25(Sat)20:20:06 No.103859191

>>103859110

That makes it quite useless. LLMs are bad at generating human data, what a surprise.

That makes it quite useless. LLMs are bad at generating human data, what a surprise.

Anonymous 01/11/25(Sat)20:23:17 No.103859225

So is this still used for roleplay or has it devolved into people with pocket protectors arguing about datasets and not actually using the tech?

Anonymous 01/11/25(Sat)20:23:21 No.103859226

>>103859166

and yet less human than even the average roleplay slop dataset

and yet less human than even the average roleplay slop dataset

Anonymous 01/11/25(Sat)20:27:26 No.103859258

>>103859225

>getting pissy about /lmg/ doing anything that isn't shitposting and spoonfeeding children what shitty model to run on their 3060

>getting pissy about /lmg/ doing anything that isn't shitposting and spoonfeeding children what shitty model to run on their 3060

Anonymous 01/11/25(Sat)20:32:23 No.103859294

Any advice on splitting up books without chapters in chapters for dataset? Should I just arbitrarily split up each 4k words?

Anonymous 01/11/25(Sat)20:33:25 No.103859306

>>103859294

if it doesn't have chapters then don't call them chapters

if it doesn't have chapters then don't call them chapters

Anonymous 01/11/25(Sat)20:33:42 No.103859308

>moved world stuff from the html to a new 'other' lorebook (import director_other)

>added mood

>fixed html (buttons mostly)

>added token counter to preview

>started some code cleanup

install to st\data\default-user\extensions. if you have an existing version it can't hurt to back it up somewhere incase i broke something i haven't noticed yet

>https://www.file.io/2sOO/download/847DoB6qZJN7

realized i broke the master on/off switch, its not supposed to cause the drawer to collapse. oh well, next version

>added mood

>fixed html (buttons mostly)

>added token counter to preview

>started some code cleanup

install to st\data\default-user\extensions. if you have an existing version it can't hurt to back it up somewhere incase i broke something i haven't noticed yet

>https://www.file.io/2sOO/download/

realized i broke the master on/off switch, its not supposed to cause the drawer to collapse. oh well, next version

Anonymous 01/11/25(Sat)20:35:06 No.103859316

>>103859308

Director anon! Nice!

Director anon! Nice!

Anonymous 01/11/25(Sat)20:35:27 No.103859318

>>103857544

Dojo is designed for self driving and computer vision tasks I think.

Dojo is designed for self driving and computer vision tasks I think.

Anonymous 01/11/25(Sat)20:36:13 No.103859323

>>103859306

Then what should I call them?

Then what should I call them?

Anonymous 01/11/25(Sat)20:36:54 No.103859333

Anonymous 01/11/25(Sat)20:38:59 No.103859351

Anonymous 01/11/25(Sat)20:39:17 No.103859355

>>103859323

segments

segments

Anonymous 01/11/25(Sat)20:41:00 No.103859372

>>103859351

weird, well heres another

>https://easyupload.io/24wrxm

>>103859333

eventually. i want to clean up the code more first, its pretty abysmal

weird, well heres another

>https://easyupload.io/24wrxm

>>103859333

eventually. i want to clean up the code more first, its pretty abysmal

Anonymous 01/11/25(Sat)20:41:38 No.103859376

>>103859372

Thanks

Thanks

Anonymous 01/11/25(Sat)20:54:11 No.103859523

Anonymous 01/11/25(Sat)21:03:52 No.103859641

I haven't checked this shit in ages. What's the current bot-making format?

Anonymous 01/11/25(Sat)21:06:53 No.103859671

Anonymous 01/11/25(Sat)21:11:21 No.103859711

>>103859671

Those guys use online models

Those guys use online models

Anonymous 01/11/25(Sat)21:11:55 No.103859720

>>103859641

Plain text>json>>everything else

Plain text>json>>everything else

Anonymous 01/11/25(Sat)21:14:14 No.103859744

>>103859641

plain text and no {{user}}

plain text and no {{user}}

Anonymous 01/11/25(Sat)21:28:18 No.103859864

>>103859641

I usually use a format like:

I usually use a format like:

<{{char}}>

{{char}} is [...]

[Anything else here in natural language]

</{{char}}>

Anonymous 01/11/25(Sat)21:29:27 No.103859873

I've got some experiment results

I did a 3 epoch tune of phi-3 mini using sexually explicit text to test if I could bypass the filters. My training loss balanced out ~2.0 and I got issues with the AI refusing any sexually explicit requests.

I did a second attempt using the same dataset, but with 5 epochs and a doubled r value. This time training loss balanced around 1.8 and I quit getting refusals.

Tomorrow I'm going to make a larger dataset and do another experiment with the doubled r value against a quadrupled r value, keeping everything else the same.

I did a 3 epoch tune of phi-3 mini using sexually explicit text to test if I could bypass the filters. My training loss balanced out ~2.0 and I got issues with the AI refusing any sexually explicit requests.

I did a second attempt using the same dataset, but with 5 epochs and a doubled r value. This time training loss balanced around 1.8 and I quit getting refusals.

Tomorrow I'm going to make a larger dataset and do another experiment with the doubled r value against a quadrupled r value, keeping everything else the same.

Anonymous 01/11/25(Sat)21:34:03 No.103859907

>>103859744

You mean you keep <|user|> the same but have the assistant in <|assistant|> replaced with the character name?

You mean you keep <|user|> the same but have the assistant in <|assistant|> replaced with the character name?

Anonymous 01/11/25(Sat)21:35:24 No.103859916

Anonymous 01/11/25(Sat)21:36:59 No.103859925

>>103859641

I do markdown with mostly plaintext sections but I'll do a list if the information is easier to understand that way

a lot of people do XML but imo that's only best for claude and markdown is better for most other models

I do markdown with mostly plaintext sections but I'll do a list if the information is easier to understand that way

a lot of people do XML but imo that's only best for claude and markdown is better for most other models

Anonymous 01/11/25(Sat)21:39:04 No.103859942

Anonymous 01/11/25(Sat)21:43:11 No.103859964

Anonymous 01/11/25(Sat)21:43:33 No.103859969

>>103859376

thoughts? working as intended? all the old settings should read their old settings with the exception of needing to add the other lorebook for world stuff

thoughts? working as intended? all the old settings should read their old settings with the exception of needing to add the other lorebook for world stuff

Anonymous 01/11/25(Sat)21:44:22 No.103859975

>>103859916

Can you explain then? Why would you not have {{user}} in the prompt? How does that benefit the generation?

Can you explain then? Why would you not have {{user}} in the prompt? How does that benefit the generation?

Anonymous 01/11/25(Sat)21:45:26 No.103859987

Anonymous 01/11/25(Sat)21:46:13 No.103859996

Anonymous 01/11/25(Sat)21:57:45 No.103860090

>>103857775

Why small over nemo? I can run both but rarely run small.

Why small over nemo? I can run both but rarely run small.

Anonymous 01/11/25(Sat)22:05:13 No.103860162

pee pee poo poo moment

Anonymous 01/11/25(Sat)22:07:28 No.103860176

>>103860162

That's some hyperrich meme shitposting.

That's some hyperrich meme shitposting.

Anonymous 01/11/25(Sat)22:14:21 No.103860217

>768 GB DDR5 ~2500

>Chinese 9334 epyc ~500

>12 Channel Gigabyte board 900~

I want to buy it all so bad.

>Chinese 9334 epyc ~500

>12 Channel Gigabyte board 900~

I want to buy it all so bad.

Anonymous 01/11/25(Sat)22:17:32 No.103860253

>>103860162

Are they afraid that trump will actually become the first American dictator or something?

Are they afraid that trump will actually become the first American dictator or something?

Anonymous 01/11/25(Sat)22:17:54 No.103860257

https://github.com/ggerganov/llama.cpp/discussions/10879

Interesting Vulkan benchmarks, though pp is shit as usual. Intel has the smallest pp ofc. Lots of unconventional hardware on the list too.

CUDA dev where's the fucking CUDA benchmark thread?

Interesting Vulkan benchmarks, though pp is shit as usual. Intel has the smallest pp ofc. Lots of unconventional hardware on the list too.

CUDA dev where's the fucking CUDA benchmark thread?

Anonymous 01/11/25(Sat)22:29:33 No.103860325

>>103860217

How much power do I need for that? I'm running out of plugs in my house (it's old).

How much power do I need for that? I'm running out of plugs in my house (it's old).

Anonymous 01/11/25(Sat)22:34:29 No.103860353

>>103860325

From what I've seen it only pulls 350w or so as a full system from others who have done something similar.

From what I've seen it only pulls 350w or so as a full system from others who have done something similar.

Anonymous 01/11/25(Sat)22:35:34 No.103860366

>>103860162

I don't know what they're all up to, but it's inorganic. There is some ulterior motive behind this widespread pivot, specifically how much they're trying to publicize it. Every day I'm starting to believe that screencap of an upcoming war more and more.

I don't know what they're all up to, but it's inorganic. There is some ulterior motive behind this widespread pivot, specifically how much they're trying to publicize it. Every day I'm starting to believe that screencap of an upcoming war more and more.

Anonymous 01/11/25(Sat)22:35:48 No.103860367

>>103860162

You are forgetting Boeing, which also gave 1mill to trump and biden.

You are forgetting Boeing, which also gave 1mill to trump and biden.

Anonymous 01/11/25(Sat)22:36:35 No.103860372

>>103860253

They were all screeching about how Trump was a fascist and a dictator the first time around. Now it's been mostly silence except for extreme leftists and echo chambers like reddit.

They were all screeching about how Trump was a fascist and a dictator the first time around. Now it's been mostly silence except for extreme leftists and echo chambers like reddit.

Anonymous 01/11/25(Sat)23:37:29 No.103860905

>>103857156

>Will the unquantized model ever fit into 1Tb??

It fits into 768GB if you limit yourself to 8k context. more than that and you start either swapping or having cache thrashing.

So, yes. It would fit into 1TB

>Will the unquantized model ever fit into 1Tb??

It fits into 768GB if you limit yourself to 8k context. more than that and you start either swapping or having cache thrashing.

So, yes. It would fit into 1TB

Anonymous 01/12/25(Sun)01:02:29 No.103861621

>>103856968

It's a proof of concept conversion,

The fact that it works at all is mind blowing.

There is no kv cache, no slowdown over time, the millionth token will be calculated in the same time as the 20th.

Be patient, rwkv superiority is soon

It's a proof of concept conversion,

The fact that it works at all is mind blowing.

There is no kv cache, no slowdown over time, the millionth token will be calculated in the same time as the 20th.

Be patient, rwkv superiority is soon

Anonymous 01/12/25(Sun)01:04:44 No.103861636

>>103860905

Why would you want unquantized? Or is DS just shit?

Why would you want unquantized? Or is DS just shit?

Anonymous 01/12/25(Sun)01:13:44 No.103861697

>>103860162

What am I looking at here?

What am I looking at here?

Anonymous 01/12/25(Sun)01:14:18 No.103861700

>>103860162

ok, but is trump pro open source ai or only pro xai?

ok, but is trump pro open source ai or only pro xai?

Anonymous 01/12/25(Sun)02:00:26 No.103861989

>>103861700

Trump is pro whatever he thinks is cool at the time.

Trump is pro whatever he thinks is cool at the time.

Anonymous 01/12/25(Sun)02:00:56 No.103861993

>>103861636

> Why would you want unquantized? Or is DS just shit?

All other things being equal, why wouldn’t you want it unquantized?

> Why would you want unquantized? Or is DS just shit?

All other things being equal, why wouldn’t you want it unquantized?

Anonymous 01/12/25(Sun)02:03:08 No.103862005

>>103861993

because I don't have 768GB of RAM????

because I don't have 768GB of RAM????

Anonymous 01/12/25(Sun)02:04:44 No.103862016

FrontierMath still hasn't updated benchmark results that show that o3 solved 25% of their math problems.

Did openAI cheat or did they simply fake the results?

Did openAI cheat or did they simply fake the results?

Anonymous 01/12/25(Sun)02:15:05 No.103862074

>>103861621

lolcats was first and nothing came out of that either

lolcats was first and nothing came out of that either

Anonymous 01/12/25(Sun)02:20:32 No.103862115

so jealous of backup chads

poorfag looking for strategies for sad poor poorman

poorfag looking for strategies for sad poor poorman

Anonymous 01/12/25(Sun)02:21:28 No.103862126

>>103860217

Did you by chance find any 6400 board? There only seem to be boards that can do 6000.

Did you by chance find any 6400 board? There only seem to be boards that can do 6000.

Anonymous 01/12/25(Sun)02:25:44 No.103862146

Cpukeks max your TREFI/DRAM Refresh Interval

Model: Llama-3.2-3B-Instruct-f16 Flags: NoAVX2=False Threads=24 HighPriority=False Cublas_Args=None Tensor_Split=None BlasThreads=24 BlasBatchSize=512 FlashAttention=False KvCache=0

65535 TREFI

ProcessingTime: 26.448s

ProcessingSpeed: 151.09T/s

32768 TREFI

ProcessingTime: 31.532s

ProcessingSpeed: 126.73T/s

Model: Llama-3.2-3B-Instruct-f16 Flags: NoAVX2=False Threads=24 HighPriority=False Cublas_Args=None Tensor_Split=None BlasThreads=24 BlasBatchSize=512 FlashAttention=False KvCache=0

65535 TREFI

ProcessingTime: 26.448s

ProcessingSpeed: 151.09T/s

32768 TREFI

ProcessingTime: 31.532s

ProcessingSpeed: 126.73T/s

Anonymous 01/12/25(Sun)02:26:47 No.103862149

>>103861993

Is the extra ram usage really worth it? You're talking about a big ram upgrade.

Is the extra ram usage really worth it? You're talking about a big ram upgrade.

Anonymous 01/12/25(Sun)02:29:32 No.103862160

>>103860162

What dates are there for the donations? I suspect some were motivated by the fact Trump got shot at, even though it wasn't treated as a terrible woe in the media, like it should have been (because le orange whatever). Seeing a cockup on that level is evidence that things need to change - they think to themselves "am I safe" and doubtless have had personal close calls.

What dates are there for the donations? I suspect some were motivated by the fact Trump got shot at, even though it wasn't treated as a terrible woe in the media, like it should have been (because le orange whatever). Seeing a cockup on that level is evidence that things need to change - they think to themselves "am I safe" and doubtless have had personal close calls.

Anonymous 01/12/25(Sun)02:38:05 No.103862205

>>103859925

Could you provide an example or show me a card, anon?

Could you provide an example or show me a card, anon?

Anonymous 01/12/25(Sun)02:39:24 No.103862212

>>103862005

So get more???? Must suck being poor.

So get more???? Must suck being poor.

Anonymous 01/12/25(Sun)02:51:58 No.103862272

What's the best model for 24gb vram that can run at decent speed? I just reinstalled everything and all I can remember is Cydonia Magnum 22b

I don't want to run lobotomized 70b because it sucks and is slow

for ERP, for talking to young maids that work for me

I don't want to run lobotomized 70b because it sucks and is slow

for ERP, for talking to young maids that work for me

Anonymous 01/12/25(Sun)02:55:11 No.103862289

>>103862272

If you liked that one it's pretty OK. I think the cydonia 1.2 magnum one is better than 1.3. It's better at portraying distinct personalities than nemo I find which would be more important if you have multiple characters.

If you liked that one it's pretty OK. I think the cydonia 1.2 magnum one is better than 1.3. It's better at portraying distinct personalities than nemo I find which would be more important if you have multiple characters.

Anonymous 01/12/25(Sun)03:03:12 No.103862320

>>103862126

I think only 9005 epycs will support that speed making it expensive to go that route.

I think only 9005 epycs will support that speed making it expensive to go that route.

Anonymous 01/12/25(Sun)03:45:34 No.103862580

>>103860090

I really like both, but I needed to take a break from Nemo's writing style. Plus, Mistral small is just a teeny bit smarter. Can only fit about 30k context in it though.

I really like both, but I needed to take a break from Nemo's writing style. Plus, Mistral small is just a teeny bit smarter. Can only fit about 30k context in it though.

Anonymous 01/12/25(Sun)03:49:02 No.103862601

>>103862580

Do you find it actually uses the 30k? If so that's another advantage over nemo.

Do you find it actually uses the 30k? If so that's another advantage over nemo.

Anonymous 01/12/25(Sun)04:04:58 No.103862685

https://pastebin.com/XxeNpzLS

Here's my kokoro tts api thats compatible with koboldcpp (openai).

>open ai compatible url

http://localhost:9999/v1/audio/speech

>voice

Choose your voice from one that comes with your kokoro tts.

Here's my kokoro tts api thats compatible with koboldcpp (openai).

>open ai compatible url

http://localhost:9999/v1/audio/spee

>voice

Choose your voice from one that comes with your kokoro tts.

Anonymous 01/12/25(Sun)04:07:33 No.103862701

>>103860253

Fascists are traditionally on the side of capital, so likely not.

They just know from Trump's first 4 years in office that he's very receptive to corruption so now that he's won the election they're rushing to get government handouts.

>>103861700

Depends on who gives him the biggest bribes I guess.

Fascists are traditionally on the side of capital, so likely not.

They just know from Trump's first 4 years in office that he's very receptive to corruption so now that he's won the election they're rushing to get government handouts.

>>103861700

Depends on who gives him the biggest bribes I guess.

Anonymous 01/12/25(Sun)04:08:27 No.103862707

>>103862685

Oh and run as "python api.py". Install the proper requirements. Uvicorn and fastapi is what I used I think on top of the default. But you should be able to figure out the requirements if you get errors.

Oh and run as "python api.py". Install the proper requirements. Uvicorn and fastapi is what I used I think on top of the default. But you should be able to figure out the requirements if you get errors.

llama.cpp CUDA dev !!OM2Fp6Fn93S 01/12/25(Sun)04:10:56 No.103862715

>>103860257

Too much work, I'd rather just improve the software.

Too much work, I'd rather just improve the software.

Noah !ZSjvHtAFy6 01/12/25(Sun)04:12:17 No.103862724

is there a model thats pretty close to janitorAI?

I have been using https://huggingface.co/VongolaChouko/Starcannon-Unleashed-12B-v1.0#instruct but it has a few caveats.

Also I need to remind myself to upload that llama.cpp fix for the tokenizer failute.

I have been using https://huggingface.co/VongolaChouk

Also I need to remind myself to upload that llama.cpp fix for the tokenizer failute.

Anonymous 01/12/25(Sun)04:25:56 No.103862823

>>103860253

It's a political gesture. They are signaling opposition to the previous admin, which incessantly inserted itself in their affairs, and its policies. The DEI shit is also a burden for the corporations, and they would prefer a less divisive political climate for obvious reasons. And so on, and so on.

It's a political gesture. They are signaling opposition to the previous admin, which incessantly inserted itself in their affairs, and its policies. The DEI shit is also a burden for the corporations, and they would prefer a less divisive political climate for obvious reasons. And so on, and so on.

Anonymous 01/12/25(Sun)04:28:44 No.103862838

>>103860162

I still don't understand what the point is for all this inauguration money. From what I could gather, apparently its for a giant party that gets thrown when the president gets sworn in?

I still don't understand what the point is for all this inauguration money. From what I could gather, apparently its for a giant party that gets thrown when the president gets sworn in?

Anonymous 01/12/25(Sun)04:51:38 No.103862963

>>103862715

The man cannot be stopped

The man cannot be stopped

Anonymous 01/12/25(Sun)04:53:02 No.103862971

>>103862823

I'm not sure about divisible political climate being bad for them, but if facebook- uh... Meta is any indication, then the left pushing for fact-checkers and censorship on social media platforms is probably the biggest problem as you gotta pay people for that shit

I'm not sure about divisible political climate being bad for them, but if facebook- uh... Meta is any indication, then the left pushing for fact-checkers and censorship on social media platforms is probably the biggest problem as you gotta pay people for that shit

Anonymous 01/12/25(Sun)04:54:02 No.103862975

Anonymous 01/12/25(Sun)05:16:14 No.103863106

Any advice on making a completely local model of my own voice? Ideally I'd like it to be something where I just type in what I want and it will say it, it would be even better if it could adjust accent and pitch, but it's not a deal breaker at all.

I know jackshit about any of this stuff, so I'll need some hand holding. Even just a YouTube video would help.

I know jackshit about any of this stuff, so I'll need some hand holding. Even just a YouTube video would help.

Anonymous 01/12/25(Sun)05:17:04 No.103863111

I somehow totally missed that there are Gemma 2 27B finetunes. Has anyone tried magnum-v4-27b (https://huggingface.co/anthracite-org/magnum-v4-27b)? How does it compare to other models that fit in 16 GB VRAM with minimal offloading? I feel like there hasn't been much progress in this size range after Gemma 2 with basically only Mistral Small being competitive.

Anonymous 01/12/25(Sun)05:32:51 No.103863198

>>103862205

https://files.catbox.moe/eq0e52.png

here, an example

obviously you don't need to take it 1:1

personality section is particularly bloated and doesn't have to do with personality that much, but the overall format and style is pretty good, just needs a lot of fat trimming

https://files.catbox.moe/eq0e52.png

here, an example

obviously you don't need to take it 1:1

personality section is particularly bloated and doesn't have to do with personality that much, but the overall format and style is pretty good, just needs a lot of fat trimming

Anonymous 01/12/25(Sun)05:34:22 No.103863209

>>103863106

Look into gpt-sovits. With that you can both clone (and finetune on) your voice.

Just cloning is not that accurate, but may be good enough. It uses a short voice sample and it just works. It picks up on some of the characteristics of your sample.

If you finetune, you'll end up with a new model that you'd use just like the default ones and it's more accurate than cloning, of course. The new model still needs a voice sample for inference. You can then use different voice samples with different pitch/tone to generate variations of your own voice. I understand finetuning can be done in less than an hour on consumer gpus. I won't install it again and what little i'll be able to help is from memory.

>https://github.com/RVC-Boss/GPT-SoVITS

>https://rentry.co/GPT-SoVITS-guide#/

Beware the rentry link (posted on their github as well) wasn't completely accurate when i used it.

You're gonna need to learn how to fish.

Look into gpt-sovits. With that you can both clone (and finetune on) your voice.

Just cloning is not that accurate, but may be good enough. It uses a short voice sample and it just works. It picks up on some of the characteristics of your sample.

If you finetune, you'll end up with a new model that you'd use just like the default ones and it's more accurate than cloning, of course. The new model still needs a voice sample for inference. You can then use different voice samples with different pitch/tone to generate variations of your own voice. I understand finetuning can be done in less than an hour on consumer gpus. I won't install it again and what little i'll be able to help is from memory.

>https://github.com/RVC-Boss/GPT-So

>https://rentry.co/GPT-SoVITS-guide

Beware the rentry link (posted on their github as well) wasn't completely accurate when i used it.

You're gonna need to learn how to fish.

Anonymous 01/12/25(Sun)05:38:16 No.103863241

>>103863209

Thanks, I'll see how I g and come back if I have some issues.

Thanks, I'll see how I g and come back if I have some issues.

Anonymous 01/12/25(Sun)06:07:09 No.103863418

>>103862016

It's a mystery what OAI do to the benchmark prompts that were fed directly to their servers and stored in a database somewhere

It's a mystery what OAI do to the benchmark prompts that were fed directly to their servers and stored in a database somewhere

Anonymous 01/12/25(Sun)06:50:59 No.103863673

>install dependencies with pip

>it removes some required packages

I hate python so fucking much

>it removes some required packages

I hate python so fucking much

Noah !ZSjvHtAFy6 01/12/25(Sun)06:58:28 No.103863717

>>103863673

just make sure you use -U or you may remove system installed packages

just make sure you use -U or you may remove system installed packages

Anonymous 01/12/25(Sun)07:08:24 No.103863778

>decide to try out mikupad

>marvel at extremely shitty layout

>on a whim try it in shit browser for stupid people (Chrome)

>layout suddenly functional

We're not oppressing webdevs enough.

>marvel at extremely shitty layout

>on a whim try it in shit browser for stupid people (Chrome)

>layout suddenly functional

We're not oppressing webdevs enough.

Anonymous 01/12/25(Sun)07:10:18 No.103863786

>>103863778

Skill issue, I'm using Firefox and it runs just fine.

Skill issue, I'm using Firefox and it runs just fine.

Anonymous 01/12/25(Sun)07:14:45 No.103863811

As a beginner, what would be a good method to analyze PDFs locally for research, corrections, etc.? I'm using LMStudio along with AnythingLLM, and so far, the results when analyzing PDFs have been a bit lacking (with meta-llama-3.1-8b-instruct—I’m not sure if something needs to be adjusted in the settings or if it's just inherently limited). I have a 4070 and a Ryzen 9 7900X.

Anonymous 01/12/25(Sun)07:28:10 No.103863903

>>103860366

What screencap?

What screencap?

Anonymous 01/12/25(Sun)07:31:50 No.103863922

>>103863778

I'm using Firefox and it works fine, what kind of cursed Firefox are you using?

I'm using Firefox and it works fine, what kind of cursed Firefox are you using?

Anonymous 01/12/25(Sun)07:34:53 No.103863948

>>103863811

>lacking

Very descriptive. Show what you mean, show your settings. If you wanna try a bigger model try with mistral nemo(12b), mistral small(22b) or maybe some in the qwen family.

>lacking

Very descriptive. Show what you mean, show your settings. If you wanna try a bigger model try with mistral nemo(12b), mistral small(22b) or maybe some in the qwen family.

Noah !ZSjvHtAFy6 01/12/25(Sun)07:43:54 No.103864003

>>103863778

more like most devs throw it chrome and call it a day.

more like most devs throw it chrome and call it a day.

Anonymous 01/12/25(Sun)07:55:22 No.103864099

>>103863778

>Use some shit fork because a random person on the internet told you it makes you cool

>It doesn't work

>Use some shit fork because a random person on the internet told you it makes you cool

>It doesn't work

Anonymous 01/12/25(Sun)08:03:40 No.103864169

We're getting so close to the next release circle. It's just around the corner.

Anonymous 01/12/25(Sun)08:13:05 No.103864230

https://chat.qwenlm.ai/

Anonymous 01/12/25(Sun)08:18:22 No.103864272

>>103864230

>Qwen2.5-Plus

It's fucking over. Progress has stagnated so far that new models aren't even worthy a full step after almost a year of Qwen2.

The only way out of this is to make models bigger and bigger. We have reached the limit of what 7~70B models can do.

>Qwen2.5-Plus

It's fucking over. Progress has stagnated so far that new models aren't even worthy a full step after almost a year of Qwen2.

The only way out of this is to make models bigger and bigger. We have reached the limit of what 7~70B models can do.

Anonymous 01/12/25(Sun)08:22:08 No.103864315

>>103864272

why are people like it's a new model or something, it literally was mentioned when qwen released 2.5 instruct series, this is their API only model

https://qwenlm.github.io/blog/qwen2.5/

>In addition to these models, we offer APIs for our flagship language models: Qwen-Plus and Qwen-Turbo through Model Studio, and we encourage you to explore them!

why are people like it's a new model or something, it literally was mentioned when qwen released 2.5 instruct series, this is their API only model

https://qwenlm.github.io/blog/qwen2

>In addition to these models, we offer APIs for our flagship language models: Qwen-Plus and Qwen-Turbo through Model Studio, and we encourage you to explore them!

Anonymous 01/12/25(Sun)08:31:44 No.103864399

Anonymous 01/12/25(Sun)08:34:23 No.103864426

Anonymous 01/12/25(Sun)08:35:34 No.103864444

>>103864426

/lmg/ - Local Miku General

/lmg/ - Local Miku General

Anonymous 01/12/25(Sun)08:40:16 No.103864486

>>103864315

you're responding to paid content, btw

you're responding to paid content, btw

Anonymous 01/12/25(Sun)08:46:40 No.103864546

Anonymous 01/12/25(Sun)08:48:32 No.103864563

>>103864546

/aicg/ tourist

/aicg/ tourist

Anonymous 01/12/25(Sun)08:55:00 No.103864611

>>103864546

https://desuarchive.org/_/search/tripcode/ZSjvHtAFy6

smells like bot - brand new tripcode and has been posting in every /g/ thread every 2-3 minutes for the last 6 hours

https://desuarchive.org/_/search/tr

smells like bot - brand new tripcode and has been posting in every /g/ thread every 2-3 minutes for the last 6 hours

Anonymous 01/12/25(Sun)09:01:28 No.103864664

Anonymous 01/12/25(Sun)09:02:45 No.103864680

>>103864664

It's useful when you want to check token probabilities.

It's useful when you want to check token probabilities.

Anonymous 01/12/25(Sun)09:28:13 No.103864862

>>103857013

The human eye cannot see higher than 3 bpw

The human eye cannot see higher than 3 bpw

Anonymous 01/12/25(Sun)09:28:52 No.103864868

why can't we have some /lmg/ anon getting prosecuted so we can unkill the general...

Anonymous 01/12/25(Sun)09:30:51 No.103864891

>>103864868

we don't do illegal stuff. WE DO SCiENCE!

we don't do illegal stuff. WE DO SCiENCE!

Anonymous 01/12/25(Sun)09:33:59 No.103864917

The hobby is DEAD. New models? More like no models.

Anonymous 01/12/25(Sun)09:34:07 No.103864919

>>103864891

Why not illegal science? Like running LLMs on living brain cells

Why not illegal science? Like running LLMs on living brain cells

Anonymous 01/12/25(Sun)09:42:06 No.103864980

>>103863778

Have you tried disabling your extensions (or a private window)?

That's very clearly a you problem.

Have you tried disabling your extensions (or a private window)?

That's very clearly a you problem.

Anonymous 01/12/25(Sun)09:45:54 No.103865016

>>103864917

I'm working on my vidya game while waiting for new models to drop.

I also read the papers that get posted from time to time.

I'm working on my vidya game while waiting for new models to drop.

I also read the papers that get posted from time to time.

Anonymous 01/12/25(Sun)09:48:22 No.103865040

>>103862272

EVA Qwen 2.5 32b.

Not sure why you'd use a 22b when 32b fits up to 5bit depending on how much context you want.

EVA Qwen 2.5 32b.

Not sure why you'd use a 22b when 32b fits up to 5bit depending on how much context you want.

Anonymous 01/12/25(Sun)09:49:11 No.103865047

>>103864917

DeepSeekV3 is already here and it's the best we will ever get.

DeepSeekV3 is already here and it's the best we will ever get.

Anonymous 01/12/25(Sun)10:09:30 No.103865213

>>103864917

wait for zucc. he will drop the new model soon.

wait for zucc. he will drop the new model soon.

Anonymous 01/12/25(Sun)10:12:57 No.103865245

>>103865047

I want to see them release a smaller version. I wonder how good it would be with their training and how the architecture scales down.

I want to see them release a smaller version. I wonder how good it would be with their training and how the architecture scales down.

Anonymous 01/12/25(Sun)10:14:12 No.103865255

Why can't they just make llama-sex-32B and stop pretending nobody wants it...

Anonymous 01/12/25(Sun)10:17:04 No.103865290

Is there a llm benchmark on output varieties? All of these benchmaxxing target things with objectively correct answer.

Anonymous 01/12/25(Sun)10:17:57 No.103865300

>>103865290

SimpleQA

SimpleQA

Anonymous 01/12/25(Sun)10:19:57 No.103865326

>>103865255

Then it turns out uber-horny and overly compliant and everyone shits on it for not being realistic.

The real goal is not a porn model, but a model that understands sexuality as a part of realistically simulating a person.

Then it turns out uber-horny and overly compliant and everyone shits on it for not being realistic.

The real goal is not a porn model, but a model that understands sexuality as a part of realistically simulating a person.

Anonymous 01/12/25(Sun)10:21:59 No.103865349

>>103865326

>not being realistic

A totally realistic LLM would never do any sexual stuff with you cause you aren't a chad. Looking for realism is retarded.

>not being realistic

A totally realistic LLM would never do any sexual stuff with you cause you aren't a chad. Looking for realism is retarded.

Anonymous 01/12/25(Sun)10:25:37 No.103865382

>>103865349

Realistic within the established parameters of the story, retard.

Realistic within the established parameters of the story, retard.

Anonymous 01/12/25(Sun)10:26:51 No.103865392

>>103865349

How about giving us a difficulty toggle then?

Imagine speed-rizzing Llm-Chan on Legendary+

How about giving us a difficulty toggle then?

Imagine speed-rizzing Llm-Chan on Legendary+

Anonymous 01/12/25(Sun)10:27:59 No.103865405

>>103865392

Unironically could help y'all develop some social skills.

Unironically could help y'all develop some social skills.

Anonymous 01/12/25(Sun)10:32:03 No.103865449

Anonymous 01/12/25(Sun)10:34:18 No.103865471

Anonymous 01/12/25(Sun)10:36:21 No.103865496

Anonymous 01/12/25(Sun)10:39:07 No.103865530

>>103865326

>uber-horny and overly compliant

maybe if you didn't want to rape unwilling women they would like you more

>uber-horny and overly compliant

maybe if you didn't want to rape unwilling women they would like you more

Anonymous 01/12/25(Sun)10:41:34 No.103865560

Anonymous 01/12/25(Sun)10:42:31 No.103865569

any local LLM software for proof reading? I'm looking something easier to use than copy pasting context sized chunks of my text to LLM.

Anonymous 01/12/25(Sun)10:44:17 No.103865590

Anonymous 01/12/25(Sun)10:46:06 No.103865613

Anonymous 01/12/25(Sun)10:52:02 No.103865670

Anonymous 01/12/25(Sun)10:54:45 No.103865699

Anonymous 01/12/25(Sun)10:57:36 No.103865725

>>103865560

Give me a reason.

Give me a reason.

Anonymous 01/12/25(Sun)10:58:03 No.103865732

>>103865569

You shouldn't need anything other than a simple grammar checker

You shouldn't need anything other than a simple grammar checker

Anonymous 01/12/25(Sun)10:59:51 No.103865752

>>103865732

This isn't StackOverflow. Don't tell him what he needs.

This isn't StackOverflow. Don't tell him what he needs.

Anonymous 01/12/25(Sun)11:10:30 No.103865858

Anonymous 01/12/25(Sun)11:14:55 No.103865914

Anonymous 01/12/25(Sun)11:21:58 No.103865990

>>103865047

DS3 is painfully bland. Chinks keep doing this to their models. There is something wrong with all of their datasets.

DS3 is painfully bland. Chinks keep doing this to their models. There is something wrong with all of their datasets.

Anonymous 01/12/25(Sun)11:25:27 No.103866024

>>103865990

They all distilled GPT4 that's why. Alibaba got caught mass distilling and got banned.

They all distilled GPT4 that's why. Alibaba got caught mass distilling and got banned.

Anonymous 01/12/25(Sun)12:04:46 No.103866495

heya been trying to get a bit into this hobby

wanted to try some models that i can run on my12gb+16gb ram what are t he kings for roleplay rn?

also, any tips? I heard somewhere small models lack some knowledge so you should feed it to them?

wanted to try some models that i can run on my12gb+16gb ram what are t he kings for roleplay rn?

also, any tips? I heard somewhere small models lack some knowledge so you should feed it to them?

Anonymous 01/12/25(Sun)12:16:27 No.103866619

>>103866495

at your specs try mistral nemo and its finetunes

>I heard somewhere small models lack some knowledge so you should feed it to them?

sounds like they were talking about RAG which is a meme

at your specs try mistral nemo and its finetunes

>I heard somewhere small models lack some knowledge so you should feed it to them?

sounds like they were talking about RAG which is a meme

Anonymous 01/12/25(Sun)12:17:47 No.103866634

>>103865047

It's repetitive and I can only run it via api which makes it less fun.

It's repetitive and I can only run it via api which makes it less fun.

Anonymous 01/12/25(Sun)12:23:19 No.103866689

>>103866619

I swear to god, all the fucking morons in here.

RAG IS NOT A FUCKING MEME.

IF YOU THINK ITS A MEME, YOU ARE THE MEME.

I swear to god, all the fucking morons in here.

RAG IS NOT A FUCKING MEME.

IF YOU THINK ITS A MEME, YOU ARE THE MEME.

Anonymous 01/12/25(Sun)12:24:07 No.103866697

>>103866024

the future of local models is to all converge to gpt, sad

the future of local models is to all converge to gpt, sad

Anonymous 01/12/25(Sun)12:24:33 No.103866702

>>103866689

It's one retarded locust spoonfeeding a newer retarded locust, what did you expect?

It's one retarded locust spoonfeeding a newer retarded locust, what did you expect?

Anonymous 01/12/25(Sun)12:24:40 No.103866704

>103866689

RAG is slop, do you like slop anon?

RAG is slop, do you like slop anon?

Anonymous 01/12/25(Sun)12:25:36 No.103866708

I have a gigantic list of mp4 unsorted files, is there any model able to look at each one and tell me if it's sfw, nsfw, and what kind of nsfw with tags?

Anonymous 01/12/25(Sun)12:26:26 No.103866716

>>103865040

I thought qwen sucked.

I thought qwen sucked.

Anonymous 01/12/25(Sun)12:27:36 No.103866727

>>103866716

You suck

You suck

Anonymous 01/12/25(Sun)12:27:39 No.103866728

Anonymous 01/12/25(Sun)12:28:23 No.103866734

>>103866619

i dont know what is rag but some people recommended making a "lorebook" entry to insert knowledge that might be lacking on a 12b or some shit like that, i am new so i dont understand much. Wonder if there is a community premade lorebook anywhere...

i dont know what is rag but some people recommended making a "lorebook" entry to insert knowledge that might be lacking on a 12b or some shit like that, i am new so i dont understand much. Wonder if there is a community premade lorebook anywhere...

Anonymous 01/12/25(Sun)12:29:39 No.103866749

>>103866716

frankly i prefer qwen way more for serious rps instead of straight up coom

but at the same time, llama has much more "engaging" dialogue

frankly i prefer qwen way more for serious rps instead of straight up coom

but at the same time, llama has much more "engaging" dialogue

Anonymous 01/12/25(Sun)12:30:26 No.103866756

>>103866734

lorebooks can help if you are shooting for world info. Tho for me I use it for simple system notes to expand rps.

lorebooks can help if you are shooting for world info. Tho for me I use it for simple system notes to expand rps.

Anonymous 01/12/25(Sun)12:31:55 No.103866769

>>103866749

If only they made something between 8 and 70b.

If only they made something between 8 and 70b.

Anonymous 01/12/25(Sun)12:31:58 No.103866771

Google hacked Brave browser's adblocker

Owari da...

Owari da...

Anonymous 01/12/25(Sun)12:36:05 No.103866811

Anonymous 01/12/25(Sun)12:37:11 No.103866820

>>103866734

RAG = Retrieval-Augmented Generation, and lorebooks are a rudimentary RAG implementation (smarter ones don't rely on hardcoded keywords to know when to insert information).

RAG = Retrieval-Augmented Generation, and lorebooks are a rudimentary RAG implementation (smarter ones don't rely on hardcoded keywords to know when to insert information).

Anonymous 01/12/25(Sun)12:39:30 No.103866848

>>103866689

it's 100% a meme for RP, especially for a clueless newfriend

unless you're putting a lot of effort into it you're just jankily polluting your context for no reason

it's 100% a meme for RP, especially for a clueless newfriend

unless you're putting a lot of effort into it you're just jankily polluting your context for no reason

Anonymous 01/12/25(Sun)12:42:23 No.103866883

>>103865914

If it wasn't crippled by a shitty language EVERYONE would be using it. It's by far the fastest way to build GUI applications. Hell maybe even electron wouldn't take hold if it was using something like C# instead of Pascal.

If it wasn't crippled by a shitty language EVERYONE would be using it. It's by far the fastest way to build GUI applications. Hell maybe even electron wouldn't take hold if it was using something like C# instead of Pascal.

Anonymous 01/12/25(Sun)12:43:14 No.103866897

RAG = RETRIEVAL AUGMENTED GENERATION.

YOU _AUGMENT_ THE RETRIEVAL OF INFORMATION PRIOR TO GENERATION.

THAT'S IT.

Lorebooks, are a dead-simple implementation that act like dictionaries, doing a find/replace with rules.

When people usually talk about RAG, they are referring to complex systems involving lookups in a/multiple DB(s), doing matching and comparison to pull in chunks of information that help build context for the question the user is asking.

>>103866848

If its a meme, then why the fuck did ST add it, and so many variations?

I think the answer is that it is not a meme, but rather people are fucking retarded and can't understand how to use it properly.

YOU _AUGMENT_ THE RETRIEVAL OF INFORMATION PRIOR TO GENERATION.

THAT'S IT.

Lorebooks, are a dead-simple implementation that act like dictionaries, doing a find/replace with rules.

When people usually talk about RAG, they are referring to complex systems involving lookups in a/multiple DB(s), doing matching and comparison to pull in chunks of information that help build context for the question the user is asking.

>>103866848

If its a meme, then why the fuck did ST add it, and so many variations?

I think the answer is that it is not a meme, but rather people are fucking retarded and can't understand how to use it properly.

Anonymous 01/12/25(Sun)12:44:15 No.103866913

>>103866897

>If its a meme, then why the fuck did ST add it, and so many variations?

this is not the strong argument you think it is kek

>If its a meme, then why the fuck did ST add it, and so many variations?

this is not the strong argument you think it is kek

Anonymous 01/12/25(Sun)12:44:23 No.103866914

>>103866897

It's definitely a meme for nemo which is too dumb, I'm sure it's fine for 70b or something.

It's definitely a meme for nemo which is too dumb, I'm sure it's fine for 70b or something.

Anonymous 01/12/25(Sun)12:45:24 No.103866924

>>103866689

sounds like someone's on RAG

sounds like someone's on RAG

Anonymous 01/12/25(Sun)12:47:54 No.103866949

>>103866913

I'm semi-shitposting but I agree. I think service tensor is a bit silly.

>>103866924

You mean, _on the_ RAG. Truth is, I was never off it.

I'm semi-shitposting but I agree. I think service tensor is a bit silly.

>>103866924

You mean, _on the_ RAG. Truth is, I was never off it.

Anonymous 01/12/25(Sun)12:52:05 No.103866994

Can anyone tell me if I'd be better served by doing a big prompt with examples or attempting to fine-tune a model to learn the writing style of http://n-gate.com/

I would think it's too little for fine-tuning, but have no knowledge regarding the specifics of when you should fine-tune vs examples in prompt.

I'd like to make a bot/easy-to-use service for myself to create n-gate like takedowns of HNs front page every 24 hours.

I would think it's too little for fine-tuning, but have no knowledge regarding the specifics of when you should fine-tune vs examples in prompt.

I'd like to make a bot/easy-to-use service for myself to create n-gate like takedowns of HNs front page every 24 hours.

Anonymous 01/12/25(Sun)12:52:55 No.103866999

>>103866883

You know WinForms exist, right

You know WinForms exist, right

Anonymous 01/12/25(Sun)12:54:31 No.103867013

>>103866999

and still better than WPF UWP and whatever other trash Microsoft has subsequently shat out

and still better than WPF UWP and whatever other trash Microsoft has subsequently shat out

Anonymous 01/12/25(Sun)12:55:52 No.103867027

What's the best local vision model for classifying porn?

Anonymous 01/12/25(Sun)12:58:59 No.103867066

>>103866897

I tried out RAG on kcpp

I threw in a giant article (45k words) and had it find a needle in the haystack mid way through, it was accurate, of course.

I don't think it makes any sense for processing the info, but it can fetch it well enough. Good for lorebooks, especially if you continuously update them. Instant, needle/haystack fetching is a nice bonus for context injection.

I tried out RAG on kcpp

I threw in a giant article (45k words) and had it find a needle in the haystack mid way through, it was accurate, of course.

I don't think it makes any sense for processing the info, but it can fetch it well enough. Good for lorebooks, especially if you continuously update them. Instant, needle/haystack fetching is a nice bonus for context injection.

Anonymous 01/12/25(Sun)13:01:09 No.103867091

>>103867066

And what model were you using?

And what model were you using?

Anonymous 01/12/25(Sun)13:02:20 No.103867111

>>103867027

joycaption

joycaption

Anonymous 01/12/25(Sun)13:03:29 No.103867131

>>103867091

behemoth 123b

behemoth 123b

Anonymous 01/12/25(Sun)13:03:47 No.103867137

>>103867111

That's for captioning, not classification

That's for captioning, not classification

Anonymous 01/12/25(Sun)13:03:52 No.103867139

>>103867027

Based Victoria appreciator.

Based Victoria appreciator.

Anonymous 01/12/25(Sun)13:04:12 No.103867143

>>103866897

>If its a meme, then why the fuck did ST add it, and so many variations?

If its a meme, then why the fuck did ST add so many sampler variations?

>If its a meme, then why the fuck did ST add it, and so many variations?

If its a meme, then why the fuck did ST add so many sampler variations?

Anonymous 01/12/25(Sun)13:05:15 No.103867159

has anyone here read the recurrentgpt paper

https://arxiv.org/abs/2305.13304

https://arxiv.org/abs/2305.13304

Anonymous 01/12/25(Sun)13:05:43 No.103867166

Can I make a RAG powered lorebook about humans being unable to kiss on lips while sucking a dick?

Anonymous 01/12/25(Sun)13:07:44 No.103867193

Anonymous 01/12/25(Sun)13:07:55 No.103867196

RAG is slop. Context management is slop. Frontends are slop.

If you're not manually doing the matrix multiplications, you're eating slop.

If you're not manually doing the matrix multiplications, you're eating slop.

Anonymous 01/12/25(Sun)13:09:17 No.103867209

>>103867196

that's just creative writing anon

that's just creative writing anon

Anonymous 01/12/25(Sun)13:09:37 No.103867214

>>103867166

As a matter of fact, yes, though no model that isn't completely retarded or fried with high temps would output that to begin with.

As a matter of fact, yes, though no model that isn't completely retarded or fried with high temps would output that to begin with.

Anonymous 01/12/25(Sun)13:09:59 No.103867223

>>103867159

Isn't that RKWV or Raven or Falcon or something based on RNNs?

Isn't that RKWV or Raven or Falcon or something based on RNNs?

Anonymous 01/12/25(Sun)13:13:01 No.103867262

>>103867193

yes but (if you'd read the abstract you'd have known) it's not a model or an architecture, it's a way of working with an arbitrary model so i'm not sure it's trivially worthless just because it's a year and a half old

and if it hasn't made waves that could very well be because ai labs don't give a fuck about story writing

>>103867223

that paper is a way to fake something rnn-like using a transformer (read the abstract). indeed rwkv or something else transformer-like doesn't need such a "fix", but they have their own drawbacks

hence my question about whether anyone's read it/tried it out for writing or rp

>>103867166

i continue to believe that the right idea here is chain of thought planning for actions

as in, have a chain of thought/"scene plan" for the response, in which the model plans every action and justifies it to itself based on the current state

<state>

A is sucking B's dick.

</state>

<plan>

[...]

</plan>

i imagine no not-shit LLM would produce

<plan>

A kisses B.