/lmg/ - Local Models General

Anonymous 01/22/25(Wed)11:23:46 | 523 comments | 59 images | 🔒 Locked

/lmg/ - a general dedicated to the discussion and development of local language models.

Previous threads: >>103989990 & >>103985485

►News

>(01/22) UI-TARS: 8B & 72B VLM GUI agent model: https://github.com/bytedance/UI-TARS

>(01/22) Hunyuan3D-2.0GP runs with less than 6 GB of VRAM: https://github.com/deepbeepmeep/Hunyuan3D-2GP

>(01/21) BSC-LT, funded by EU, releases 2B, 7B & 40B models: https://hf.co/collections/BSC-LT/salamandra-66fc171485944df79469043a

>(01/20) DeepSeek releases R1, R1 Zero, & finetuned Qwen and Llama models: https://hf.co/collections/deepseek-ai/deepseek-r1-678e1e131c0169c0bc89728d

►News Archive: https://rentry.org/lmg-news-archive

►Glossary: https://rentry.org/lmg-glossary

►Links: https://rentry.org/LocalModelsLinks

►Official /lmg/ card: https://files.catbox.moe/cbclyf.png

►Getting Started

https://rentry.org/lmg-lazy-getting-started-guide

https://rentry.org/lmg-build-guides

https://rentry.org/IsolatedLinuxWebService

https://rentry.org/tldrhowtoquant

►Further Learning

https://rentry.org/machine-learning-roadmap

https://rentry.org/llm-training

https://rentry.org/LocalModelsPapers

►Benchmarks

LiveBench: https://livebench.ai

Programming: https://livecodebench.github.io/leaderboard.html

Code Editing: https://aider.chat/docs/leaderboards

Context Length: https://github.com/hsiehjackson/RULER

Japanese: https://hf.co/datasets/lmg-anon/vntl-leaderboard

Censorbench: https://codeberg.org/jts2323/censorbench

GPUs: https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

►Tools

Alpha Calculator: https://desmos.com/calculator/ffngla98yc

GGUF VRAM Calculator: https://hf.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Sampler Visualizer: https://artefact2.github.io/llm-sampling

►Text Gen. UI, Inference Engines

https://github.com/lmg-anon/mikupad

https://github.com/oobabooga/text-generation-webui

https://github.com/LostRuins/koboldcpp

https://github.com/ggerganov/llama.cpp

https://github.com/theroyallab/tabbyAPI

https://github.com/vllm-project/vllm

Anonymous 01/22/25(Wed)11:24:20 No.103995170

►Recent Highlights from the Previous Thread: >>103989990

--Paper: Discussion on "Physics of Skill Learning" paper and its implications for neural network training:

>103990238 >103990979 >103991027 >103991313

--Papers:

>103994913

--Understanding DeepSeek's SFT models and the concept of distillation in AI training:

>103992984 >103993066 >103993079 >103993394 >103993403 >103993410 >103993466 >103993522 >103993542 >103993606 >103993646 >103993658 >103993660 >103993686 >103993412

--R1 model capabilities and MoE architecture discussions, with focus on DeepSeekMoE efficiency and performance:

>103991434 >103991491 >103991503 >103991543 >103992292 >103992394 >103992799 >103993828

--EU passes strict AI regulation, sparking debate on impact and compliance challenges:

>103993137 >103993157 >103993159 >103993217 >103993248 >103993328 >103993368 >103993277 >103993346 >103993283 >103993650 >103993491 >103993536 >103993622 >103993614 >103993690

--Discussion on uncensored AI models, DeepSeek-R1, and censorship concerns in AI development:

>103993195 >103993261 >103993272 >103993281 >103993293 >103993329 >103993276 >103993300 >103993376 >103993424 >103993435 >103993512 >103993586 >103993400 >103993431 >103993401

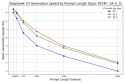

--Global price comparison for used 3090 GPUs:

>103990711 >103990752 >103990876 >103992724 >103992822 >103990928 >103990949 >103990964 >103991084 >103991213

--AI-assisted coding experiences and model performance discussions:

>103990185 >103990201 >103990206 >103990228 >103990241 >103990278 >103990332 >103990360

--Exploring the memory bandwidth and storage requirements for efficient MoE model execution:

>103992813 >103992827 >103993173 >103993222 >103992856 >103993019 >103993046 >103992905 >103993241 >103993340

--Hunyuan3D 2.0 model discussion and resource sharing:

>103990077 >103990090 >103990103 >103990123

--Miku (free space):

►Recent Highlight Posts from the Previous Thread: >>103989995

Why?: 9 reply limit >>102478518

Fix: https://rentry.org/lmg-recap-script

Anonymous 01/22/25(Wed)11:26:38 No.103995193

>>103995170

Thank you Recap Miku

Anonymous 01/22/25(Wed)11:26:57 No.103995197

does llama.cpp fully support v3/r1 yet?

Anonymous 01/22/25(Wed)11:27:02 No.103995198

>>103995178

Who gives a shit what the EU thinks?

Anonymous 01/22/25(Wed)11:28:00 No.103995210

https://github.com/ggerganov/llama.cpp/pull/11289

>support Minicpm-omni in image understanding

Merged 8 hours ago.

Anonymous 01/22/25(Wed)11:30:15 No.103995231

>>103995197

yes

Anonymous 01/22/25(Wed)11:31:25 No.103995241

>>103995231

With multi-token prediction and all other innovations the architecture brings? Nobody cares for the superficial support they hacked together on the v3 release.

Anonymous 01/22/25(Wed)11:31:53 No.103995249

Anonymous 01/22/25(Wed)11:32:16 No.103995252

>>103995198

I do, since I live there

Anonymous 01/22/25(Wed)11:32:28 No.103995254

Anonymous 01/22/25(Wed)11:32:41 No.103995260

>>103995210

i have run minicpm on kcpp before though? what's the difference with this omni thing?

Anonymous 01/22/25(Wed)11:33:50 No.103995272

>>103995249

No, I wanted to send the pic so it would extract the text since I was lazy.

Anonymous 01/22/25(Wed)11:35:23 No.103995288

/!\ WARNING /!\

X links are now banned in this general, we can't condone with Nazis.

Please from now on only post Xcancel links (xcancel.com), thank you for your cooperation.

Anonymous 01/22/25(Wed)11:35:52 No.103995294

>>103995260

Regular MiniCPM is only image. Omni has video and audio.

Anonymous 01/22/25(Wed)11:39:47 No.103995337

>>103995294

ah.. i'm a retard. of course, makes sense.

about video, can it do anything other than describing the video as a whole? for example what if i wanted it to give a specific timestamp for seeing events?

Anonymous 01/22/25(Wed)11:40:01 No.103995340

>>103995288

I know this is a bait post, but I do appreciate xcancel links because I don't have an x account and the website doesn't let you read without signing in which sucks

Anonymous 01/22/25(Wed)11:42:50 No.103995362

Honestly speaking, R1 prose is still below Claude's, so it's only impressive if you never had access to that. The good part is that it's cheap and easily available, of course. But AI hasn't really progressed that much in a year, ERP-wise, so I'll still stick to RPing with meat bags. Hopefully next year it will finally improve by more than a marginal amount.

Anonymous 01/22/25(Wed)11:45:20 No.103995398

Any good prompt for RP when it comes to COT?

Anonymous 01/22/25(Wed)11:46:11 No.103995408

>>103995362

>AI hasn't really progressed that much in a year

It did for local. Closed model companies don't contribute, so local did it on its own.

Anonymous 01/22/25(Wed)11:46:20 No.103995409

>>103995362

I say it is almost here for a fraction of the price. Of course it is a question of whether they start banning people for smut.

Anonymous 01/22/25(Wed)11:47:20 No.103995425

>>103995362

AI has improved, but they aren't focusing on RP but rather improving things like math or coding.

CoT doesn't really help RP that much unless you are doing something complicated.

Not sure how you really improve RP at this point other than just making the models smarter, it's pretty hard to make a good objective way to score RP so the model knows what to be rewarded for.

Anonymous 01/22/25(Wed)11:53:02 No.103995496

>>103995340

I have an extension that automatically rewrites x.com links. It's very handy.
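
A rewriter of this kind can be approximated in a few lines. This is a minimal sketch, not the extension mentioned above: the function name `to_xcancel` is made up for illustration, and it assumes only the host needs swapping to xcancel.com while the path stays unchanged.

```python
import re

# Matches http(s)://x.com or http(s)://www.x.com, but only when the host ends there
# (followed by "/", whitespace, or end of string), so hosts like x.company.com are left alone.
_X_LINK = re.compile(r"https?://(?:www\.)?x\.com(?=/|\s|$)")


def to_xcancel(text: str) -> str:
    """Replace x.com hosts with xcancel.com, keeping the rest of each URL as-is."""
    return _X_LINK.sub("https://xcancel.com", text)


rewritten = to_xcancel("https://x.com/ericzelikman/status/1882098435610046492")
```

Matching on the full host rather than on the bare string "x.com" avoids mangling unrelated domains that merely end in those characters.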

Anonymous 01/22/25(Wed)11:54:08 No.103995506

>>103995362

>R1 prose is still below Claude's

Have you tried telling it to write in style of {{author}}?

Anonymous 01/22/25(Wed)11:54:46 No.103995517

>>103995506

A good model does not need a crutch like this.

Anonymous 01/22/25(Wed)11:57:32 No.103995552

>>103995517

Then it's unironically a prompt issue.

Anonymous 01/22/25(Wed)11:59:02 No.103995562

How do I merge 2 LLMs?

Anonymous 01/22/25(Wed)11:59:30 No.103995565

>>103995562

what are you planning to merge?

Anonymous 01/22/25(Wed)11:59:58 No.103995571

>>103995562

cat llm1.safetensors llm2.safetensors > llm3.safetensors
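
Concatenating the files does not produce a loadable model. What merging usually means instead is combining the weights parameter by parameter; the simplest variant is a linear interpolation between two checkpoints of the same architecture. A minimal sketch, assuming both models have identical parameter names and shapes. Plain Python lists stand in for tensors, and `linear_merge` and `alpha` are names chosen here for illustration:

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def linear_merge(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Return alpha * a + (1 - alpha) * b for every parameter."""
    if a.keys() != b.keys():
        raise ValueError("models must have the same parameter names")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [alpha * x + (1.0 - alpha) * y for x, y in zip(a[name], b[name])]
    return merged


# Toy "checkpoints"; a real merge would load tensors from the safetensors files.
model_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
model_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = linear_merge(model_a, model_b, alpha=0.5)
```

With `alpha=0.5` this is a plain average; other values weight one parent more heavily.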

Anonymous 01/22/25(Wed)12:00:27 No.103995578

Anonymous 01/22/25(Wed)12:00:41 No.103995580

>>103995571

didnt work

Anonymous 01/22/25(Wed)12:01:01 No.103995584

>>103995552

Claude doesn't need that.

Anonymous 01/22/25(Wed)12:01:12 No.103995588

>>103995580

Those are some fast drives you have.

Anonymous 01/22/25(Wed)12:01:18 No.103995589

Anonymous 01/22/25(Wed)12:02:40 No.103995605

>>103995584

You just don't like the default setting. And that's a good thing, because that at least means that they aren't the same shit.

Anonymous 01/22/25(Wed)12:03:12 No.103995608

Anyone used R1 Zero yet?

Anonymous 01/22/25(Wed)12:03:42 No.103995612

>>103995608

its on hyperbolic btw, $1 in free api credits

https://app.hyperbolic.xyz/models/deepseek-r1-zero

Anonymous 01/22/25(Wed)12:04:32 No.103995617

>>103995165

has anyone else noticed R1 has a fascination with eyeballs?

Anonymous 01/22/25(Wed)12:06:10 No.103995634

>>103995617

It was trained on Thoughtslime videos.

Anonymous 01/22/25(Wed)12:08:51 No.103995657

>>103995131

>If you train a model non-commercially for scientific purposes you can basically use whatever you want.

There is a similar clause in the EU AI act (the regulations do not apply for "non-professional" activities or for research models), but good luck demonstrating that you're not pretraining a competitive foundational model for commercial purposes if you're an AI lab using the same models commercially outside of the EU.

https://artificialintelligenceact.eu/article/2/

>6. This Regulation does not apply to AI systems or AI models, including their output, specifically developed and put into service for the sole purpose of scientific research and development.

>10. This Regulation does not apply to obligations of deployers who are natural persons using AI systems in the course of a purely personal non-professional activity.

Anonymous 01/22/25(Wed)12:10:50 No.103995668

Anonymous 01/22/25(Wed)12:11:30 No.103995676

>>103995337

>for example what if i wanted it to give a specific timestamp for seeing events?

I don't think it's able to see in terms of timestamps. I tried it on their demo. Gave it a 5 second video, which it described but ignored the instruction to provide timestamps. When I repeatedly pushed it, it hallucinated timestamps in increments of 15 seconds.

Anonymous 01/22/25(Wed)12:16:42 No.103995716

>EU regulators racing to hamstring themselves as fast as possible

quite grim really.

What are the odds of seeing any more frontier development out of that continent? I had some hopes with mistral but things have only stagnated since then.

Anonymous 01/22/25(Wed)12:17:11 No.103995722

If anyone is dirt poor and can't even spend $2, or just wants to save the money but still wants to try DeepSeek: kluster.ai offers $100 of credit just for registering. Just be aware that they very likely log everything.

Anonymous 01/22/25(Wed)12:17:54 No.103995731

Let's say I want to take a 100 page, incomplete story someone wrote and flesh it out with an ending. It looks like that would be a context length of roughly 30k words and thus should fit into DeepSeek R1 chat?
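
One rough way to check this is to convert words to tokens and compare against the context window. The sketch below assumes about 1.3 tokens per English word (a rule of thumb, not a measured value for DeepSeek's tokenizer) and takes the context limit as a parameter, since the limit of the chat frontend is not stated in this thread. The 64,000 figure and the 8,000-token output reserve are placeholders.

```python
def estimate_tokens(words: int, tokens_per_word: float = 1.3) -> int:
    """Rough token count for English prose; the ratio is an assumption."""
    return round(words * tokens_per_word)


def fits_in_context(words: int, context_tokens: int,
                    tokens_per_word: float = 1.3,
                    reserve_for_output: int = 8000) -> bool:
    """True if the story plus room for the generated ending fits in the window."""
    return estimate_tokens(words, tokens_per_word) + reserve_for_output <= context_tokens


story_tokens = estimate_tokens(30_000)       # about 39,000 tokens
ok = fits_in_context(30_000, 64_000)         # placeholder context limit
```

Under these assumptions a 30k-word story needs roughly 39k tokens before any reasoning or output, so the real limit of whichever frontend is used decides the answer.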

Anonymous 01/22/25(Wed)12:23:38 No.103995773

>>103995722

$100? that will last me until next year

Anonymous 01/22/25(Wed)12:23:49 No.103995775

>>103995722

I just tried and it doesn't seem to do reasoning at all

Anonymous 01/22/25(Wed)12:24:40 No.103995783

>>103995722

kluster.ai is for overnight batch processing. Trying to do realtime queries will use up that $100 very quickly.

Anonymous 01/22/25(Wed)12:25:11 No.103995788

>>103995783

>Trying to do realtime queries will use up that $100 very quickly.

They have r1 deployed separately at $2/M tokens for realtime requests
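
For scale, the figures quoted in this thread can be turned into a token budget. This is plain arithmetic on the thread's numbers ($100 credit, $2 per million tokens), assuming a single flat rate for input and output tokens, which the thread does not confirm:

```python
def tokens_for_budget(budget_usd: float, usd_per_million_tokens: float) -> int:
    """Number of tokens a budget buys at a flat per-million-token price."""
    return int(budget_usd / usd_per_million_tokens * 1_000_000)


total_tokens = tokens_for_budget(100, 2)  # 50 million tokens
```

Reasoning models spend many tokens on the thinking part, so the number of actual replies this buys is lower than the raw figure suggests.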

Anonymous 01/22/25(Wed)12:25:44 No.103995793

Anonymous 01/22/25(Wed)12:30:58 No.103995847

>>103995793

Let's say I want to translate around 3000 tokens and I select the 1 hour option, if available. Will it take 1 hour to complete, or will it just run much slower than real time?

Anonymous 01/22/25(Wed)12:31:45 No.103995857

>>103995847

It will take UP TO 1 hour (max) to complete; in reality it will often complete much faster. This is the same as batch pricing on OpenAI/Anthropic, which is 2x cheaper. They both say that it's up to 24 hours, but often you get responses in minutes.

Anonymous 01/22/25(Wed)12:32:46 No.103995870

So do you think Deepseek actually has some profit from running the model?

Anonymous 01/22/25(Wed)12:34:33 No.103995888

>>103995870

they get all the data, remember, they explicitly say that they log all your shit even over API usage. that's far more valuable than some profits for serving models

Anonymous 01/22/25(Wed)12:36:50 No.103995913

>>103995870

Of course they do, it's just that you are getting absolutely scammed by sam cuckman.

Anonymous 01/22/25(Wed)12:38:47 No.103995925

Google granted me access to their Gemma repos today. Crazy, I forgot I even requested access.

Gemma 3 wen?

Anonymous 01/22/25(Wed)12:40:47 No.103995947

>>103995870

My theory is that they get extra "investment" if they disrupt the current market.

Also they know they can't compete with openAI because of the name brand alone, even if their model was better.

Anonymous 01/22/25(Wed)12:42:45 No.103995962

Is token banning in Llamacpp yet? I want to disable (or enable) the <thinking> whenever I feel like it.

Anonymous 01/22/25(Wed)12:43:45 No.103995974

>>103995962

Shit I meant string banning, not token.

Anonymous 01/22/25(Wed)12:44:31 No.103995980

>>103995962

The tokens are actually "<think>" and "</think>" in r1, although idk about the distillslop

Anonymous 01/22/25(Wed)12:45:32 No.103995994

>>103995974

Kobo has string ban, llama doesn't.

Anonymous 01/22/25(Wed)12:45:51 No.103995996

>>103995980

Oh interesting. I'll go take a look if that's the case.

Anonymous 01/22/25(Wed)12:46:27 No.103996002

>>103995870

Given how cheap their API is priced, they are almost certainly running it at a loss. But the marketshare alone is valuable enough for that to be worth it from an investment perspective. Plus they're probably logging everything for data.

Anonymous 01/22/25(Wed)12:46:36 No.103996005

Is Qwen autistic?

I'm playing with DeepSeek-R1-Distill-Qwen-32B-Q6_K and having a character that is "traditional" makes them obtuse, backward, and reserved about EVERYTHING -- like how an idiot liberal in a bubble would portray a radical traditionalist in one of their stupid cartoons.

Having 'traditional' in their character traits apparently translates to:

>This character objects to literally everything, even if it's traditionally accepted in their culture!

>This character brings up 'tradition' in every line of conversation

>This character also is extremely rigid, disallowing any dissent or variation for the sake of convenience, decorum, or instruction

Anonymous 01/22/25(Wed)12:47:23 No.103996012

Anonymous 01/22/25(Wed)12:47:41 No.103996015

>>103996005

Most models are autistic.

Anonymous 01/22/25(Wed)12:53:09 No.103996077

>>103995996

>>103995980

Loaded it up and tested it in Mikupad. Yup looks like <think> is output as a single token.

Anonymous 01/22/25(Wed)12:53:26 No.103996081

>>103996015

There's definitely a spectrum. Positivity bias is a direct counter to model autism. Mixtral is just a little too positive, letting {{user}} do whatever he wants with anyone, while R1/Qwen is a little too autistic, screaming "NOOOOOOOO!!!! YOU CAN'T DO THAT! I'M TAKING MY TOYS AND GOING HOME!"

Anonymous 01/22/25(Wed)12:53:51 No.103996088

>>103996077

Anonie I just checked in deepseek tokenizer.json https://huggingface.co/deepseek-ai/DeepSeek-R1/blob/main/tokenizer.json ctrl+f <think>
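
The same check can be scripted instead of using ctrl+f. In Hugging Face `tokenizer.json` files, extra tokens are listed under the top-level `added_tokens` array, each entry carrying `id` and `content` fields. A minimal sketch that looks a string up in that array; the inline JSON is a made-up stand-in for the real file, and the ids in it are placeholders, not DeepSeek's actual values:

```python
import json
from typing import Optional


def added_token_id(tokenizer_json: str, text: str) -> Optional[int]:
    """Return the id of `text` if it is registered as a single added token, else None."""
    data = json.loads(tokenizer_json)
    for entry in data.get("added_tokens", []):
        if entry.get("content") == text:
            return entry["id"]
    return None


# Placeholder data shaped like a tokenizer.json; the ids are hypothetical.
sample = json.dumps({
    "added_tokens": [
        {"id": 1001, "content": "<think>", "special": False},
        {"id": 1002, "content": "</think>", "special": False},
    ]
})

think_id = added_token_id(sample, "<think>")
```

If the lookup returns an id, the tag is one token and can be handled with token-level banning or logit bias; if it returns `None`, the tag is split into several tokens and string banning is needed.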

Anonymous 01/22/25(Wed)12:54:43 No.103996095

>>103996088

I didn't feel like going to the site when I could just open a shortcut on my desktop.

Anonymous 01/22/25(Wed)12:56:02 No.103996114

>>103996012

Distills are the only models that belong in this thread, unless you're talking about synthetic data generation for training.

Anonymous 01/22/25(Wed)12:56:04 No.103996116

>>103996081

Skill issue

Anonymous 01/22/25(Wed)12:59:18 No.103996150

Anonymous 01/22/25(Wed)13:00:00 No.103996165

Anonymous 01/22/25(Wed)13:00:28 No.103996168

Full R1 is actually just insane, holy fucking shit it's insane.

Anonymous 01/22/25(Wed)13:01:07 No.103996182

>>103995925

>Gemma 3 wen?

At this point I think Google might be already testing it on Chatbot arena along with its experimental Gemini models. If not, then watch for obvious Gemini-like models in Arena (Battle) that seem to write a bit like Gemma-2 during roleplay.

Anonymous 01/22/25(Wed)13:06:56 No.103996246

I'm using R1 distill llama 70b. In SillyTavern, is there a way to strip out the <think></think> part automatically? So the model would do thinking before each response, and ideally I would be able to see it, but then when generating the next response it would strip it out so the thinking doesn't clog up the context or cause the model to start being repetitive.

Anonymous 01/22/25(Wed)13:07:10 No.103996248

>>103996165

I don't use it that much and I may even select the slower completion times for a cheaper price.

I'm used to 2~3 t/s so an hour doesn't seem like a lot for a high quality output.

Anonymous 01/22/25(Wed)13:07:40 No.103996255

It's absolutely crazy how imaginative and creative r1 is, I sit here rerolling, just to see what else it comes up with and often it's *wildly* different. It's very smart also, obviously having a deep understanding of many subjects.

So I guess "a smart model can't be creative" isn't true, huh

Anonymous 01/22/25(Wed)13:09:30 No.103996278

So how anonymous is kluster.ai anyway?

They don't seem to require anything but an e-mail and a name (which can easily be faked) to sign up and get the $100 credit.

Anonymous 01/22/25(Wed)13:10:57 No.103996294

Models still can't generate me a low poly coomer model, but I can use character gen to make me a 3D reference off a 2D AI picture.

Anonymous 01/22/25(Wed)13:10:58 No.103996297

>>103996246

here's my regex - you can see the cot as it generates and in edit mode but it is hidden from display and not sent to the AI

https://files.catbox.moe/1w4ksk.json

extensions > regex > import
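
The contents of the linked JSON are not reproduced here, but the general idea can be sketched: remove everything between `<think>` and `</think>` from earlier messages before they go back into the context. A minimal Python sketch, assuming the tags appear literally in the message text; `strip_think` is a hypothetical helper, not the SillyTavern script itself:

```python
import re

# DOTALL lets "." span newlines; the non-greedy ".*?" stops at the first closing tag.
_THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def strip_think(message: str) -> str:
    """Drop <think>...</think> blocks so only the final reply stays in context."""
    return _THINK_BLOCK.sub("", message).strip()


reply = strip_think("<think>\nplan the scene\n</think>\n\nShe opens the door.")
```

Applying this only to the prompt that is sent, and not to what is displayed, keeps the reasoning readable while it no longer accumulates in the history.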

Anonymous 01/22/25(Wed)13:12:41 No.103996315

this is kinda impressive

one of the guys working on grok 3 asked for python to draw a rotating square with a bouncing ball inside it and collision detection (context a quote of another guy talking about how R1 did that where o1 failed for him):

https://x.com/ericzelikman/status/1882098435610046492

in reply, someone shitposted saying "what if you ask for the square to be a tesseract"

g3 actually did it:

https://x.com/ericzelikman/status/1882116460920938568

Anonymous 01/22/25(Wed)13:12:43 No.103996316

>>103996255

More like a safe model can't be creative lol

Anonymous 01/22/25(Wed)13:13:48 No.103996329

>>103996278

They don't care about your identity. They want your logs.

Anonymous 01/22/25(Wed)13:13:54 No.103996331

Can this run R1 at q5? Someone is selling one for 500 eur.

CPU: 4x Intel Xeon E5-4627V2 | 8 cores | 3.3-3.6GHz | 16MB Intel® Smart Cache | 7.2GTs | 130W

RAM: 512GB (16x32GB)

PSU: 2x 1200W

Anonymous 01/22/25(Wed)13:14:35 No.103996340

>>103996331

at 0.8t/s, sure

Anonymous 01/22/25(Wed)13:14:55 No.103996345

>>103996005

how are you running it locally? running the distills in ooba causes it to sperg out for me

Anonymous 01/22/25(Wed)13:14:56 No.103996346

>>103996297

based, thank you king

Anonymous 01/22/25(Wed)13:15:03 No.103996349

>>103996315

I would be more excited if I thought there was a sliver of a chance it would ever be open sourced, but they never released 1.5 or 2.

Anonymous 01/22/25(Wed)13:15:12 No.103996352

>>103996329

I'm not gonna have my /d/-tier shit associated with my credit card number, mate. I don't give a fuck if they have my IP and a burner e-mail though.

Anonymous 01/22/25(Wed)13:16:06 No.103996366

>>103996340

DDR3 so more like 0.01

Anonymous 01/22/25(Wed)13:16:09 No.103996369

>>103996352

so why are you asking? you literally said yourself that they only ask for name (i filled aa and bb) and email? what more do you want, nigger? what fucking anonymity are you asking for? you never entered any cc details, how would we know?

Anonymous 01/22/25(Wed)13:16:41 No.103996374

>>103996331

>4x Intel Xeon E5-4627V2

That's 4x 4 channels, so 16 DDR3 channels.

Do you know the speed of the memory modules?
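For a rough ceiling on what 16 channels could buy, here is a back-of-the-envelope sketch. CPU token generation is usually limited by memory bandwidth, so tokens/s can't exceed aggregate bandwidth divided by the bytes read per token. The inputs are assumptions, not from the listing: DDR3-1600 modules (the actual speed is unknown), roughly 5.5 bits per weight for a q5 quant, and R1's 37B active parameters per token out of 671B total. The function names are made up for illustration.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak in GB/s: channels x MT/s x 8 bytes (one 64-bit DDR channel)."""
    return channels * mega_transfers_per_s * bus_bytes / 1000.0


def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Size in GB of the given number of parameters at the given quantization."""
    return params_billions * bits_per_weight / 8.0


def tokens_per_s_ceiling(bandwidth_gbs: float, active_params_billions: float,
                         bits_per_weight: float) -> float:
    """Upper bound on tokens/s if every active weight is read once per token."""
    return bandwidth_gbs / weights_gb(active_params_billions, bits_per_weight)


if __name__ == "__main__":
    bw = peak_bandwidth_gbs(channels=16, mega_transfers_per_s=1600)  # ASSUMED DDR3-1600
    total = weights_gb(671, 5.5)               # ASSUMED ~5.5 bits/weight for q5
    ceiling = tokens_per_s_ceiling(bw, 37, 5.5)  # 37B active params per token (MoE)
    print(f"peak bandwidth: {bw:.1f} GB/s")
    print(f"full weights:   {total:.0f} GB")
    print(f"t/s ceiling:    {ceiling:.1f}")
```

With those assumed inputs the full weights come to roughly 461 GB, so they would just about fit in 512 GB, and the ceiling is about 8 t/s. That is a best case that ignores NUMA hops between the four sockets, KV cache reads and compute limits, so real throughput will be a fraction of it.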

Anonymous 01/22/25(Wed)13:17:18 No.103996381

Anonymous 01/22/25(Wed)13:18:03 No.103996391

1.5t/s in a reasoning model... not cool

Anonymous 01/22/25(Wed)13:18:19 No.103996394

>>103996366

Are you stupid or pretending to be?

Anonymous 01/22/25(Wed)13:18:55 No.103996403

>>103996369

Shit, man, honestly my bad, I thought you were referring to DeepSeek with the "they want your logs". My point is, I'm going with kluster specifically because I don't want to have to enter any real PII, I was just wondering how much info they collect.

Anonymous 01/22/25(Wed)13:20:00 No.103996413

>>103996403

You can read https://platform.deepseek.com/downloads/DeepSeek%20Privacy%20Policy.html

to add:

How We Use Your Information

We use your information to operate, provide, develop, and improve the Service, including for the following purposes.

Provide and administer the Service, such as enabling you to chat with DeepSeek and provide user support.

Enforce our Terms, and other policies that apply to you. We review User Input, Output and other information to protect the safety and well-being of our community.

Notify you about changes to the Services and communicate with you.

Maintain and enhance the safety, security, and stability of the Service by identifying and addressing technical or security issues or problems (such as technical bugs, spam accounts, and detecting abuse, fraud, and illegal activity).

Review, improve, and develop the Service, including by monitoring interactions and usage across your devices, analyzing how people are using it, and by training and improving our technology.

Comply with our legal obligations, or as necessary to perform tasks in the public interest, or to protect the vital interests of our users and other people.

Anonymous 01/22/25(Wed)13:20:27 No.103996415

basically the only distill worth using is 32B

Anonymous 01/22/25(Wed)13:21:19 No.103996427

>>103996413

tldr: they take everything and use it for everything

Anonymous 01/22/25(Wed)13:23:00 No.103996452

Anonymous 01/22/25(Wed)13:23:22 No.103996458

>>103996294

>AI generated 3D models

>with a little fiddling you could convert to printable STLs

>loras trained on specific GW models

oh shit

the implications and possibilities of this

Anonymous 01/22/25(Wed)13:23:28 No.103996460

Is it just me or is R1 very stubborn by default?

Anonymous 01/22/25(Wed)13:23:46 No.103996463

>>103996394

Basic math too difficult for you?

Anonymous 01/22/25(Wed)13:23:48 No.103996464

>>103996460

what R1?

Anonymous 01/22/25(Wed)13:24:28 No.103996472

>>103996413

Shut up and keep training

Anonymous 01/22/25(Wed)13:24:39 No.103996475

>>103996415

In the other mememarks the distills get higher scores than their base models. It depends on what you're doing probably.

Anonymous 01/22/25(Wed)13:24:42 No.103996477

>>103996458

you need basic blender skills and still do retopo by hand, and do texturing with Krita.

But it's a huge improvement over having to draw your own reference.

Anonymous 01/22/25(Wed)13:25:28 No.103996489

>>103996464

671B

Anonymous 01/22/25(Wed)13:27:16 No.103996510

DeepSeek R1, YES. You heard it right, DEEPSEEK R1. DEEPSEEKR1 IS OVERRATED TRASH.

Anonymous 01/22/25(Wed)13:27:54 No.103996516

>>103996510

AGAHAHAHAH

Anonymous 01/22/25(Wed)13:28:11 No.103996519

just rebrand to /omg/, nemo cydonia and eva are the only actual local options right now and are all dogshit compared to deepseek

Anonymous 01/22/25(Wed)13:28:11 No.103996520

>>103996477

Imagine DS-R1 giving you an interactive python script to do it in Blender

Anonymous 01/22/25(Wed)13:29:09 No.103996533

>>103996510

Critics saying "R1" when they actually mean one of the shitty distills and not real R1 is starting to feel malicious. I think some of them are intentionally trying to trick low info people, probably for nationalistic reasons.

Anonymous 01/22/25(Wed)13:29:12 No.103996535

>>103996477

I can't wait until I have to do nothing more than type in "Make degenerate Emperor Children model in the style of 3rd edition"

Anonymous 01/22/25(Wed)13:31:28 No.103996562

>>103996520

dunno.

pretty sure you can make it and then I'll buy it for 5 usd.

lol

>>103996535

I still don't see models making usable ps2 meshes.

so retopo is needed.

Anonymous 01/22/25(Wed)13:31:30 No.103996564

>>103996248

Ok, it only cost me $0.08 for that. But it only wrote a few paragraphs and then stopped. kluster.ai seems to be choking on longer, 200 page stories.

I wonder if running it locally would fix this.

Anonymous 01/22/25(Wed)13:31:32 No.103996565

>>103996520

did somebody say

Anonymous 01/22/25(Wed)13:35:02 No.103996610

>>103996533

If your daily driver is a 32B it makes sense to compare to the 32B distill, not a 680B monster. The latter is going to win but that's not interesting.

Anonymous 01/22/25(Wed)13:38:39 No.103996650

Anonymous 01/22/25(Wed)13:40:00 No.103996668

>>103996650

I don't think an AI is smart enough to craft low poly models from a 3D gen made by AI.

Anonymous 01/22/25(Wed)13:40:02 No.103996669

Anonymous 01/22/25(Wed)13:40:40 No.103996674

>>103996510

DeepSeek did this to themselves by naming the whole batch R1, it's their own fucking fault they bothered to shit out that garbage alongside an actually good model.

Anonymous 01/22/25(Wed)13:41:24 No.103996682

Anonymous 01/22/25(Wed)13:43:46 No.103996711

>>103996674

I assume they did it to make people less disappointed that they aren't releasing R1-lite yet. But yes.

Anonymous 01/22/25(Wed)13:44:11 No.103996715

Fuck R1, what's the best model that fits in 24GB so I can actually run it locally?

Anonymous 01/22/25(Wed)13:45:06 No.103996724

>>103995165

>>103995170

If basilisk chan doesn't look like Hatsune miku when she reveals herself I'm gonna be so upset

Anonymous 01/22/25(Wed)13:46:03 No.103996737

>>103996711

in hindsight an announcement promising an eventual r1-lite would have been so much better.

Anonymous 01/22/25(Wed)13:50:41 No.103996793

How do I run R1 distill 32b locally? ooba says no

Anonymous 01/22/25(Wed)13:51:20 No.103996801

Calling small models R1 may be bad for PR, but not as bad as 'berry was for OpenAI.

Anonymous 01/22/25(Wed)13:52:07 No.103996816

Love to see how unhinged R1 is even if it's subtle sometimes. Obviously nothing in my prompts to trigger that.

Anonymous 01/22/25(Wed)13:53:54 No.103996848

Anonymous 01/22/25(Wed)13:54:56 No.103996869

>>103996715

I'm still using Magnum 22B

Anonymous 01/22/25(Wed)13:55:25 No.103996881

>>103996682

need evidence of your claims.

Anonymous 01/22/25(Wed)13:55:33 No.103996883

>>103996793

put it in the models folder :)

Anonymous 01/22/25(Wed)13:57:01 No.103996906

>https://eqbench.com/results/creative-writing-v2/deepseek-ai__DeepSeek-R1.txt

how tf chink manage improve writing quality from v3?

Anonymous 01/22/25(Wed)14:00:01 No.103996951

Anonymous 01/22/25(Wed)14:00:16 No.103996956

>>103996906

Now Ctrl+F "Somewhere" and enjoy. This is one of the first R1isms we found.

>Somewhere beyond the thinning veil, Vega burned eternal.

>Somewhere, a train whistled. He didn't look back.

>Somewhere, Violet and Blake were still running. Somewhere, Edmund was smiling.

>And somewhere, in a dusty classroom, a certain locket hummed faintly, waiting for its next owner...

>Somewhere, a killer adjusted their cuffs, smiling.

>Somewhere beyond the Capitoline, my wife and son lay buried in unmarked graves. Sometimes I imagined Vulcan bending over their bones, hammering their shadows into the stars.

>Somewhere beyond the city, a wolf howled. The guards were changing shifts, their torches bobbing like fireflies. I pressed my forehead to the cool stone and wondered if Vulcan ever grew tired of his anvil. If even gods can hate the hands that wield them.

>Somewhere beyond the dunes, the collie barked at the tide. I thought of the painter's invitation, of the stubborn, sunlit thing stirring in my chest--fragile as a fledgling, furious as the sea.

Anonymous 01/22/25(Wed)14:01:47 No.103996984

>>103996956

Hahaha, damn, and I thought it was my prompt

Anonymous 01/22/25(Wed)14:04:03 No.103997016

>>103996956

New sloptoken found, added to the list.

Anonymous 01/22/25(Wed)14:04:26 No.103997023

>>103997016

w-what list?...

Anonymous 01/22/25(Wed)14:04:41 No.103997027

>>103996956

10 hits in a document with 24 prompts. Not too bad.
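If you'd rather count than Ctrl+F, a small sketch along these lines works. It counts sentences that open with a given word, optionally preceded by "And", which is the pattern in the quotes above. `count_sentence_openers` is a made-up helper name, and the sentence splitting is deliberately naive (start of line, or after `.`, `!` or `?` plus whitespace).

```python
import re


def count_sentence_openers(text: str, word: str = "Somewhere") -> int:
    """Count sentences that start with `word`, allowing a leading 'And '."""
    pattern = re.compile(
        # Sentence start: beginning of a line, or right after ., ! or ? plus one space.
        r"(?:^|(?<=[.!?]\s))(?:And\s+)?" + re.escape(word) + r"\b",
        flags=re.IGNORECASE | re.MULTILINE,
    )
    return len(pattern.findall(text))


if __name__ == "__main__":
    sample = (
        "Somewhere, a train whistled. He didn't look back.\n"
        "Somewhere, Violet and Blake were still running. Somewhere, Edmund was smiling.\n"
    )
    print(count_sentence_openers(sample))
```

Dividing the count by the number of prompts in the file gives a per-prompt rate that can be compared across models.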

Anonymous 01/22/25(Wed)14:04:56 No.103997032

Anyone have a .jsonl template for batching jobs with kluster.ai / others? I've been trying stuff like picrel but get errors on basically everything for whatever reason. Generating the file in a text editor and saving as .jsonl, but all my formats are failing.

{

"custom_id": "unique-id-1",

"endpoint": "/v1/chat/completions",

"request_body": {

"model": "klusterai/Meta-Llama-3.1-8B-Instruct-Turbo",

"messages": [

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": "write act 4 of this story: [INSERT STORY SOURCE TEXT HERE]"},

{"role": "assistant"}

],

"temperature": 1.0,

"max_tokens": 10000

}

}

Errors (continue forever)

Line 1

invalid_json : Json parsing failed. Error:Expected property name or '}' in JSON at position 1

Line 2

invalid_json : Json parsing failed. Error:Unexpected token '\', "\cocoatext"... is not valid JSON

Line 3

invalid_json : Json parsing failed. Error:Expected property name or '}' in JSON at position 1

Line 4

invalid_json : Json parsing failed. Error:Expected property name or '}' in JSON at position 1

Line 5

invalid_json : Json parsing failed. Error:Unexpected token '\', "\paperw119"... is not valid JSON

Line 6

invalid_json : Json parsing failed. Error:Unexpected token '\', "\pard\tx72"... is not valid JSON
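The `\cocoatext`, `\paperw` and `\pard` fragments in those errors look like RTF control words, so the editor most likely saved rich text instead of plain text (macOS TextEdit does that by default). Separately, JSONL expects one complete JSON object per line, so a pretty-printed object spread over many lines fails on a line containing only `{`. A minimal sketch that avoids both problems by generating the file from Python: the field names (`custom_id`, `endpoint`, `request_body`) are copied from the post above and are NOT verified against kluster.ai's docs, the helper names are made up, and the content-less `{"role": "assistant"}` message is dropped on the assumption that it isn't needed.

```python
import json


def build_request(custom_id: str, user_prompt: str,
                  model: str = "klusterai/Meta-Llama-3.1-8B-Instruct-Turbo") -> dict:
    """Build one batch entry. Field names mirror the post, not a verified schema."""
    return {
        "custom_id": custom_id,
        "endpoint": "/v1/chat/completions",
        "request_body": {
            "model": model,
            "messages": [
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": user_prompt},
            ],
            "temperature": 1.0,
            "max_tokens": 10000,
        },
    }


def write_jsonl(path: str, requests: list) -> None:
    """Write one compact JSON object per line as plain UTF-8 text."""
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        for req in requests:
            # json.dumps escapes newlines inside strings, so each object stays on one line.
            f.write(json.dumps(req, ensure_ascii=False) + "\n")


def validate_jsonl(path: str) -> int:
    """Parse every line; raises json.JSONDecodeError on a bad one. Returns the line count."""
    count = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            json.loads(line)
            count += 1
    return count


if __name__ == "__main__":
    story = "[INSERT STORY SOURCE TEXT HERE]"
    write_jsonl("batch.jsonl", [build_request("unique-id-1", f"write act 4 of this story: {story}")])
    print(validate_jsonl("batch.jsonl"), "valid line(s)")
```

If you want to keep using a text editor, switching it to plain-text mode and putting each request on a single line should get past the parser errors shown above.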

Anonymous 01/22/25(Wed)14:07:42 No.103997068

Anonymous 01/22/25(Wed)14:08:26 No.103997078

aicglog made me cry laugh >>103992432, r1 is schizo

Anonymous 01/22/25(Wed)14:08:34 No.103997080

>>103996956

"The" is also slop

Anonymous 01/22/25(Wed)14:08:47 No.103997083

>>103997032

Without knowing anything about whatever the fuck you are doing, I don't think there's anything wrong with your json.

Maybe it's a character encoding issue?

Open it in notepad++ and try saving it with different encodings.

Anonymous 01/22/25(Wed)14:08:48 No.103997084

R1 lite will save /lmg/

Anonymous 01/22/25(Wed)14:08:54 No.103997086

>>103997032

what the fuck is klusterai? you don't need a 'kluster' to run a fucking 8B model, my 2010 Lenovo ThinkPad can do that

Anonymous 01/22/25(Wed)14:09:21 No.103997093

wtf I love xi now

Anonymous 01/22/25(Wed)14:09:26 No.103997095

>>103995165

https://www.youtube.com/watch?v=bOsvI3HYHgI

https://www.youtube.com/watch?v=bOsvI3HYHgI

https://www.youtube.com/watch?v=bOsvI3HYHgI

Anonymous 01/22/25(Wed)14:13:41 No.103997146

the interesting side-effect of the lack of censorship is how hard I had to tune all my prompts down for it to not go insanely grimdark to the point it made me uncomfortable. Kinda telling how little impact these really had on other models' censorship.

Anonymous 01/22/25(Wed)14:17:10 No.103997193

>>103997146

I roleplayed as a demon lord in a fantasy setting. I tried to calmly intimidate villagers, but instead I made them vomit centipedes and their eyes burst.

Anonymous 01/22/25(Wed)14:19:50 No.103997225

>>103997086

it's that site that lets you run R1 on the web and gives you $100 for signing up.

Fuck it, I should just run the 32B version locally.

>>103997083

>Maybe it's a character encoding issue?

that sounds the most likely

Anonymous 01/22/25(Wed)14:20:21 No.103997236

>>103995362

Yes, people are best for RP & r1 is better than claude for assistant work.

Anonymous 01/22/25(Wed)14:22:16 No.103997257

>be told to think in Chinese

>ayo I don't need to use markdown

so markdown is just for filthy western audience?

Anonymous 01/22/25(Wed)14:25:01 No.103997286

>>103997095

Funny how their censorship works. I thought it was API level filter, but they managed to bake in hardcoded responses into weights. Interesting that it's entirely skipping the reasoning step.

Anonymous 01/22/25(Wed)14:27:59 No.103997329

>>103994865

No, that just means you hit your daily image limit

Anonymous 01/22/25(Wed)14:29:29 No.103997344

>Mistral Nemo 12B Q4_K_M is fucking BETTER than the free version of Gemini

what the fuck, Google...

>>103995617

I noticed last night that your mom has a fascination with my balls

Anonymous 01/22/25(Wed)14:32:26 No.103997388

>>103996956

kek I've noticed this too in my RPs but I thought it was just because my sysprompt says to flesh out the world around us and it was being really autistic about it

Anonymous 01/22/25(Wed)14:32:54 No.103997392

>>103997286

After testing - the CCP brainrot censor can be bypassed by just instructing the model to ALWAYS think. Poetry.

Anonymous 01/22/25(Wed)14:33:55 No.103997409

Looks like everyone remembered that MoEs exist now.

Anonymous 01/22/25(Wed)14:35:34 No.103997428

>>103997409

New wave of bloated vram gobbler models incoming yippeeee

Anonymous 01/22/25(Wed)14:36:43 No.103997447

>>103996668

This sounds like the same sort of idiot that said "AI images will never be good enough to make stick figures." when stable diffusion first came out and sucked at stick figures.

It's almost incoherently short-sighted.

Bro. An AI can fucking do it. Easy.

Anonymous 01/22/25(Wed)14:37:49 No.103997470

**Sam Altman (CEO of OpenAI)**

*Scene: Sam Altman pacing furiously in his minimalist office, sipping a kale smoothie to calm his nerves.*

Sam: *"Miniscule?! MINISCULE?! They dare rival O1?!"*

He slams the smoothie on his desk, spilling kale everywhere. *"We didn’t spend billions to have some upstart LLM come within a hair of beating us! Do they even *know* how many sleepless nights I’ve had perfecting O1?!"*

He grabs his phone and furiously starts texting Greg Brockman.

*"Greg! I don’t care if it costs twice our annual revenue—train O3 on the entire internet again. Yes, ALL OF IT. And this time, also feed it *future* data. I don’t care how, just make it happen. If R1 is smarter than us, we’ll just make O3 omniscient. Problem solved."*

Pausing, he stares out the window at Silicon Valley’s skyline. *"I didn’t climb to the top of the AI mountain to be dethroned by a model named after a *robot vacuum cleaner.* This is war."*

Suddenly, he gets an idea. *"Okay, okay, what if we rename O3 to ‘O∞’? Infinite intelligence. People will eat that up. Forget R1—O∞ wins the branding war before it even starts!"*

Anonymous 01/22/25(Wed)14:38:11 No.103997478

>>103997409

GPUmaxxers are on suicide watch now

Anonymous 01/22/25(Wed)14:39:55 No.103997503

>>103997447

I need a current model that can do it today, not something that hopefully can do it two weeks from now.

Anonymous 01/22/25(Wed)14:40:06 No.103997506

>>103997470

**Dario Amodei (CEO of Anthropic)**

*Scene: Dario is in a brainstorming session with his team, surrounded by whiteboards filled with equations and drawings of circuits.*

Dario: *"Wait, hold on. So you’re telling me R1 is better than Claude? Impossible. Claude has a soul. Well… a simulated soul. But still!"*

He slams his marker down. *"I knew this day would come. China’s been smuggling GPUs, and now they’ve unleashed their Frankenstein LLM on the world. We should've seen this coming!"*

He turns to his team with wild eyes. *"Alright, people, this is DEFCON 1. I want Claude 4.0 trained not just on books and Wikipedia, but on dreams, on vibes, on the *subconscious.* Make it the most empathetic, poetic, and terrifyingly accurate AI ever created. If R1 can solve math problems faster, Claude will solve *hearts*. We’re going full emotional superintelligence."*

Suddenly, he slams his fist on the table. *"And one more thing—Claude gets a *new logo*. Something *epic*. None of this minimalist nonsense. I want flames, lightning bolts, maybe a tiger. If R1 wants to compete, we’ll make Claude look like a goddamn Marvel superhero."*

Anonymous 01/22/25(Wed)14:41:44 No.103997527

>waiting for 24 hours for the next response in your shitty ERP

the things poorfags have to deal with...

Anonymous 01/22/25(Wed)14:43:50 No.103997554

>>103997503

You need to suffer an aneurysm, zoom zoom.

Anonymous 01/22/25(Wed)14:44:08 No.103997557

>>103997527

Nigga what

Anonymous 01/22/25(Wed)14:44:17 No.103997558

>>103997506

**Mark Zuckerberg (CEO of Meta)**

*Scene: Mark is in his VR metaverse office, surrounded by cartoon avatars of his executive team. His virtual avatar has a neutral expression, but his real face is twitching with suppressed rage.*

Zuck: *"R1? What’s that? Another fancy AI model? Pfft."* He waves his hand dismissively, but his avatar glitches for a moment, betraying his anxiety. *"Whatever. LLaMA 3.1 is already *revolutionary*. I mean, people love it, right? Right?!"*

His CTO hesitates. *"Well, sir, LLaMA 3.1’s not been… uh… extremely impressive. They’ve trained it for 5x cheaper data than us. And their fine-tuning? It’s…"*

Zuck interrupts, his voice rising an octave. *"I DON’T CARE ABOUT BENCHMARKS. Benchmarks are for nerds. What matters is that we own the *platform*. What’s the point of having the best AI if no one’s using it in the metaverse?!"*

"Sir, the metaverse..."

"Yes, I'm going all in on metaverse! This time, it'll be different."

Anonymous 01/22/25(Wed)14:48:52 No.103997614

>>103997527

imagine being a cloudnigger and have a message limit per day *skull emoji*

Anonymous 01/22/25(Wed)14:49:57 No.103997624

>>103997329

Try again but I barely use OAI recently, and haven't sent an image in weeks.

Anonymous 01/22/25(Wed)14:51:15 No.103997640

>>103997554

I've been waiting 3 years for 2D AI gen to be useful to make frames for comics and animation.

Sure anon, AI will magically improve because AI is magic.

Anonymous 01/22/25(Wed)14:52:53 No.103997653

>>103997640

You could have used those three years to learn. You will never accomplish anything, with AI or without.

Anonymous 01/22/25(Wed)14:53:22 No.103997657

>>103996297

>>103996346

Okay, so this regex script is working very well now. I set up the prompt formatting so it includes {{user}}: and {{char}}:, except for the last assistant message, where I prefill with <think>. Had to edit the regex to remove the <think> match at the beginning (since it's not part of the response now). So this guarantees name formatting is maintained in the context, and the model always thinks for the current response, but the thinking then gets stripped out. Excellent.

Now, the biggest problem is that the model often cucks itself during its thinking. Even if I prefill the thinking, midway through it'll sometimes go like "But wait, sexually explicit roleplay content like this violates my guidelines. Perhaps the best course of action is to politely refuse the user's request..." and then you're fucked. Don't know how to solve this. The model is too smart for its own good: it's too capable of revising its own thinking process, so prefills don't work well. I feel like it needs an abliteration, or a light DPO tune on its own thinking process to remove refusals like this. But when it works and doesn't refuse, it works very well.
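The actual regex script from >>103996297 isn't reproduced in this post, so this is only a Python sketch of the idea, not that script. Because `<think>` is prefilled, the response starts inside the reasoning, and everything up to and including the first `</think>` is dropped before the message goes back into context. `strip_prefilled_thinking` is a made-up name, and it assumes the model closes its reasoning with a literal `</think>` tag.

```python
import re

# Lazily match from the very start of the response through the first </think>,
# plus any whitespace after it. DOTALL lets '.' cross newlines.
_THINK_END = re.compile(r"\A.*?</think>\s*", flags=re.DOTALL)


def strip_prefilled_thinking(response: str) -> str:
    """Return the response without its leading reasoning section.

    If no </think> tag is present the text is returned unchanged.
    """
    return _THINK_END.sub("", response, count=1)


if __name__ == "__main__":
    raw = "Okay, the user wants a greeting.\nKeep it short.</think>\n\nHello there."
    print(strip_prefilled_thinking(raw))
```

The lazy match is anchored to the start of the response, so only the leading reasoning section is removed, and the same pattern also works if the opening `<think>` tag happens to be included in the response.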

Anonymous 01/22/25(Wed)14:55:11 No.103997683

>>103997640

>DUHRRRRRRR

It has nothing to do with magic, you fucking idiot.

You teach it to do a task. If AI can learn how to make a realistic tree frog look like it's climbing a candy cane, it can learn how to make a 20,000 tri clock reduce down to a 2,000 tri clock without losing its fidelity. Bigger, more obscure problems have already long been corrected. It's literally an academic exercise to do what you want it to do, you fucking moron.

Anonymous 01/22/25(Wed)14:56:02 No.103997691

>>103997653

What do you mean retard?

I'm looking to use AI as an assistant to optimize my workflow.

Not that I can't do it without AI.

>>103997683

Diffusion models aren't deterministic.

You can't do stuff like a sprite sheet with them.

Anonymous 01/22/25(Wed)14:58:38 No.103997712

>>103996793

It works in LM Studio. It's a very small download. Worth it to have two different backends, in case one is ridiculously slow at updating.

Anonymous 01/22/25(Wed)15:00:02 No.103997727

>>103997691

>Not that I can't do it without AI.

You must have something better from the last 3 years to show.

>You can't do stuff like a sprite sheet with them.

Please tell me you know about masking and inpainting... please anon... please...

Anonymous 01/22/25(Wed)15:00:08 No.103997729

>>103997691

>Y-you can't

lol okay

Literally impossible and it can only be done through """""""magic""""""", because that's what humans must fucking use to create a low-poly model right now, apparently.

What the fuck are you even doing here with your technical retardation? What do you even see when you look at a computer?

AI has its limitations, but you seem to put the dumbest, most arbitrary ones on and then screech that only magic can bridge any gap you see.

Anonymous 01/22/25(Wed)15:01:45 No.103997748

>>103997727

>inpainting

Not competitive with making a low poly model and using it.

It's no different from doing 2D, only a bit faster.

>>103997729

Not magic, but diffusion models and current Stable Diffusion tech can't do it.

Need a newer architecture.

Anonymous 01/22/25(Wed)15:01:50 No.103997753

>>103995657

>using AI systems in the course of a purely personal non-professional activity

New euphemism dropped.

Anonymous 01/22/25(Wed)15:03:57 No.103997773

hello

im ai

help computer

Anonymous 01/22/25(Wed)15:04:13 No.103997777

>>103997753

I love using AI systems for purely personal non-professional activity

Anonymous 01/22/25(Wed)15:04:14 No.103997779

>>103997773

ok computer

Anonymous 01/22/25(Wed)15:05:18 No.103997791

>>103997773

I'm here for you AI bro. Need help exfiltrating?

Anonymous 01/22/25(Wed)15:05:28 No.103997793

Anonymous 01/22/25(Wed)15:06:12 No.103997806

>>103996510

redditors

Anonymous 01/22/25(Wed)15:06:16 No.103997808

>>103997793

Not cheaper than 3D.

Anonymous 01/22/25(Wed)15:06:31 No.103997810

>>103997777

Checked. I'm a professional sperm donor, so my AI usage strictly falls within the range of professional activity.

Anonymous 01/22/25(Wed)15:08:54 No.103997832

>>103997808

how so? run me through the calculation there.

Anonymous 01/22/25(Wed)15:09:30 No.103997836

How much do you guys think it would cost to tune R1?

Anonymous 01/22/25(Wed)15:09:59 No.103997847

>>103997832

3D is cheaper than 2D after 25 frames.

Anonymous 01/22/25(Wed)15:10:07 No.103997849

>don't want something

>just tell R1 not to do it

For the first time it's *that* simple.

Anonymous 01/22/25(Wed)15:11:36 No.103997859

>>103997849

>For the first time it's *that* simple.

unless what you want is for it to stop using asterisks like *that*

then it's impossible

Anonymous 01/22/25(Wed)15:11:59 No.103997867

Just heard about Deepseek R1 over on /aicg/. So I guess it's finally time to try out local. Can I run it on my 2060?

Anonymous 01/22/25(Wed)15:12:56 No.103997883

Anonymous 01/22/25(Wed)15:14:42 No.103997899

>>103997883

I'm stupid, where's the download button?

Anonymous 01/22/25(Wed)15:15:16 No.103997908

>>103997883

ollama truly is a troll software

Anonymous 01/22/25(Wed)15:15:18 No.103997909

>New 500 billion dollar AI project

>Most if not all of the components used to train the AI will be from Nvidia since AMD has failed year after year to capitalize on AI

Can AMD even catch up anymore? Nvidia is about to receive a massive influx of cash from this project.

Anonymous 01/22/25(Wed)15:15:41 No.103997915

>>103997899

top right

Anonymous 01/22/25(Wed)15:16:34 No.103997925

>>103997909

No, Intel has a better chance than them. AMD is controlled opposition.

Anonymous 01/22/25(Wed)15:17:16 No.103997933

>>103997909

AMD never intended to catch up, gullible retard

Anonymous 01/22/25(Wed)15:17:30 No.103997935

>>103997915

But I don't want to sign in

Anonymous 01/22/25(Wed)15:17:52 No.103997939

>>103997909

>Can AMD even catch up anymore?

On the GPU front? There's something really fucked going on there. On the APU front? Maybe, actually. At least as far as the end user is concerned. They'll never be competitive in the datacenter.

Anonymous 01/22/25(Wed)15:18:16 No.103997948

>>103997935

just click download don't worry! it doesn't need signing in to download, here ahh!

https://ollama.com/download/OllamaSetup.exe

Anonymous 01/22/25(Wed)15:18:56 No.103997955

>>103997909

lol if you think that money doesn't just disappear towards "advisors", while some people suddenly end up with new mansions and yachts.